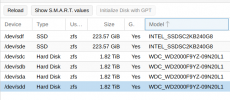

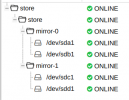

I installed PBS in a VM, attached one VirtIO drive for the datastore, and added the datastore through the GUI (Storage/Disks/...).

Everything works fine, except that backup is about 20% faster than restore (?!), and a traditional restore (vzdump) from the NFS backup server is about 3x faster.

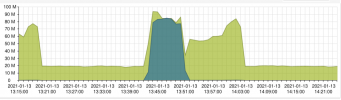

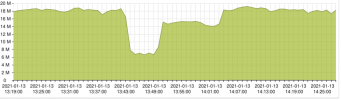

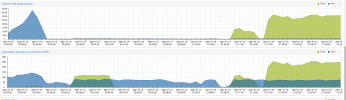

Also, on the PVE host where PBS runs, we see higher I/O wait during the restore than during the backup (?!):

Could you give me some hints on how to optimize and speed up this restore?

Thank you very much in advance.

BR

Tonci
