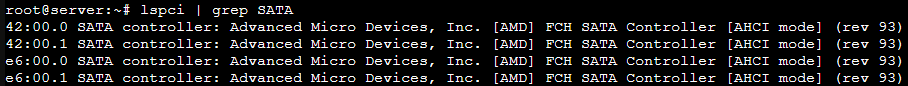

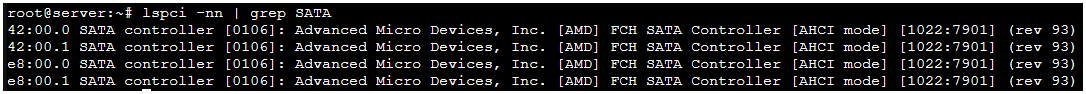

I have an AMD EPYC 9155 (Turin) CPU on an ASRock GENOAD8UD-2T/X550 motherboard.

Two MCIO ports can be configured for SATA mode (each port then appears as two 4-lane SATA controllers).

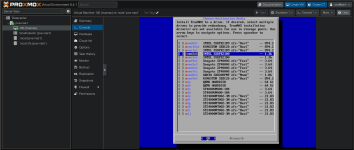
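If you are not sure which PCI addresses the SATA controllers end up at (here 42:00.0 and 42:00.1), a minimal Python sketch like the one below lists every device whose sysfs `class` file reports a SATA controller (class code 0x0106xx). It is not part of the original setup; the function name is made up for this note, and it assumes a Linux host with sysfs at the usual `/sys/bus/pci/devices`. `lspci` shows the same information.

```python
from pathlib import Path

# PCI class code 0x0106xx = mass storage controller (0x01), SATA subclass (0x06).
SATA_CLASS_PREFIX = 0x0106


def find_sata_controllers(sysfs_root="/sys/bus/pci/devices"):
    """Return sorted PCI addresses whose class code marks them as SATA controllers."""
    found = []
    for dev in sorted(Path(sysfs_root).iterdir()):
        class_file = dev / "class"
        if not class_file.is_file():
            continue
        # sysfs exposes the 24-bit class code as text, e.g. "0x010601" for AHCI
        class_code = int(class_file.read_text().strip(), 16)
        if class_code >> 8 == SATA_CLASS_PREFIX:
            found.append(dev.name)
    return found


if __name__ == "__main__":
    for addr in find_sata_controllers():
        print(addr)
```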

When the controllers were passed through to a VM, the VM would not start and failed with:
```
error writing '1' to '/sys/bus/pci/devices/0000:42:00.0/reset': Inappropriate ioctl for device
failed to reset PCI device '0000:42:00.0', but trying to continue as not all devices need a reset
kvm: -device vfio-pci,host=0000:42:00.0,id=hostpci0.0,bus=ich9-pcie-port-1,addr=0x0.0,multifunction=on: vfio 0000:42:00.0: hardware reports invalid configuration, MSIX PBA outside of specified BAR
TASK ERROR: start failed: QEMU exited with code 1
```

This occurred for both 42:00.0 and 42:00.1.

This is most likely a bug in the motherboard's configuration of the device: it reports an MSI-X PBA (Pending Bit Array) location that lies outside the BAR it points to, as explained in this comment: https://bugs.launchpad.net/qemu/+bug/1894869/comments/4

A similar case is discussed in this thread: https://forum.proxmox.com/threads/pci-passtrough-a100.143838/
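To check whether a device is affected before touching any config, `lspci -vv -s 42:00.0` prints the MSI-X `Vector table` and `PBA` locations (BAR and offset), which you can compare against the size of that BAR. The Python sketch below does the same comparison straight from sysfs. It is a hypothetical helper written for this note (the function names are made up), it assumes the standard MSI-X capability layout from the PCI specification, and it has to run as root, because unprivileged reads of the `config` file stop after the first 64 bytes.

```python
import struct
import sys
from pathlib import Path

# Offsets and IDs from the PCI specification (standard config header).
PCI_STATUS = 0x06            # 16-bit status register
PCI_STATUS_CAP_LIST = 0x10   # status bit: capability list present
PCI_CAPABILITY_LIST = 0x34   # pointer to the first capability
PCI_CAP_ID_MSIX = 0x11       # MSI-X capability ID


def find_msix_cap(config: bytes):
    """Walk the standard capability list; return the MSI-X capability offset or None."""
    (status,) = struct.unpack_from("<H", config, PCI_STATUS)
    if not status & PCI_STATUS_CAP_LIST:
        return None
    ptr = config[PCI_CAPABILITY_LIST] & 0xFC
    seen = set()
    # Stop on a null pointer, a loop, or a pointer past the bytes we could read.
    while ptr and ptr not in seen and ptr + 2 <= len(config):
        seen.add(ptr)
        if config[ptr] == PCI_CAP_ID_MSIX:
            return ptr
        ptr = config[ptr + 1] & 0xFC
    return None


def bar_sizes(resource_text: str):
    """Sizes of BAR0..BAR5 from a sysfs 'resource' file (lines of 'start end flags')."""
    sizes = []
    for line in resource_text.splitlines()[:6]:
        start, end, _flags = (int(x, 16) for x in line.split())
        sizes.append(end - start + 1 if end else 0)
    return sizes


def _fits(bir, offset, length, sizes):
    # BIR values 6 and 7 are reserved, so treat them (or an empty BAR) as not fitting.
    return bir < len(sizes) and offset + length <= sizes[bir]


def check_msix(config: bytes, sizes):
    """Return (BIR, offset, fits_in_bar) for the MSI-X table and PBA, or None."""
    cap = find_msix_cap(config)
    if cap is None or cap + 12 > len(config):
        return None
    # Message Control (16 bits), then Table Offset/BIR and PBA Offset/BIR (32 bits each).
    ctrl, table, pba = struct.unpack_from("<HII", config, cap + 2)
    entries = (ctrl & 0x7FF) + 1            # table size field is N - 1
    table_bir, table_off = table & 0x7, table & ~0x7
    pba_bir, pba_off = pba & 0x7, pba & ~0x7
    table_len = entries * 16                # 16 bytes per table entry
    pba_len = ((entries + 63) // 64) * 8    # one pending bit per entry, in QWORDs
    return {
        "entries": entries,
        "table": (table_bir, table_off, _fits(table_bir, table_off, table_len, sizes)),
        "pba": (pba_bir, pba_off, _fits(pba_bir, pba_off, pba_len, sizes)),
    }


if __name__ == "__main__":
    dev = Path("/sys/bus/pci/devices") / sys.argv[1]   # e.g. 0000:42:00.0
    config = dev.joinpath("config").read_bytes()
    sizes = bar_sizes(dev.joinpath("resource").read_text())
    print(check_msix(config, sizes))
```

A `False` in the `pba` tuple corresponds to QEMU's "MSIX PBA outside of specified BAR" error. The check is slightly stricter than QEMU's, which as far as I can tell only compares the PBA offset with the BAR size.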

The solution was to add the following to the VM's config file:

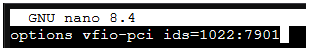

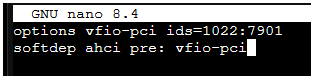

```
args: -set device.hostpci0.x-msix-relocation=bar2 -set device.hostpci1.x-msix-relocation=bar2
```

where the two SATA devices are defined in the config like this:

```
hostpci0: 0000:42:00.0,pcie=1
hostpci1: 0000:42:00.1,pcie=1
```

I am logging this in case anyone else hits it, and in case I ever forget and search for it later.
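If more devices need the workaround, the `args:` line can be generated from the config instead of typed by hand. This is a hypothetical Python helper, not part of Proxmox: it reads the `hostpciN` entries from the main section of a VM config (the file under `/etc/pve/qemu-server/`), ignores snapshot sections, and emits one `-set device.hostpciN.x-msix-relocation=bar2` per device. `bar2` is simply the value that worked here; `x-msix-relocation` is an experimental (`x-` prefixed) vfio-pci property, so its accepted values may differ between QEMU versions.

```python
import re

# Matches e.g. "hostpci0: 0000:42:00.0,pcie=1" and captures the key and the value.
HOSTPCI_RE = re.compile(r"^(hostpci\d+):\s*(\S+)", re.MULTILINE)


def msix_relocation_args(conf_text, bar="bar2", only=None):
    """Build an 'args:' line setting x-msix-relocation on the hostpciN devices.

    only: optional set of PCI addresses to restrict to, e.g. {"0000:42:00.0"}.
    Returns None if no matching hostpci entry is found.
    """
    # Snapshot sections start with a "[name]" line; keep only the main section.
    main = re.split(r"^\[", conf_text, maxsplit=1, flags=re.MULTILINE)[0]
    parts = []
    for name, value in HOSTPCI_RE.findall(main):
        address = value.split(",")[0]
        if only is not None and address not in only:
            continue
        parts.append(f"-set device.{name}.x-msix-relocation={bar}")
    return "args: " + " ".join(parts) if parts else None


if __name__ == "__main__":
    conf = "hostpci0: 0000:42:00.0,pcie=1\nhostpci1: 0000:42:00.1,pcie=1\n"
    print(msix_relocation_args(conf))
```

The `-set` target has to match the device `id` on the generated `kvm` command line, which `qm showcmd <vmid>` prints. The helper assumes plain `hostpciN` ids; the error log above shows `id=hostpci0.0`, so if your ids carry a function suffix like that, adjust the names accordingly.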
