Hello,

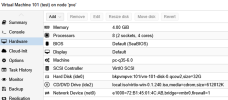

I have a PC with an Nvidia GTX 1650 (PCIe). On Proxmox 7.0, I used the configuration below to pass the GPU through to my Windows 10 VM.

I then did a fresh install of Proxmox 8.3, applied the same configuration, and restored my Windows VM from Proxmox 7.0:

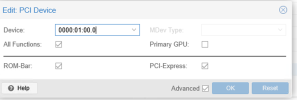

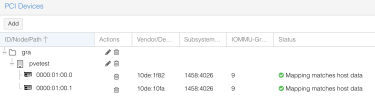

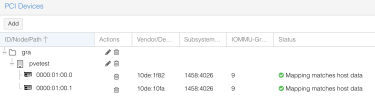

The only difference in the VM configuration is that I used resource mappings:

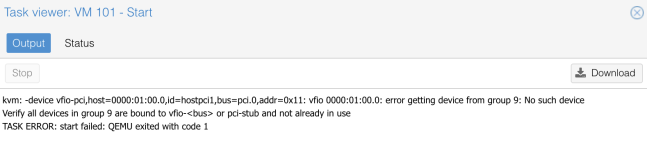

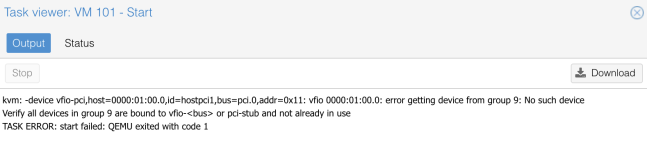

The VM tries to start but then stops, and I get the following error:

Frankly, I don't know why. Does anyone have an idea?

Thanks

Here is the configuration I used on Proxmox 7.0:

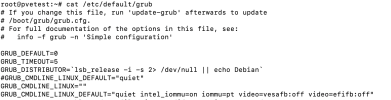

`/etc/default/grub`:

```
GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt video=vesafb:off video=efifb:off"
```
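These options only take effect if the host actually boots through GRUB. A Proxmox host installed on ZFS with UEFI typically boots via systemd-boot instead, where kernel options go in `/etc/kernel/cmdline` and are applied with `proxmox-boot-tool refresh` (`proxmox-boot-tool status` shows which bootloader is in use). A quick way to check what the running kernel received after `update-grub` and a reboot is to look for the expected options in `/proc/cmdline`. The sketch below does that; `check_cmdline` is a hypothetical helper name, and it only checks two of the options from the GRUB line above.

```shell
#!/bin/sh
# Sketch: check whether a kernel command line contains the IOMMU options
# from /etc/default/grub. check_cmdline is a hypothetical helper name.
check_cmdline() {
  # Pad with spaces so only whole options match (e.g. not "xiommu=pt").
  for opt in intel_iommu=on iommu=pt; do
    case " $1 " in
      *" $opt "*) echo "ok: $opt" ;;
      *) echo "missing: $opt" ;;
    esac
  done
}

# On the Proxmox host itself, check the live kernel command line.
if [ -r /proc/cmdline ]; then
  check_cmdline "$(cat /proc/cmdline)"
fi
```

If both options show as missing even though they are in `/etc/default/grub`, the host is probably not booting through GRUB.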

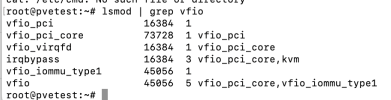

`/etc/modules`:

```
vfio
vfio_iommu_type1
vfio_pci
vfio_virqfd
```
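On Proxmox 8 kernels (6.2 and newer), `vfio_virqfd` was merged into the `vfio` module, so that entry is no longer needed and is left out of the check below. The sketch reads `lsmod` output and reports which of the remaining VFIO modules are loaded; `check_modules` is a hypothetical helper name, and a module built into the kernel rather than loaded would show as "not loaded" here.

```shell
#!/bin/sh
# Sketch: report which VFIO modules from /etc/modules are currently loaded.
# check_modules is a hypothetical helper; it reads lsmod-style text on stdin.
check_modules() {
  # Module names are the first lsmod column; skip the header row.
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in vfio vfio_iommu_type1 vfio_pci; do
    if printf '%s\n' "$loaded" | grep -qx "$m"; then
      echo "loaded: $m"
    else
      echo "not loaded: $m"
    fi
  done
}

if command -v lsmod >/dev/null 2>&1; then
  lsmod | check_modules
fi
```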

Then run `update-grub`.

`/etc/modprobe.d/vfio.conf`:

```
options vfio-pci ids=10de:1f82,10de:10fa disable_vga=1
```

Then run `update-initramfs -u`.
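After `update-initramfs -u` and a reboot, both IDs passed to `vfio-pci` (presumably the GPU and its HDMI audio function) should report `vfio-pci` as the driver in use. `lspci -nnk -d <vendor:device>` prints that information; the sketch below only extracts the "Kernel driver in use" line. `driver_in_use` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: show which driver is bound to each PCI ID listed in vfio.conf.
# driver_in_use is a hypothetical helper; it reads `lspci -k` output on stdin
# and prints whatever follows "Kernel driver in use:".
driver_in_use() {
  sed -n 's/^[[:space:]]*Kernel driver in use: //p'
}

if command -v lspci >/dev/null 2>&1; then
  for id in 10de:1f82 10de:10fa; do
    # -nn shows numeric IDs, -k shows kernel drivers, -d filters by vendor:device
    drv=$(lspci -nnk -d "$id" | driver_in_use)
    echo "$id -> ${drv:-no driver bound (or device not found)}"
  done
fi
```

If `nouveau` or another host driver shows up instead of `vfio-pci`, the host grabbed the card before `vfio-pci` could claim it.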

`/etc/modprobe.d/blacklist.conf`:

```
blacklist nvidia
blacklist nouveau
blacklist radeon
blacklist i2c_nvidia_gpu
blacklist nvidiafb
```

Then reboot.
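After the reboot, none of the blacklisted drivers should be loaded on the host. The sketch below filters `lsmod` output against the same five names as `blacklist.conf`; `loaded_blacklisted` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: list any blacklisted GPU drivers that are nevertheless loaded.
# loaded_blacklisted is a hypothetical helper; it reads lsmod-style text on stdin.
loaded_blacklisted() {
  # Module names are the first lsmod column; skip the header row.
  # "|| true" keeps the status 0 when nothing matches (grep exits 1 then).
  awk 'NR > 1 { print $1 }' | grep -x -e nvidia -e nouveau -e radeon \
    -e i2c_nvidia_gpu -e nvidiafb || true
}

if command -v lsmod >/dev/null 2>&1; then
  hits=$(lsmod | loaded_blacklisted)
  echo "${hits:-none of the blacklisted modules are loaded}"
fi
```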