Palo Alto Networks VM

- Thread starter g0rgonus



Do you mind sharing your hardware config? I was finally able to get it to boot, but I am stuck at an issue when trying to log in.

Made an account to reply to this.

Yes, I have PANOS as my primary firewall (as a VM). I have two dual-port NICs passed through via PCI passthrough, used for management, WAN, and LAN.

It was a heck of a time to set up.

I need some help with this as well. I'm running PVE 7.3 and cannot get PANOS v10 or v11 to run. It boots as far as the "vm login:" prompt but no further, and I cannot find any issues in the logs after rebooting into maintenance mode.

Looking at this thread, it appears this is possible, but with some stumbling blocks, e.g. adding a serial port. Is anything major needed beyond that? I'm currently running on ESXi but would like to migrate to Proxmox. I think building PANOS from scratch would be the ideal approach, followed by a selective config restore of sorts. Any thoughts from folks running PANOS on Proxmox would be appreciated. Thank you.

I got this working.

What I found is that with the processor type set to kvm64, the boot failed. With it set to "host" or a specific CPU type such as "Nehalem", the VM booted and I was able to reach the login page. So it seems PANOS does not like the kvm64 type.

The only other thing I have set up is OVS with SR-IOV enabled, but I think the boot issue is with the CPU type.
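A minimal sketch of the same CPU change from the Proxmox host shell. The VM ID `100` is a hypothetical placeholder, and the `run` helper is an assumption added for safety: it only prints each `qm` command unless `APPLY=1` is set, so the commands can be reviewed before they are run on the host.

```shell
#!/usr/bin/env bash
# Sketch: move a PA-VM guest off the kvm64 CPU model.
# VMID is a hypothetical placeholder; override it with your firewall's VM ID.
VMID="${VMID:-100}"

# Print each command; execute it only when APPLY=1 (on the Proxmox host).
run() { echo "+ $*"; if [ "${APPLY:-0}" = "1" ]; then "$@"; fi; }

# Pass the physical CPU model through to the guest...
run qm set "$VMID" --cpu host
# ...or use a named model instead, e.g.:
# run qm set "$VMID" --cpu Nehalem

# Show current vs pending values; the new CPU type applies on the next VM start.
run qm pending "$VMID"
```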

Edit: I have been able to upgrade to version 11.0; the admin page is accessible and I can log in. I have not tested passing actual data yet, as the system is sitting on my build bench. I will update once I get it connected to the network and passing data.

Edit 2: OK, I now have this set up with a standard Linux bridge carrying 2 VLANs (untrust and trust), using the virtio driver. I'm pulling 1.3 Gb/s down and 41 Mb/s up with basic allow-all policies and no inspection at this time.

The values below were captured while the 1.3 Gb/s download was running:

```
show running resource-monitor

Resource monitoring sampling data (per second):

CPU load sampling by group:
flow_lookup : 29%
flow_fastpath : 29%
flow_slowpath : 29%
flow_forwarding : 29%
flow_mgmt : 29%
flow_ctrl : 29%
nac_result : 0%
flow_np : 29%
dfa_result : 0%
module_internal : 29%
aho_result : 0%
zip_result : 0%
pktlog_forwarding : 29%
send_out : 29%
flow_host : 29%
send_host : 29%
fpga_result : 0%
```


In the processor setup, try changing the type to "host" and see if that helps.

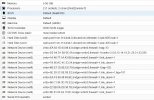


Came across this and got my Palo to work. The fix was adding the right hardware.

Setting the CPU type to host did not resolve my issue, but adding enough network interfaces did. I'm using Palo Alto 11 (KVM) and was getting errors like "unable to communicate to sysd" on the login screen.

I followed this guide:

https://gist.github.com/cdot65/595e09f7d599024d65a56ee1cfc3abb2

The only differences in my configuration were enabling hotplug for resources and adding network interfaces (I'm assuming the missing interfaces were the problem).

Final config looks like this:

Hardware

- RAM: 6 GB
- CPU: 2 cores (host)
- BIOS: SeaBIOS
- Display: Default
- Machine: q35
- SCSI Controller: VirtIO SCSI
- Network Device (net0): virtio, bridge=vmbr0
- Network Device (net1): virtio, bridge=vmbr0
- Network Device (net2): virtio, bridge=vmbr0
- Network Device (net3): virtio, bridge=vmbr0
- Serial Port (serial0): socket

Some relevant options

- OS Type: Linux
- Hotplug: Disk, Network, USB, Memory, CPU
- ACPI Support: Yes
- KVM hardware virtualization: Yes
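A minimal sketch that applies the configuration above to an existing VM from the host shell. The VM ID `100` is a hypothetical placeholder and the `run` dry-run helper is an assumption (commands are only printed unless `APPLY=1`). `--numa 1` is not part of the posted config: it is added because Proxmox requires NUMA to be enabled when memory hotplug is on.

```shell
#!/usr/bin/env bash
# Sketch: apply the posted hardware/options list to an existing VM with qm.
# VMID is a hypothetical placeholder; commands are only printed unless APPLY=1.
VMID="${VMID:-100}"

run() { echo "+ $*"; if [ "${APPLY:-0}" = "1" ]; then "$@"; fi; }

# Hardware: 6 GB RAM, 2 cores with the host CPU type, SeaBIOS, q35, VirtIO SCSI
run qm set "$VMID" --memory 6144 --cores 2 --cpu host \
  --bios seabios --machine q35 --scsihw virtio-scsi-pci

# Four virtio NICs on vmbr0 (per the thread, PAN-OS uses the first for management)
for i in 0 1 2 3; do
  run qm set "$VMID" "--net$i" virtio,bridge=vmbr0
done

# Serial console, OS type and the listed options.
# --numa 1 is an addition: Proxmox needs NUMA enabled for memory hotplug.
run qm set "$VMID" --serial0 socket --ostype l26 \
  --hotplug disk,network,usb,memory,cpu --numa 1 --acpi 1 --kvm 1
```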


So it looks like there are two requirements here. One is the host CPU change and the other is at least 2 interfaces, which makes sense because the first interface is dedicated to management and you need at least one for the data plane.
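A small sketch for checking an existing VM against those two requirements. It reads the output of `qm config <vmid>` on stdin; the function name `check_pavm_config` is hypothetical, and flagging a missing `cpu:` line (meaning the Proxmox default model is in use) is an assumption. Reports in this thread differ on which setting actually mattered, so a clean result does not guarantee that PAN-OS will boot.

```shell
#!/usr/bin/env bash
# Sketch: flag the two common blockers discussed above in a VM config:
#   1. CPU model left at kvm64 or the Proxmox default
#   2. fewer than two NICs (management + at least one dataplane)
# Usage on the host: qm config 100 | check_pavm_config   (100 is a placeholder)
check_pavm_config() {
  local cfg cpu nics status=0
  cfg=$(cat)

  # "cpu: host,flags=+aes" -> "host"; no cpu line means the default model is used
  cpu=$(printf '%s\n' "$cfg" | sed -n 's/^cpu: *//p' | cut -d, -f1)
  cpu=${cpu#cputype=}
  # Count net0:, net1:, ... lines (grep -c exits 1 on zero matches, hence || true)
  nics=$(printf '%s\n' "$cfg" | grep -cE '^net[0-9]+:' || true)

  if [ -z "$cpu" ] || [ "$cpu" = "kvm64" ]; then
    echo "WARN: cpu model is ${cpu:-not set (Proxmox default)}; try host or a named model"
    status=1
  fi
  if [ "$nics" -lt 2 ]; then
    echo "WARN: only $nics NIC(s); need management plus at least one dataplane NIC"
    status=1
  fi
  if [ "$status" -eq 0 ]; then
    echo "OK: cpu=$cpu, nics=$nics"
  fi
  return "$status"
}
```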


I have now moved mine to a beefier ESXi box, and with the better physical CPU I now push 1.4 Gb/s through it (the max download from my ISP) with the data plane CPU running at around 8%. For a $315 lab box, it is well worth the price for the throughput you can get out of it.

My Palo has been successfully migrated from ESXi to Proxmox. I was always aware of the need to have 10 NICs on the guest, but adding the serial port was the key.


I used sftp to copy over all the vmdk files and imported them using qm as shown below.

```shell
# Import both ESXi disk images into VM 113 on the ceph-pool1 storage
qm importdisk 113 xyz.vmdk ceph-pool1
qm importdisk 113 xyz_1.vmdk ceph-pool1
```
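A sketch of the follow-up step, assuming the imports landed as unused disks on VM 113. The volume names `vm-113-disk-0`/`vm-113-disk-1`, the VirtIO SCSI bus and booting from the first disk are all assumptions; the real names appear as `unusedN` lines in `qm config 113`. As in the other sketches, `run` only prints the commands unless `APPLY=1`.

```shell
#!/usr/bin/env bash
# Sketch: attach the disks imported with `qm importdisk` and boot from the first.
# VM 113 and ceph-pool1 come from the post; the volume names are assumptions,
# so compare them with the unusedN lines shown by `qm config 113` first.
VMID=113
DISK0="ceph-pool1:vm-113-disk-0"   # assumed volume for xyz.vmdk (system disk)
DISK1="ceph-pool1:vm-113-disk-1"   # assumed volume for xyz_1.vmdk

# Print each command; execute it only when APPLY=1 (on the Proxmox host).
run() { echo "+ $*"; if [ "${APPLY:-0}" = "1" ]; then "$@"; fi; }

run qm config "$VMID"
run qm set "$VMID" --scsihw virtio-scsi-pci --scsi0 "$DISK0" --scsi1 "$DISK1"
run qm set "$VMID" --boot order=scsi0
```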

Once it tested OK, I shut it down on Proxmox and brought the Palo back up on ESXi in order to deactivate the license on the old host. Then I shut down the Palo on ESXi for the last time and started it up on Proxmox, where I completed the final license activation on the new platform.

There is absolutely NO requirement to have 10 NICs on the VM. You can have "up to" 10 NICs on the VM, but you do not have to.


I am running very successfully with two NICs configured, without any issue. You do need a minimum of two: one for management and one for data to pass through the firewall.

A serial port is also not required, but it is great to have for seeing what is going on during boot.
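For anyone adding one, a minimal sketch of attaching a serial port and watching the boot from the host shell. The VM ID `100` and the dry-run `run` helper are assumptions, and the VM is assumed to be stopped when the port is added, since the new device is only picked up when the VM starts. `qm terminal` attaches to the port; Ctrl+O detaches.

```shell
#!/usr/bin/env bash
# Sketch: add a serial port to a (stopped) PA-VM guest and watch it boot.
# VMID is a hypothetical placeholder; commands are only printed unless APPLY=1.
VMID="${VMID:-100}"

run() { echo "+ $*"; if [ "${APPLY:-0}" = "1" ]; then "$@"; fi; }

run qm set "$VMID" --serial0 socket      # socket-backed serial port
run qm start "$VMID"
run qm terminal "$VMID" --iface serial0  # interactive console; Ctrl+O to detach
```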

Totally understand and agree; I think I chose the wrong words more than anything. In the past, when building VMs, I found that adding and deleting vNICs can leave interfaces out of order and harder to identify (you need to check the MAC). That's why I add 10 before the first power-up.


Here is my final config procedure:

- Rebuilt from a newly downloaded qcow2 disk, imported using `qm`
- Re-licensed the newly built Palo Alto VM-Series firewall
- Downloaded all AV and threat content (had I restored the config without it, policy references would have failed the commit)
- Restored the Palo config from a snapshot XML
- Applied all Palo version updates up to the recommended level, which today is 10.1.9

Things I have not tested further yet:

- Migrating to different hosts (all with the same processor)

EDIT: March 14 2023. A couple of tweaks, as I discovered a major issue on the external network. It turns out the AsusWRT-Merlin router, which was connected for some testing, has STP enabled by default. I was surprised, as DD-WRT, OpenWrt and Aruba APs do not have STP enabled by default. So I backed out the items shown in strike-through above in order to reach that solution. MSTP on the main switch combined with STP on the AsusWRT was causing major issues, and disabling STP on the AsusWRT-Merlin solved it instantly. Before finding this, I had also rolled back to 10.1.x. The PanOS "drop all STP packets" feature didn't appear to help, and I didn't do anything similar on the switch. The lesson there is that edge ports can introduce disruptive packets and take down your Palo; in all likelihood, the many Layer 2 interfaces I have bridged on the Palo underpin this. There is a residual issue, potentially related to how MAC addresses are learned on a Proxmox bridge / promiscuous mode, that I still have some tests to do on.


Hi all,

I had the same issue and tried all the options above to install my PanOS 10.x, but it always got stuck at the "PA-HDF login:" prompt, even after waiting 3-4 hours, across multiple tries with different VM hardware options. I always got the same error.

After a few hours of Google research, I found out that the KVM image file downloaded directly from the Palo Alto website does not work with the default settings in Proxmox.

Here are my settings which worked:

Secondly, it didn't work on the first start; I had to go into debug mode (maintenance mode) and reinstall PANOS from there. It took approx. 60 minutes. After that, all the error messages disappeared and I was able to log in with the default credentials.

Best Regards

Mashhood Ahmad

TechStadt DE


Hey everyone,

I have a PA VM (2 vCPUs) running on Proxmox, but there is one thing I don't like: the VM is always burning one core, meaning CPU usage sits around 50%. According to a Palo Alto knowledge-base article (sadly it is gone now and I didn't save it), this is intended to reduce latency when CPU cycles are needed for dataplane forwarding, so the CPU is polled constantly even when there may be no actual work to do. Given that my hardware setup is passive, I would be interested in reducing the load and heat, but I'm a networking guy, so I have no idea whether that is even possible or reasonable. There were even some variables to tune on the VM side. Anyway, that's why I'm asking in this thread: have you dealt with this?


I've been fighting this same issue tonight -- Palo VM hanging at PA-HDF login.

This is with PA-VM-KVM 10.1.6-h6 (and 10.1.6).

My Proxmox cluster is running 8.1.3

The funny part is, PA VM 10.2.7 installs and runs just fine with default options.

I noticed that right before the PA-HDF prompt, an error flashed very briefly on the console, and I had to screen-capture the console to read it.

It was an error from the "vm_license_check" script, saying: "option -m require argument".

-m is "memory size", so it appears Palo was having trouble reading the RAM size it uses to size the VM.

After a lot of playing around, I found that the "machine" type was the trick.

The default (i440fx) and q35 didn't work.

But pinning q35 to machine version 8.0 or earlier works for me.

The "ostype", NICs, CPU and SCSI type don't seem to make a difference.

There was also no need to boot to maintenance mode and re-install the OS once I had a working configuration.

This is a repeatable successful deployment for me on 8.1.3:

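```shell
# Create VM 453 pinned to the q35 8.0 machine version, with a serial console and one tagged virtio NIC
qm create 453 --name pavm3 --memory 4608 --cores 2 --ostype l26 --machine pc-q35-8.0 --serial0 socket \
  --net0 model=virtio,bridge=vmbr0,tag=100
# Import the downloaded PA-VM KVM image into the VMProx1 storage as qcow2
qm disk import 453 /mnt/pve/ISO/PaloAlto/PA-VM-KVM-10.1.6-h6.qcow2 VMProx1 --format qcow2
# Attach the imported disk on the VirtIO block bus and boot from it
qm set 453 --scsihw virtio-scsi-single --virtio0 VMProx1:453/vm-453-disk-0.qcow2 --boot order=virtio0
```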

I hope that helps someone!


I just banged my head against this for a couple of hours on PAN-OS 10.1.12. Changing the machine version from "Latest" to 8.0 resolved it for me too.
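A minimal sketch of that change for an existing VM, from the host shell. The VM ID `100` and the dry-run `run` helper are assumptions (commands are only printed unless `APPLY=1`). `qemu-system-x86_64 -machine help` lists the machine versions the installed QEMU offers, so it is worth confirming `pc-q35-8.0` is still listed before pinning it.

```shell
#!/usr/bin/env bash
# Sketch: pin an existing PA-VM guest to the q35 8.0 machine version
# instead of "Latest". VMID is a hypothetical placeholder; commands are
# only printed unless APPLY=1.
VMID="${VMID:-100}"

run() { echo "+ $*"; if [ "${APPLY:-0}" = "1" ]; then "$@"; fi; }

# Confirm the installed QEMU still offers pc-q35-8.0
run qemu-system-x86_64 -machine help
run qm set "$VMID" --machine pc-q35-8.0
# The machine version is applied on the next start of the VM
run qm shutdown "$VMID"
run qm start "$VMID"
```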


Thanks for sharing!