Out of space: really ?

- Thread starter digit23



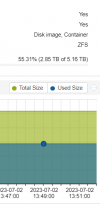

7x 1TB disks in raidz1 with the default 8K volblocksize would result in 3.5 TB of usable space for zvols. Because a ZFS pool becomes slow and fragments faster when it is filled up too much, only 2.8 to 3.15 TB (80-90%) should be used. And that's TB, so only 2.55 to 2.86 TiB.
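
The numbers above can be rechecked with a few lines of Python. This is only a sketch of the arithmetic; the 50% zvol efficiency is the figure from this post for 8K volblocksize on this pool, and the variable names are made up for illustration.

```python
# Rough capacity arithmetic for the pool described above (values from the post).
DISKS = 7
DISK_TB = 1.0           # decimal terabytes per disk
ZVOL_EFFICIENCY = 0.5   # assumption from the post: 8K zvols lose half the raw space

TB = 10**12             # bytes in a terabyte
TIB = 2**40             # bytes in a tebibyte


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return tb * TB / TIB


usable_tb = DISKS * DISK_TB * ZVOL_EFFICIENCY
low_tb, high_tb = usable_tb * 0.80, usable_tb * 0.90

print(f"usable for zvols:   {usable_tb:.2f} TB")
print(f"80-90% fill target: {low_tb:.2f} - {high_tb:.2f} TB")
print(f"same range in TiB:  {tb_to_tib(low_tb):.2f} - {tb_to_tib(high_tb):.2f} TiB")
```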

To avoid wasting half of your raw capacity, you would need to destroy all virtual disks of all VMs and recreate them after increasing the "Block size" of your ZFS storage. I would recommend a volblocksize of 32K, as a volblocksize that is too big again wastes space and performance when doing small IO.


Zvols consuming space on 7 disk raidz1 created with ashift=12:

| volblocksize | Space that data stored on the VMs' virtual disks actually consumes (parity loss not included) |
| --- | --- |
| 4K | 171% |
| 8K | 171% |
| 16K | 128% |
| 32K | 107% |
| 64K | 107% |
| 128K | 102% |
| 256K | 102% |
| 512K | 101% |
| 1M | 101% |
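
For anyone who wants to see where these percentages come from, here is a minimal Python sketch of the raidz allocation rule as I understand it: each block gets one parity sector per stripe of data sectors, and the total is padded up to a multiple of (parity + 1) sectors. The function names are made up for this example. The results agree with the table to within about a percentage point; the table's last digits differ slightly in a few rows, presumably due to rounding.

```python
import math


def raidz_alloc_sectors(volblocksize: int, disks: int = 7, parity: int = 1,
                        ashift: int = 12) -> int:
    """Sectors a single block of `volblocksize` bytes occupies on a raidz vdev."""
    sector = 1 << ashift                          # 4096 bytes for ashift=12
    data = math.ceil(volblocksize / sector)       # data sectors
    stripes = math.ceil(data / (disks - parity))  # one parity set per stripe
    total = data + parity * stripes
    # Pad the allocation to a multiple of (parity + 1) sectors.
    return math.ceil(total / (parity + 1)) * (parity + 1)


def relative_consumption(volblocksize: int, disks: int = 7, parity: int = 1,
                         ashift: int = 12) -> float:
    """Space used relative to the ideal case where only parity is lost."""
    data = math.ceil(volblocksize / (1 << ashift))
    raw_ratio = raidz_alloc_sectors(volblocksize, disks, parity, ashift) / data
    ideal_ratio = disks / (disks - parity)        # 7/6 for a 7 disk raidz1
    return raw_ratio / ideal_ratio


for kib in (4, 8, 16, 32, 64, 128, 256, 512, 1024):
    pct = relative_consumption(kib * 1024) * 100
    print(f"{kib:>5}K volblocksize: {pct:6.1f}%")
```

Changing `disks`, `parity` or `ashift` shows how the sweet spot moves for other pool layouts.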


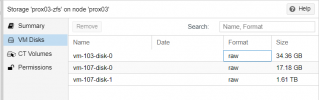

Yes, so 163% of the size in practice and 171% in theory. Holy shit!!! 275G -> 449G:

    root@prox01:~# zfs get volsize,refreservation,used vm/vm-108-disk-0
    NAME              PROPERTY        VALUE  SOURCE
    vm/vm-108-disk-0  volsize         275G   local
    vm/vm-108-disk-0  refreservation  449G   local
    vm/vm-108-disk-0  used            449G   -
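
As a quick sanity check of the "163% in practice, 171% in theory" figures, here is a small sketch using the values from the output above. The theoretical value assumes 8K volblocksize on a 7 disk raidz1 with ashift=12, where 2 data sectors end up occupying 4 sectors on disk.

```python
# Values taken from the `zfs get` output above.
volsize_g = 275
refreservation_g = 449

# What ZFS actually reserved for the zvol, relative to its nominal size.
observed = refreservation_g / volsize_g

# Theory for 8K blocks: 4 sectors allocated per 2 data sectors,
# compared with the ideal 7/6 ratio where only parity is lost.
theoretical = (4 / 2) / (7 / 6)

print(f"observed:    {observed:.0%}")
print(f"theoretical: {theoretical:.0%}")
```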

In case you are running DBs or have similar workloads that do a lot of small IO, I would highly recommend creating a striped mirror (raid10). 8x 1TB disks in a striped mirror would give you 4 times the IOPS performance, you could use a 16K volblocksize, it would be easier to add more storage when needed, reliability would be better, resilvering time would be way lower, and there is no padding overhead with zvols, so the full 4TB are really usable.

Padding overhead, by the way, only affects zvols and not datasets, so LXCs could use the full 7TB.

And if you still want to use a raidz1, the easiest way to change the volblocksize would be:

1.) In the PVE webUI go to Datacenter -> Storage -> select your ZFS storage -> Edit -> set something like "32K" as your "Block size"

2.) Stop and back up a VM

3.) Verify the backup

4.) Restore that VM from the backup, overwriting the existing VM

5.) Repeat steps 2 to 4 until all VMs are replaced.


I don't think cloning will work, as a "zfs clone" will still reference the old snapshotted data, and you can't destroy the original zvol/dataset that was cloned as long as the clone exists.

You really need to write all data again. If you have enough free space, you could copy the whole zvol locally using "zfs send | zfs recv", rename the old zvol, rename the new zvol, test whether the VM still works, and only then destroy the old zvol.
