Hello!

I managed to set up my first Proxmox machine. I'm using it for 2 gaming VMs.

I got everything working: PCIe passthrough, USB devices, etc.

The problem I'm having is that I'm running out of memory, and I can't seem to find a good balance between VM memory and host memory.

For some background, I have 32GB of RAM and a RAID0 ZFS setup on 2 SSDs.

I set the ZFS ARC to 4GB max, and I've read that Proxmox can run with 2GB.
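For reference, here's a small sketch of how the 4GB cap translates into the byte value that the `zfs_arc_max` module parameter expects. The helper name `arc_max_option` is just for illustration, and I'm assuming the line goes into a modprobe config file such as `/etc/modprobe.d/zfs.conf`.

```python
GIB = 1024 ** 3  # bytes per GiB


def arc_max_option(gib: int) -> str:
    """Format a modprobe options line capping the ZFS ARC at `gib` GiB.

    zfs_arc_max is given in bytes, so the GiB figure is converted first.
    """
    return f"options zfs zfs_arc_max={gib * GIB}"


# 4 GiB cap, as described above
print(arc_max_option(4))  # options zfs zfs_arc_max=4294967296
```

As far as I know, the module parameter only takes effect once the ZFS module is reloaded, which in practice usually means a reboot.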

So, I give each VM 13GB, and leave 6GB for the host.
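To spell out the arithmetic behind that split, here's a rough sketch using only the figures above. It's deliberately naive: it assumes each VM uses exactly its configured memory and that the ARC stays at its cap, and it doesn't model any per-VM overhead on the host side.

```python
TOTAL_GB = 32        # installed RAM
VM_GB = [13, 13]     # memory configured for each gaming VM
ARC_MAX_GB = 4       # ZFS ARC cap


def host_budget(total_gb, vm_gb, arc_max_gb):
    """Return (GB left for the host, GB left once the ARC is full)."""
    left_for_host = total_gb - sum(vm_gb)
    left_after_arc = left_for_host - arc_max_gb
    return left_for_host, left_after_arc


host_gb, after_arc_gb = host_budget(TOTAL_GB, VM_GB, ARC_MAX_GB)
print(f"host: {host_gb} GB, after ARC: {after_arc_gb} GB")  # host: 6 GB, after ARC: 2 GB
```

So on paper the 6GB covers the 4GB ARC plus the 2GB I've read Proxmox needs, with nothing to spare.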

I have 4GB of swap. I've tried vm.swappiness at both 0 and 1, and it makes no difference.

min_free_kbytes is set to 67584.

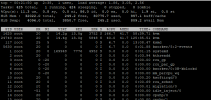

Here's the output of an OOM entry:

Code:

Jan 02 15:47:51 rimuru kernel: kworker/6:2 invoked oom-killer: gfp_mask=0xc2cc0(GFP_KERNEL|__GFP_NOWARN|__GFP_COMP|__GFP_NOMEMALLOC), order=3, oom_score_adj=0

Jan 02 15:47:51 rimuru kernel: CPU: 6 PID: 12442 Comm: kworker/6:2 Tainted: P O 5.4.78-2-pve #1

Jan 02 15:47:51 rimuru kernel: Hardware name: ASUS System Product Name/ROG STRIX B550-E GAMING, BIOS 1401 12/03/2020

Jan 02 15:47:51 rimuru kernel: Workqueue: events usbnet_deferred_kevent [usbnet]

Jan 02 15:47:51 rimuru kernel: Call Trace:

Jan 02 15:47:51 rimuru kernel: dump_stack+0x6d/0x9a

Jan 02 15:47:51 rimuru kernel: dump_header+0x4f/0x1e1

Jan 02 15:47:51 rimuru kernel: oom_kill_process.cold.33+0xb/0x10

Jan 02 15:47:51 rimuru kernel: out_of_memory+0x1ad/0x490

Jan 02 15:47:51 rimuru kernel: __alloc_pages_slowpath+0xd40/0xe30

Jan 02 15:47:51 rimuru kernel: __alloc_pages_nodemask+0x2df/0x330

Jan 02 15:47:51 rimuru kernel: kmalloc_large_node+0x42/0x90

Jan 02 15:47:51 rimuru kernel: __kmalloc_node_track_caller+0x258/0x320

Jan 02 15:47:51 rimuru kernel: ? __alloc_skb+0x87/0x1d0

Jan 02 15:47:51 rimuru kernel: __kmalloc_reserve.isra.61+0x31/0x90

Jan 02 15:47:51 rimuru kernel: __alloc_skb+0x87/0x1d0

Jan 02 15:47:51 rimuru kernel: __netdev_alloc_skb+0x3f/0x150

Jan 02 15:47:51 rimuru kernel: rx_submit+0x49/0x350 [usbnet]

Jan 02 15:47:51 rimuru kernel: usbnet_deferred_kevent+0x2c0/0x350 [usbnet]

Jan 02 15:47:51 rimuru kernel: ? pwq_dec_nr_in_flight+0x4d/0xa0

Jan 02 15:47:51 rimuru kernel: process_one_work+0x20f/0x3d0

Jan 02 15:47:51 rimuru kernel: worker_thread+0x25e/0x400

Jan 02 15:47:51 rimuru kernel: kthread+0x120/0x140

Jan 02 15:47:51 rimuru kernel: ? process_one_work+0x3d0/0x3d0

Jan 02 15:47:51 rimuru kernel: ? kthread_park+0x90/0x90

Jan 02 15:47:51 rimuru kernel: ret_from_fork+0x22/0x40

Jan 02 15:47:51 rimuru kernel: Mem-Info:

Jan 02 15:47:51 rimuru kernel: active_anon:6294531 inactive_anon:602548 isolated_anon:3

active_file:60855 inactive_file:285776 isolated_file:0

unevictable:1330 dirty:28628 writeback:483 unstable:0

slab_reclaimable:50329 slab_unreclaimable:362627

mapped:9406 shmem:4001 pagetables:15863 bounce:0

free:54456 free_pcp:1786 free_cma:0

Jan 02 15:47:51 rimuru kernel: Node 0 active_anon:25178124kB inactive_anon:2410192kB active_file:243420kB inactive_file:1143104kB unevictable:5320kB isolated(anon):12kB isolated(file):0kB mapped:37624kB dirty:114512kB writeback:1932kB shmem:16004kB shmem_thp: 0kB shmem_pmdmapped: 0kB anon_thp: 27262976kB writeback_tmp:0kB unstable:0kB all_unreclaimable? no

Jan 02 15:47:51 rimuru kernel: Node 0 DMA free:15732kB min:32kB low:44kB high:56kB active_anon:0kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB writepending:0kB present:15992kB managed:15896kB mlocked:0kB kernel_stack:0kB pagetables:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB

Jan 02 15:47:51 rimuru kernel: lowmem_reserve[]: 0 2622 31962 31962 31962

Jan 02 15:47:51 rimuru kernel: Node 0 DMA32 free:125420kB min:5540kB low:8224kB high:10908kB active_anon:51860kB inactive_anon:64840kB active_file:73944kB inactive_file:598836kB unevictable:0kB writepending:64124kB present:2793200kB managed:2726388kB mlocked:0kB kernel_stack:672kB pagetables:1404kB bounce:0kB free_pcp:4096kB local_pcp:0kB free_cma:0kB

Jan 02 15:47:51 rimuru kernel: lowmem_reserve[]: 0 0 29339 29339 29339

Jan 02 15:47:51 rimuru kernel: Node 0 Normal free:76672kB min:62008kB low:92052kB high:122096kB active_anon:25126264kB inactive_anon:2345352kB active_file:169828kB inactive_file:543400kB unevictable:5320kB writepending:52448kB present:30658048kB managed:30052052kB mlocked:5320kB kernel_stack:7280kB pagetables:62048kB bounce:0kB free_pcp:3020kB local_pcp:0kB free_cma:0kB

Jan 02 15:47:51 rimuru kernel: lowmem_reserve[]: 0 0 0 0 0

Jan 02 15:47:51 rimuru kernel: Node 0 DMA: 1*4kB (U) 2*8kB (U) 0*16kB 1*32kB (U) 1*64kB (U) 0*128kB 1*256kB (U) 0*512kB 1*1024kB (U) 1*2048kB (M) 3*4096kB (M) = 15732kB

Jan 02 15:47:51 rimuru kernel: Node 0 DMA32: 11730*4kB (UME) 1613*8kB (UE) 4112*16kB (UE) 0*32kB 0*64kB 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 125616kB

Jan 02 15:47:51 rimuru kernel: Node 0 Normal: 1802*4kB (UMH) 6191*8kB (UMH) 1180*16kB (UH) 9*32kB (H) 8*64kB (H) 6*128kB (H) 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 77184kB

Jan 02 15:47:51 rimuru kernel: Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=1048576kB

Jan 02 15:47:51 rimuru kernel: Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB

Jan 02 15:47:51 rimuru kernel: 371556 total pagecache pages

Jan 02 15:47:51 rimuru kernel: 19881 pages in swap cache

Jan 02 15:47:51 rimuru kernel: Swap cache stats: add 154337, delete 134453, find 115895/148861

Jan 02 15:47:51 rimuru kernel: Free swap = 3756796kB

Jan 02 15:47:51 rimuru kernel: Total swap = 4194300kB

Jan 02 15:47:51 rimuru kernel: 8366810 pages RAM

Jan 02 15:47:51 rimuru kernel: 0 pages HighMem/MovableOnly

Jan 02 15:47:51 rimuru kernel: 168226 pages reserved

Jan 02 15:47:51 rimuru kernel: 0 pages cma reserved

Jan 02 15:47:51 rimuru kernel: 0 pages hwpoisoned

Jan 02 15:47:51 rimuru kernel: Tasks state (memory values in pages):

Jan 02 15:47:51 rimuru kernel: [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name

Jan 02 15:47:51 rimuru kernel: [ 844] 0 844 7434 1601 98304 137 0 systemd-journal

Jan 02 15:47:51 rimuru kernel: [ 866] 0 866 5765 908 73728 8 -1000 systemd-udevd

Jan 02 15:47:51 rimuru kernel: [ 1210] 106 1210 1705 556 53248 0 0 rpcbind

Jan 02 15:47:51 rimuru kernel: [ 1213] 100 1213 23270 1115 81920 0 0 systemd-timesyn

Jan 02 15:47:51 rimuru kernel: [ 1214] 0 1214 21543 347 69632 0 0 pvefw-logger

Jan 02 15:47:51 rimuru kernel: [ 1241] 0 1241 3137 1078 61440 1 0 smartd

Jan 02 15:47:51 rimuru kernel: [ 1243] 0 1243 136554 443 114688 0 0 pve-lxc-syscall

Jan 02 15:47:51 rimuru kernel: [ 1247] 104 1247 2261 668 57344 0 -900 dbus-daemon

Jan 02 15:47:51 rimuru kernel: [ 1250] 0 1250 4880 1215 77824 0 0 systemd-logind

Jan 02 15:47:51 rimuru kernel: [ 1252] 0 1252 41689 755 86016 0 0 zed

Jan 02 15:47:51 rimuru kernel: [ 1256] 0 1256 21333 342 53248 0 0 lxcfs

Jan 02 15:47:51 rimuru kernel: [ 1260] 0 1260 56455 907 81920 7 0 rsyslogd

Jan 02 15:47:51 rimuru kernel: [ 1266] 0 1266 1022 34 49152 0 0 qmeventd

Jan 02 15:47:51 rimuru kernel: [ 1281] 0 1281 1681 537 61440 0 0 ksmtuned

Jan 02 15:47:51 rimuru kernel: [ 1410] 0 1410 954 380 45056 0 0 lxc-monitord

Jan 02 15:47:51 rimuru kernel: [ 1417] 0 1417 568 142 36864 0 0 none

Jan 02 15:47:51 rimuru kernel: [ 1420] 0 1420 1722 60 49152 0 0 iscsid

Jan 02 15:47:51 rimuru kernel: [ 1422] 0 1422 1848 1254 53248 0 -17 iscsid

Jan 02 15:47:51 rimuru kernel: [ 1425] 0 1425 3962 1064 65536 1 -1000 sshd

Jan 02 15:47:51 rimuru kernel: [ 1438] 0 1438 1402 420 45056 0 0 agetty

Jan 02 15:47:51 rimuru kernel: [ 1451] 0 1451 183190 739 184320 5 0 rrdcached

Jan 02 15:47:51 rimuru kernel: [ 1461] 0 1461 142882 5024 319488 1303 0 pmxcfs

Jan 02 15:47:51 rimuru kernel: [ 1470] 0 1470 2125 574 57344 0 0 cron

Jan 02 15:47:51 rimuru kernel: [ 1475] 0 1475 76185 7964 307200 13548 0 pve-firewall

Jan 02 15:47:51 rimuru kernel: [ 1476] 0 1476 75787 9103 307200 12334 0 pvestatd

Jan 02 15:47:51 rimuru kernel: [ 1500] 0 1500 88354 1546 417792 28415 0 pvedaemon

Jan 02 15:47:51 rimuru kernel: [ 1501] 0 1501 90480 7639 442368 24293 0 pvedaemon worke

Jan 02 15:47:51 rimuru kernel: [ 1502] 0 1502 90465 7645 442368 24303 0 pvedaemon worke

Jan 02 15:47:51 rimuru kernel: [ 1503] 0 1503 90513 8540 438272 23404 0 pvedaemon worke

Jan 02 15:47:51 rimuru kernel: [ 1509] 33 1509 88732 2285 409600 28350 0 pveproxy

Jan 02 15:47:51 rimuru kernel: [ 1516] 33 1516 17583 483 172032 12089 0 spiceproxy

Jan 02 15:47:51 rimuru kernel: [ 1517] 33 1517 17649 1179 172032 11593 0 spiceproxy work

Jan 02 15:47:51 rimuru kernel: [ 1616] 0 1616 3743704 3424439 29777920 0 0 kvm

Jan 02 15:47:51 rimuru kernel: [ 1753] 0 1753 3764267 3416877 29802496 0 0 kvm

Jan 02 15:47:51 rimuru kernel: [ 2297] 33 2297 90862 7961 434176 24566 0 pveproxy worker

Jan 02 15:47:51 rimuru kernel: [ 2093] 33 2093 90873 8268 434176 24296 0 pveproxy worker

Jan 02 15:47:51 rimuru kernel: [ 12804] 33 12804 90858 8459 434176 24049 0 pveproxy worker

Jan 02 15:47:51 rimuru kernel: [ 12498] 0 12498 1314 189 49152 0 0 sleep

Jan 02 15:47:51 rimuru kernel: oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0,global_oom,task_memcg=/qemu.slice/100.scope,task=kvm,pid=1616,uid=0

Jan 02 15:47:51 rimuru kernel: Out of memory: Killed process 1616 (kvm) total-vm:14974816kB, anon-rss:13693780kB, file-rss:3976kB, shmem-rss:0kB, UID:0 pgtables:29080kB oom_score_adj:0

Jan 02 15:47:51 rimuru kernel: oom_reaper: reaped process 1616 (kvm), now anon-rss:0kB, file-rss:68kB, shmem-rss:0kB

If I decrease both VMs to 8GB, it doesn't crash, but using half of the 32GB for the host seems silly. So I'm wondering if there's a way to tweak settings so that more of the RAM can go to the VMs. I've also tried 10GB per VM, and it eventually OOMs too.
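To make the numbers in the log easier to read, here's a rough sketch that converts the page counts to GiB and tallies the free-block list for the Normal zone. All values are copied from the log above. The 4 KiB page size is an assumption (it's the usual one on x86-64), and the helper names are just for illustration.

```python
import re

PAGE_KIB = 4  # assumption: 4 KiB pages


def pages_to_gib(pages: int) -> float:
    """Convert a page count from the OOM report to GiB."""
    return pages * PAGE_KIB / (1024 ** 2)


# rss column of the two kvm processes, keyed by pid
kvm_rss_pages = {1616: 3424439, 1753: 3416877}
ram_pages, reserved_pages = 8366810, 168226
slab_unreclaimable_pages = 362627

# "Node 0 Normal:" free-block line, without the trailing total
NORMAL_BUDDY = (
    "1802*4kB (UMH) 6191*8kB (UMH) 1180*16kB (UH) 9*32kB (H) 8*64kB (H) "
    "6*128kB (H) 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB"
)


def parse_buddy(line: str):
    """Return (count, block_kib, flags) for each entry in a free-block line."""
    pattern = r"(\d+)\*(\d+)kB(?: \((\w+)\))?"
    return [(int(c), int(s), f) for c, s, f in re.findall(pattern, line)]


blocks = parse_buddy(NORMAL_BUDDY)
free_kib = sum(count * size for count, size, _ in blocks)
# order=3 in the oom-killer line means 2**3 contiguous pages, i.e. 32 KiB here
big_enough = [(c, s, f) for c, s, f in blocks if s >= 32 and c > 0]

print(f"kvm rss total: {sum(map(pages_to_gib, kvm_rss_pages.values())):.2f} GiB")
print(f"usable RAM:    {pages_to_gib(ram_pages - reserved_pages):.2f} GiB")
print(f"unreclaimable slab: {pages_to_gib(slab_unreclaimable_pages):.2f} GiB")
print(f"Normal zone free: {free_kib} kB, blocks >= 32 kB: {big_enough}")
```

Reading it this way, the two kvm processes hold roughly 26 GiB of the roughly 31 GiB the kernel manages, and the only free blocks of 32 kB or larger in the Normal zone are the ones tagged (H). I believe that tag marks a reserved block type, but I'm not certain how much that matters for the order=3 allocation that triggered the kill.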