I have this same issue, however with a twist. Due to how the IOMMU groups are set up and how bad the motherboard is (I'm using a Gigabyte A520I), I need to override ACS: `pcie_acs_override=downstream,multifunction`

Hey,

thanks for your reply.

1. Yes, I just checked, and the ASM1166 is in fact always at PCI address 04:00.0 on kernels 6.8, 6.11 and 6.14.

2. Yes, the ASM1166 is always in its own IOMMU group on all of the aforementioned kernel versions.

Changing the kernel version seems to have no effect on points 1 and 2 in my setup.
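For anyone who wants to repeat these two checks on their own host, here is a minimal sketch in Python. It assumes the usual Linux sysfs layout, where each IOMMU group is a numbered directory under `/sys/kernel/iommu_groups` with a `devices` subdirectory. The helper names `iommu_groups` and `is_isolated` are made up for this example, and the full address `0000:04:00.0` assumes PCI domain `0000`.

```python
from pathlib import Path


def iommu_groups(sysfs_root="/sys/kernel/iommu_groups"):
    """Map each IOMMU group number to the sorted PCI addresses it contains."""
    groups = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        # IOMMU is disabled or the kernel does not expose the groups.
        return groups
    for group_dir in root.iterdir():
        devices = group_dir / "devices"
        if group_dir.name.isdigit() and devices.is_dir():
            groups[int(group_dir.name)] = sorted(p.name for p in devices.iterdir())
    return groups


def is_isolated(address, groups):
    """Return True if `address` is the only device in its IOMMU group."""
    return any(devs == [address] for devs in groups.values())


if __name__ == "__main__":
    groups = iommu_groups()
    for number in sorted(groups):
        print(number, " ".join(groups[number]))
    # Assumed address of the ASM1166 (PCI domain 0000).
    print("0000:04:00.0 isolated:", is_isolated("0000:04:00.0", groups))
```

Running it once per kernel and comparing the output should show whether the address or the grouping changes between versions.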

Despite this, with kernel 6.14 my TrueNAS VM, which has PCIe passthrough configured for the ASM1166 SATA controller, still cannot be started.

I can see this log message getting "spammed" to journalctl repeatedly:

`VM 101 qmp command failed - VM 101 qmp command 'query-proxmox-support' failed - unable to connect to VM 101 qmp socket - timeout after 51 retries`

As mentioned before, the setup works just fine on both kernel 6.8 and 6.11.

It appears that kernel 6.14 has issues with PCIe passthrough.

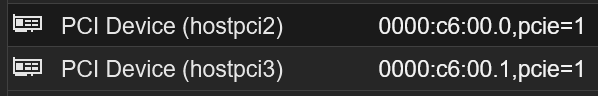

I am passing four devices to the VM: two SATA controllers, one NVMe drive and a VF (virtual function) network card.

LE: Turns out I needed to disable rombar on the SATA controllers.
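In Proxmox, passthrough devices are `hostpciN:` lines in the VM config (`/etc/pve/qemu-server/<vmid>.conf`), and the ROM BAR is switched off by adding `rombar=0` to the option string. Below is a rough sketch of that change as a small Python helper that only rewrites the option string. The name `disable_rombar`, the index `hostpci0`, the address and the `pcie=1` flag are all placeholders for illustration, not my actual config.

```python
def disable_rombar(hostpci_value):
    """Return a hostpci option string with rombar=0 set exactly once."""
    # Drop empty fragments and any existing rombar setting, then append rombar=0.
    parts = [p for p in hostpci_value.split(",") if p and not p.startswith("rombar=")]
    parts.append("rombar=0")
    return ",".join(parts)


if __name__ == "__main__":
    # Hypothetical entry; substitute the real hostpci line of each SATA controller.
    print("hostpci0: " + disable_rombar("0000:04:00.0,pcie=1"))
    # prints "hostpci0: 0000:04:00.0,pcie=1,rombar=0"
```

The same setting should also be reachable in the web UI by unticking "ROM-Bar" on the PCI device, which is probably the easier route for one or two devices.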
