This sounds very HW and/or setup specific; we saw no such thing on the HW in our test labs, and we even saw a tiny decrease of base-level IO load on one server, FWIW.

Whatever this kernel release is about, it is busted beyond belief.

CPU usage goes up, and IO delay and server load go through the roof.

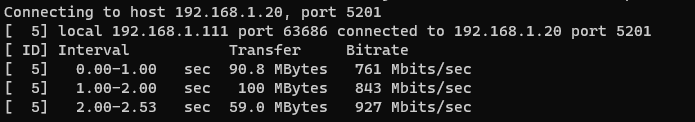

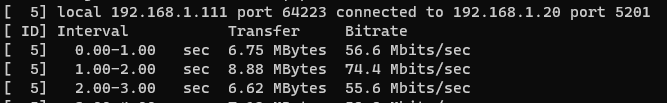

I cannot start Linux VMs (TASK ERROR: timeout waiting on systemd), and I cannot shut down either VMs or CTs through the PVE interface.

After pinning the previous kernel, I couldn't soft reboot the server and had to do a cold reset.

HP DL380p Gen9

Anything in the system log (journal)? More details like file system and so on would be good to have too.
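If it helps with collecting the journal details, here is a minimal sketch that pulls the kernel log of a given boot as JSON and keeps only error-level (or worse) messages. It assumes Python 3.7+ and `journalctl` on the host; the function names are hypothetical, and `"-1"` (the previous boot) is only a default, so pick the boot that actually ran the affected kernel from `journalctl --list-boots`.

```python
import json
import subprocess

# journald priorities: 0=emerg, 1=alert, 2=crit, 3=err, 4=warning ... 7=debug
MAX_PRIORITY = 3


def error_entries(json_lines, max_priority=MAX_PRIORITY):
    """Return MESSAGE strings of journal entries with PRIORITY <= max_priority.

    json_lines: iterable of lines as produced by `journalctl -o json`.
    """
    messages = []
    for line in json_lines:
        line = line.strip()
        if not line:
            continue
        entry = json.loads(line)
        prio = entry.get("PRIORITY")
        msg = entry.get("MESSAGE")
        # Skip entries without a priority, or whose MESSAGE is not plain
        # text (journald emits non-UTF-8 messages as a byte array).
        if prio is None or not isinstance(msg, str):
            continue
        if int(prio) <= max_priority:
            messages.append(msg)
    return messages


def collect_kernel_errors(boot="-1"):
    """Hypothetical helper: kernel-ring messages of one boot, errors only."""
    out = subprocess.run(
        ["journalctl", "-k", f"--boot={boot}", "-o", "json", "--no-pager"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return error_entries(out.splitlines())
```

Calling `for m in collect_kernel_errors("-1"): print(m)` prints the filtered messages; raising `max_priority` to 4 also includes warnings, which can matter for hung-task or IO-timeout reports.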

That server series is also from 2014 IIRC; at more than ten years old, problems with running the latest kernels might start to increase again. I'm not saying that has to be the case here, but such old systems are rarer to find in test labs. If it is a specific regression it might still be fixable, but in any case you should ensure you run the latest BIOS/firmware available for your system to rule out such generic issues.