Don't have one. Maybe ask t.lamprecht, who seems to have one.

Opt-in Linux 6.14 Kernel for Proxmox VE 8 available on test & no-subscription

- Thread starter t.lamprecht

DDR5 has built-in basic ECC, and the Intel N100 had an issue where memory error events were not correctly handled. This has been fixed with a commit that landed in Linux 6.13. So it can indeed happen that 6.14 shows these messages while older kernels do not.

This does not necessarily mean that the HW must be broken; the reporting of it could be broken too. Some vendors sell Intel N100 based systems with memory already installed, and that memory is often no-name cheap stuff that might work fine but could have other issues. There really could also be a problem with your HW, e.g. the memory DIMMs, the motherboard or the CPU. If that's the case, disabling the EDAC module only silences the messenger and the symptoms and does not really fix anything.

FWIW, I have an Intel N100 based system running PVE, and it does not show these errors when booting our 6.14 kernel. I bought the memory separately from a reputable vendor, so at least it's not a general issue with Intel N100 based systems.

Not sure if there is a difference between POLLED and INTERRUPT when the igen6_edac is loaded, but I noticed that was a difference between 6.8 and 6.14 kernels.

Yeah, polling is exactly what the patch I linked implements. From the commit message:

Some PCs with Intel N100 (with PCI device 8086:461c, DID_ADL_N_SKU4) experienced issues with error interrupts not working, [...].

Add polling mode support for these machines to ensure that memory error events are handled.

Again, I'd not be surprised if it's an issue in the firmware of your board; those are often done rather sloppily for cheaper motherboards. Adding the polling mode seems to be a workaround for something like that too.

Switched to the 6.14 kernel and found an issue with the Realtek 8125 NIC: iperf3 reports 2.35 Gbits/s in one direction, but only 2.24 Gbits/s in the reverse direction.

```
# iperf3 -c router.home
Connecting to host router.home, port 5201
[ 5] local 192.168.64.2 port 51652 connected to 192.168.64.1 port 5201
[ ID] Interval Transfer Bitrate Retr Cwnd
[ 5] 0.00-1.00 sec 282 MBytes 2.37 Gbits/sec 0 749 KBytes
[ 5] 1.00-2.00 sec 281 MBytes 2.35 Gbits/sec 44 837 KBytes
[ 5] 2.00-3.00 sec 281 MBytes 2.35 Gbits/sec 0 837 KBytes
[ 5] 3.00-4.00 sec 281 MBytes 2.36 Gbits/sec 0 837 KBytes
[ 5] 4.00-5.00 sec 281 MBytes 2.35 Gbits/sec 0 837 KBytes
[ 5] 5.00-6.00 sec 280 MBytes 2.35 Gbits/sec 0 837 KBytes
[ 5] 6.00-7.00 sec 281 MBytes 2.35 Gbits/sec 0 837 KBytes
[ 5] 7.00-8.00 sec 281 MBytes 2.36 Gbits/sec 0 837 KBytes
[ 5] 8.00-9.00 sec 281 MBytes 2.36 Gbits/sec 0 837 KBytes
[ 5] 9.00-10.00 sec 280 MBytes 2.35 Gbits/sec 0 837 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 2.74 GBytes 2.36 Gbits/sec 44 sender
[ 5] 0.00-10.00 sec 2.00 GBytes 1.72 Gbits/sec receiver
iperf Done.
# iperf3 -c router.home -R
Connecting to host router.home, port 5201
Reverse mode, remote host router.home is sending
[ 5] local 192.168.64.2 port 39748 connected to 192.168.64.1 port 5201
[ ID] Interval Transfer Bitrate
[ 5] 0.00-1.00 sec 268 MBytes 2.24 Gbits/sec
[ 5] 1.00-2.00 sec 267 MBytes 2.24 Gbits/sec
[ 5] 2.00-3.00 sec 267 MBytes 2.24 Gbits/sec
[ 5] 3.00-4.00 sec 267 MBytes 2.24 Gbits/sec
[ 5] 4.00-5.00 sec 267 MBytes 2.24 Gbits/sec
[ 5] 5.00-6.00 sec 267 MBytes 2.24 Gbits/sec
[ 5] 6.00-7.00 sec 267 MBytes 2.24 Gbits/sec
[ 5] 7.00-8.00 sec 267 MBytes 2.24 Gbits/sec
[ 5] 8.00-9.00 sec 267 MBytes 2.24 Gbits/sec
[ 5] 9.00-10.00 sec 267 MBytes 2.24 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 2.00 GBytes 1.72 Gbits/sec 0 sender
[ 5] 0.00-10.00 sec 2.61 GBytes 2.24 Gbits/sec receiver
iperf Done.
```

A margin of 4.68%! Not sure if that is indicative of anything.
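The 4.68% is the gap relative to the faster direction: (2.35 - 2.24) / 2.35 ≈ 0.0468. Below is a minimal sketch for reproducing such numbers and for scripting both directions of a test. It assumes a POSIX shell with `awk`. The optional helper also needs `jq` and an iperf3 whose JSON output (`-J`) contains `end.sum_received.bits_per_second` for TCP runs, reporting the receiver side in both normal and `-R` mode; please verify that against your iperf3 version's output. The function names `pct_gap` and `iperf_rx_bps` are made up for illustration.

```shell
#!/bin/sh
# pct_gap A B: relative gap between two bitrates, as a percentage of the
# larger one, printed with two decimals. Both values must use the same unit.
pct_gap() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        a += 0; b += 0              # force numeric comparison
        hi = (a > b) ? a : b
        lo = (a > b) ? b : a
        printf "%.2f\n", (hi - lo) / hi * 100
    }'
}

# iperf_rx_bps HOST [IPERF3 OPTIONS...]: hypothetical helper that runs one
# TCP test against HOST (an iperf3 server must be listening there) and prints
# the receiver-side bitrate in bits/s, as read from iperf3's JSON output.
iperf_rx_bps() {
    iperf3 -J -c "$@" | jq '.end.sum_received.bits_per_second'
}
```

For example, `pct_gap 2.35 2.24` prints `4.68`. To script a test, run `fwd=$(iperf_rx_bps router.home)` and `rev=$(iperf_rx_bps router.home -R)`, then `pct_gap "$fwd" "$rev"`. Taking the receiver-side figure in both directions avoids mixing sender and receiver numbers.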

On the 6.11 kernel, the bitrate is symmetric:

```
# iperf3 -c router.home
Connecting to host router.home, port 5201
[ 5] local 192.168.64.2 port 42146 connected to 192.168.64.1 port 5201
[ ID] Interval Transfer Bitrate Retr Cwnd
[ 5] 0.00-1.00 sec 282 MBytes 2.36 Gbits/sec 88 730 KBytes
[ 5] 1.00-2.00 sec 280 MBytes 2.35 Gbits/sec 0 747 KBytes
[ 5] 2.00-3.00 sec 281 MBytes 2.35 Gbits/sec 0 747 KBytes
[ 5] 3.00-4.00 sec 281 MBytes 2.36 Gbits/sec 18 748 KBytes
[ 5] 4.00-5.00 sec 280 MBytes 2.35 Gbits/sec 0 748 KBytes
[ 5] 5.00-6.00 sec 281 MBytes 2.36 Gbits/sec 0 748 KBytes
[ 5] 6.00-7.00 sec 280 MBytes 2.35 Gbits/sec 0 768 KBytes
[ 5] 7.00-8.00 sec 281 MBytes 2.35 Gbits/sec 0 793 KBytes
[ 5] 8.00-9.00 sec 281 MBytes 2.36 Gbits/sec 0 793 KBytes
[ 5] 9.00-10.00 sec 280 MBytes 2.35 Gbits/sec 0 874 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 2.74 GBytes 2.35 Gbits/sec 106 sender
[ 5] 0.00-10.00 sec 2.00 GBytes 1.72 Gbits/sec receiver
iperf Done.
# iperf3 -c router.home -R
Connecting to host router.home, port 5201
Reverse mode, remote host router.home is sending
[ 5] local 192.168.64.2 port 39790 connected to 192.168.64.1 port 5201
[ ID] Interval Transfer Bitrate
[ 5] 0.00-1.00 sec 280 MBytes 2.35 Gbits/sec
[ 5] 1.00-2.00 sec 281 MBytes 2.35 Gbits/sec
[ 5] 2.00-3.00 sec 280 MBytes 2.35 Gbits/sec
[ 5] 3.00-4.00 sec 280 MBytes 2.35 Gbits/sec
[ 5] 4.00-5.00 sec 281 MBytes 2.35 Gbits/sec
[ 5] 5.00-6.00 sec 281 MBytes 2.35 Gbits/sec
[ 5] 6.00-7.00 sec 280 MBytes 2.35 Gbits/sec
[ 5] 7.00-8.00 sec 280 MBytes 2.35 Gbits/sec
[ 5] 8.00-9.00 sec 281 MBytes 2.35 Gbits/sec
[ 5] 9.00-10.00 sec 280 MBytes 2.35 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 2.00 GBytes 1.72 Gbits/sec 0 sender
[ 5] 0.00-10.00 sec 2.74 GBytes 2.35 Gbits/sec receiver
iperf Done.
```

Update: Probably this has nothing to do with the R8125. Previously I tested throughput between my PC and a VM (the VM sending via a VirtIO device), but throughput between the PC and the Proxmox host itself is OK:

```
# iperf3 -c proxmox.home -R
Connecting to host proxmox.home, port 5201
Reverse mode, remote host proxmox.home is sending
[ 5] local 192.168.64.2 port 57700 connected to 192.168.64.100 port 5201
[ ID] Interval Transfer Bitrate
[ 5] 0.00-1.00 sec 281 MBytes 2.35 Gbits/sec
[ 5] 1.00-2.00 sec 281 MBytes 2.35 Gbits/sec
[ 5] 2.00-3.00 sec 281 MBytes 2.35 Gbits/sec
[ 5] 3.00-4.00 sec 281 MBytes 2.35 Gbits/sec
[ 5] 4.00-5.00 sec 280 MBytes 2.35 Gbits/sec
[ 5] 5.00-6.00 sec 280 MBytes 2.35 Gbits/sec
[ 5] 6.00-7.00 sec 281 MBytes 2.35 Gbits/sec
[ 5] 7.00-8.00 sec 281 MBytes 2.35 Gbits/sec
[ 5] 8.00-9.00 sec 280 MBytes 2.35 Gbits/sec
[ 5] 9.00-10.00 sec 280 MBytes 2.35 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 2.00 GBytes 1.72 Gbits/sec 0 sender
[ 5] 0.00-10.00 sec 2.74 GBytes 2.35 Gbits/sec receiver
iperf Done.
```

On kernel Linux 6.8.12-9-pve (2025-03-16T19:18Z), this would be "unsymmetric" in your books (not mine!):

```
root@WRKSPC-DEBIAN:~# iperf3 -c 192.168.1.205 -R
Connecting to host 192.168.1.205, port 5201
Reverse mode, remote host 192.168.1.205 is sending
[ 5] local 192.168.1.204 port 52986 connected to 192.168.1.205 port 5201
[ ID] Interval Transfer Bitrate
[ 5] 0.00-1.00 sec 9.03 GBytes 77.6 Gbits/sec
[ 5] 1.00-2.00 sec 9.21 GBytes 79.2 Gbits/sec
[ 5] 2.00-3.00 sec 9.52 GBytes 81.7 Gbits/sec
[ 5] 3.00-4.00 sec 9.54 GBytes 81.9 Gbits/sec
[ 5] 4.00-5.00 sec 9.49 GBytes 81.5 Gbits/sec
[ 5] 5.00-6.00 sec 9.51 GBytes 81.7 Gbits/sec
[ 5] 6.00-7.00 sec 9.54 GBytes 82.0 Gbits/sec
[ 5] 7.00-8.00 sec 9.54 GBytes 81.9 Gbits/sec
[ 5] 8.00-9.00 sec 9.12 GBytes 78.3 Gbits/sec
[ 5] 9.00-10.00 sec 9.23 GBytes 79.3 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 93.7 GBytes 80.5 Gbits/sec 0 sender
[ 5] 0.00-10.00 sec 93.7 GBytes 80.5 Gbits/sec receiver
iperf Done.
root@WRKSPC-DEBIAN:~# iperf3 -c 192.168.1.205
Connecting to host 192.168.1.205, port 5201
[ 5] local 192.168.1.204 port 47862 connected to 192.168.1.205 port 5201
[ ID] Interval Transfer Bitrate Retr Cwnd
[ 5] 0.00-1.00 sec 9.60 GBytes 82.5 Gbits/sec 0 462 KBytes
[ 5] 1.00-2.00 sec 9.59 GBytes 82.4 Gbits/sec 0 462 KBytes
[ 5] 2.00-3.00 sec 9.59 GBytes 82.4 Gbits/sec 0 462 KBytes
[ 5] 3.00-4.00 sec 9.59 GBytes 82.4 Gbits/sec 0 462 KBytes
[ 5] 4.00-5.00 sec 9.57 GBytes 82.2 Gbits/sec 0 650 KBytes
[ 5] 5.00-6.00 sec 9.59 GBytes 82.4 Gbits/sec 0 650 KBytes
[ 5] 6.00-7.00 sec 9.55 GBytes 82.1 Gbits/sec 0 650 KBytes
[ 5] 7.00-8.00 sec 9.59 GBytes 82.4 Gbits/sec 0 683 KBytes
[ 5] 8.00-9.00 sec 9.57 GBytes 82.2 Gbits/sec 0 683 KBytes
[ 5] 9.00-10.00 sec 9.58 GBytes 82.3 Gbits/sec 0 683 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.00 sec 95.8 GBytes 82.3 Gbits/sec 0 sender
[ 5] 0.00-10.00 sec 95.8 GBytes 82.3 Gbits/sec receiver
iperf Done.
```

So a deviation of (a whopping!) 2.19%, nothing to write home about! I notice a lot of Retr on your end (on both kernels), probably network activity etc.

1. My network is practically idle, but the difference in throughput is about 110 Mbits/s.

2. My results are repeatable. I measure 2.35 Gbits/s in both directions on kernel 6.11, then reboot into 6.14 and measure 2.35 Gbits/s in one direction and 2.24 Gbits/s in the other.

3. This has nothing to do with retranslations, because:

a) in the submitted results, retranslations are shown only for the forward direction, which still reaches 2.35-2.36 Gbits/s regardless of them;

b) I ran the same test from the opposite side (VM as client, PC as server, VM sending) and saw zero retranslations, but still only 2.24-2.25 Gbits/s.

4. I think we should not compare a 2.5G network with a 100G one in terms of deviation. On a 100G network, even the CPU or PCIe might become the bottleneck.

AFAIK, Retr stands for Retransmitted, as in retransmitted packets.

My tests were done CT to CT on the same Proxmox host. Maybe try that in your tests and compare results.

6.14.0-1-pve is reporting some kind of ECC errors (I'm not running ECC memory with the N100, lol). My dmesg is completely filled with these errors:

```
# dmesg | grep igen6
[ 3.775370] caller igen6_probe+0x1bc/0x8e0 [igen6_edac] mapping multiple BARs
[ 3.775412] EDAC MC0: Giving out device to module igen6_edac controller Intel_client_SoC MC#0: DEV 0000:00:00.0 (POLLED)
[ 3.775430] EDAC igen6 MC0: HANDLING IBECC MEMORY ERROR
[ 3.775431] EDAC igen6 MC0: ADDR 0x7fffffffe0
[ 3.775469] EDAC igen6: v2.5.1
[ 4.824379] EDAC igen6 MC0: HANDLING IBECC MEMORY ERROR
[ 4.824382] EDAC igen6 MC0: ADDR 0x7fffffffe0
[ 5.852327] EDAC igen6 MC0: HANDLING IBECC MEMORY ERROR
[ 5.852335] EDAC igen6 MC0: ADDR 0x7fffffffe0
[ 6.872532] EDAC igen6 MC0: HANDLING IBECC MEMORY ERROR
[ 6.872536] EDAC igen6 MC0: ADDR 0x7fffffffe0
[ 7.896351] EDAC igen6 MC0: HANDLING IBECC MEMORY ERROR
[ 7.896355] EDAC igen6 MC0: ADDR 0x7fffffffe0
[ 8.920317] EDAC igen6 MC0: HANDLING IBECC MEMORY ERROR
[ 8.920320] EDAC igen6 MC0: ADDR 0x7fffffffe0
```

I've blacklisted the igen6_edac module, but with this 6.14 kernel my idle CPU frequency is still higher than with 6.8, so I've reverted.

Not sure about anyone else, but when running 6.8.12-9-pve, the igen6_edac module also loads, but the only dmesg entries are these:

```
# dmesg | grep igen6
[ 3.455293] caller igen6_probe+0x193/0x8b0 [igen6_edac] mapping multiple BARs
[ 3.455337] EDAC MC0: Giving out device to module igen6_edac controller Intel_client_SoC MC#0: DEV 0000:00:00.0 (INTERRUPT)
[ 3.455389] EDAC igen6 MC0: HANDLING IBECC MEMORY ERROR
[ 3.455391] EDAC igen6 MC0: ADDR 0x7fffffffe0
[ 3.455431] EDAC igen6: v2.5.1
```
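To compare kernels on your own box, you can pull two things out of the kernel log: the mode igen6_edac registered with, and the number of IBECC reports. Here is a small sketch. It assumes the message wording seen in the two dmesg outputs in this thread, which may differ on other kernel versions. The function names are hypothetical.

```shell
#!/bin/sh
# edac_mode: read kernel log text on stdin and print the reporting mode
# igen6_edac registered with, e.g. POLLED (6.14 log above) or INTERRUPT (6.8 log).
edac_mode() {
    sed -n 's/.*module igen6_edac controller.*(\([A-Z]*\)).*/\1/p'
}

# ibecc_error_count: read kernel log text on stdin and print how many
# "HANDLING IBECC MEMORY ERROR" lines it contains.
ibecc_error_count() {
    grep -c 'HANDLING IBECC MEMORY ERROR'
}
```

Run them as `dmesg | edac_mode` and `dmesg | ibecc_error_count`. On an affected 6.14 system, the count keeps growing (roughly once per second in the log posted above). On 6.8 it stays at one.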

Same problem with a GMKtec N150. That constant flood of messages can't be good for the SSD so I've reverted back to 6.11.

Thanks for the pointer. So it really seems to be an issue with some specific N-series CPU/motherboard combos that can luckily be worked around in the kernel.

I checked and saw that the PoC patch was sent as a proper patch and the maintainer accepted it. So I cherry-picked it for our next 6.14 build (a few weeks at most). Until then, disable EDAC as a workaround if you run an affected system and still really want to run 6.14.
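One common way to disable EDAC as a workaround is a modprobe blacklist entry. Here is a minimal sketch, assuming the module name igen6_edac from the dmesg output in this thread. The helper name and the file name it writes (`blacklist-<module>.conf`) are hypothetical. The target directory is a parameter only so the function can be tried out safely. Note that `blacklist` only stops automatic loading (e.g. via the device's PCI alias); an explicit `modprobe igen6_edac` still loads the module.

```shell
#!/bin/sh
# blacklist_module MODULE [DIR]: write a modprobe.d snippet that prevents
# MODULE from being auto-loaded. DIR defaults to /etc/modprobe.d.
blacklist_module() {
    mod="$1"
    dir="${2:-/etc/modprobe.d}"
    # modprobe reads the *.conf files in this directory
    printf 'blacklist %s\n' "$mod" > "$dir/blacklist-$mod.conf"
}
```

As root, run `blacklist_module igen6_edac`. Then regenerate the initramfs in case the module is included there (`update-initramfs -u -k all`) and reboot. Alternatively, `modprobe.blacklist=igen6_edac` on the kernel command line achieves the same without touching `/etc/modprobe.d`. Remove the file again once a kernel with the polling fix is installed.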

Oh, of course, I meant retransmissions.

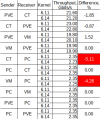

OK, I performed additional tests and wrote results in the table:My tests were done CT to CT on same Proxmox host. Maybe try that in your tests & compare results.

It looks to me like the tests inside Proxmox are not representative, and the difference is within the margin of error. The bottleneck here is probably the CPU (AMD Ryzen 1700 in my case), so I don't really care.

But the tests with the PC are not good. 2.5G (2.35G with MTU 1500) should never be a problem, yet we can clearly see that upgrading the kernel results in a ~4-5% loss in throughput. Importantly, the test between PVE and the PC shows no losses, so maybe this has something to do with changes in the Linux bridge?

https://github.com/GreenDamTan/vgpu_unlock-rs/commit/a651cf35c0097426c369c2783c749b2ed28bce39

This works perfectly, thank you. Great work as always.

For reference, I applied the patch to the driver .run file, installed it, installed 6.14 and rebooted. The modules built fine and all is well.

Also, if you don't mind me asking: I noticed you have a Pascal on 17.5? Is there a trick to that? I replaced my vgpuConfig.xml file, which works fine to make vGPU function, but Linux VMs report a driver mismatch on any driver version (and they do not work), and Windows will still only use 535. Just wondering if I am doing something wrong or missing something (it seems like I am; I could never figure out this, or getting LXCs to use the GPU properly with the KVM driver, haha).

Try using the A5500 device ID for the Pascal card.

I am currently considering replacing my Realtek 2.5G 8125BG with an Intel 10G X550 network card, as I would like to use the supplied drivers.

Does anyone know if the Realtek 8125BG is fully supported in kernel versions 6.11 and 6.14? If so, is it through the r8169 driver or a special one for the 8125BG chip?

The issue with Realtek NICs is that their individual models actually represent quite a large array of slightly different NICs, which eventually gain support in the mainline r8169 driver. For example, on some systems I've seen, a Realtek 8125 from Q2 2024 is supported by r8169 since kernel 6.2, while one from Q4 2024 only got support in kernel 6.14.

We ship the `r8125-dkms` package (repackaged from Debian) but currently I don't think it supports any NICs that are not already supported by the 6.14 opt-in kernel.
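To see which driver a given port is actually bound to (r8169 from the kernel or r8125 from the DKMS package), you can read the driver symlink in sysfs. Here is a minimal sketch. The interface name is whatever `ip link` shows on your host (`enp1s0` is only an example). The optional sysfs-root argument exists only so the function can be exercised without real hardware. The helper name is hypothetical. `ethtool -i <iface>` reports the same information in its `driver:` line.

```shell
#!/bin/sh
# nic_driver IFACE [SYSFS_ROOT]: print the kernel driver bound to IFACE by
# resolving /sys/class/net/IFACE/device/driver (a symlink to the driver dir).
nic_driver() {
    iface="$1"
    root="${2:-/sys}"
    link="$root/class/net/$iface/device/driver"
    if [ ! -L "$link" ]; then
        echo "nic_driver: no driver link for $iface" >&2
        return 1
    fi
    basename "$(readlink "$link")"
}
```

For example, `nic_driver enp1s0` prints the driver name, such as `r8169`, for that port.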

That being said, once they are supported by the mainline driver, there are not many reports about issues, so they generally work reasonably well.

I hope this helps!

Thanks Stoiko!

I'm still not really sure what to expect. I used the r8169 driver with kernel 6.8 on PVE 8.2 and had issues with failover in a bond.

I think I will continue to install the driver from the Realtek site via DKMS. If the driver is still there after a kernel update, I will stay with the Realtek card; otherwise I will switch.
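After a kernel update, you can check whether DKMS actually rebuilt the out-of-tree module for the running kernel by filtering `dkms status`. Here is a sketch. It assumes the module is registered under the name r8125 (as with the `r8125-dkms` package mentioned in this thread). It also assumes the `module/version, kernel, arch: installed` line format of current DKMS releases. Older releases print `module, version, kernel, arch: installed`, which the function also accepts. Check your own `dkms status` output before relying on it. The function name is hypothetical.

```shell
#!/bin/sh
# dkms_installed_for MODULE KERNEL: read `dkms status` output on stdin and
# succeed only if MODULE is reported as "installed" for exactly KERNEL.
dkms_installed_for() {
    awk -v m="$1" -v k="$2" '
        (index($0, m "/") == 1 || index($0, m ",") == 1) &&
        index($0, ", " k ",") && /: installed/ { found = 1 }
        END { exit !found }'
}
```

For example, `dkms status | dkms_installed_for r8125 "$(uname -r)" && echo "r8125 is built for the running kernel"`.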


I have an EPYC Genoa (EPYC 9554) system that won't boot with the 6.14 kernel. The system is only a few months old and has run without issue on both the 6.8 and 6.11 kernels. Looking through the `journalctl -b` logs after a failed boot, I see this:

```
Apr 05 00:03:06 proxmox-host kernel: BERT: Error records from previous boot:
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: event severity: fatal
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Error 0, type: fatal
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: fru_text: ProcessorError
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: section_type: IA32/X64 processor error
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Local APIC_ID: 0x1c
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: CPUID Info:
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: 00000000: 00a10f11 00000000 1c800800 00000000
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: 00000010: 76fa320b 00000000 178bfbff 00000000
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: 00000020: 00000000 00000000 00000000 00000000
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Error Information Structure 0:
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Error Structure Type: cache error
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Check Information: 0x000000000602001f
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Transaction Type: 2, Generic
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Operation: 0, generic error
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Level: 0
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Processor Context Corrupt: true
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Uncorrected: true
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Context Information Structure 0:
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Register Context Type: MSR Registers (Machine Check and other MSRs)
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Register Array Size: 0x0050
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: MSR Address: 0xc0002051
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Context Information Structure 1:
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Register Context Type: Unclassified Data
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Register Array Size: 0x0030
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: Register Array:
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: 00000000: 00000010 00000000 1c3010c0 fffffffe
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: 00000010: 00000011 00000000 cb300024 00000000
Apr 05 00:03:06 proxmox-host kernel: [Hardware Error]: 00000020: 00000017 00000000 cb300024 00000000
Apr 05 00:03:06 proxmox-host kernel: BERT: Total records found: 1
Apr 05 00:03:06 proxmox-host kernel: mce: [Hardware Error]: Machine check events logged
Apr 05 00:03:06 proxmox-host kernel: PM: Magic number: 5:744:8
Apr 05 00:03:06 proxmox-host kernel: mce: [Hardware Error]: CPU 54: Machine Check: 0 Bank 5: aea0000000000108
Apr 05 00:03:06 proxmox-host kernel: clockevents clockevent82: hash matches
Apr 05 00:03:06 proxmox-host kernel: mce: [Hardware Error]: TSC 0 ADDR 1ffffffc04dc0ee MISC d0140ff600000000 PPIN 2b0c17e59888012 SYND 4d000000 IPID 500>
Apr 05 00:03:06 proxmox-host kernel: memory memory52: hash matches
Apr 05 00:03:06 proxmox-host kernel: mce: [Hardware Error]: PROCESSOR 2:a10f11 TIME 1743825773 SOCKET 0 APIC 1c microcode a101148
Apr 05 00:03:06 proxmox-host kernel: RAS: Correctable Errors collector initialized.
```

I'm skeptical that this is a hardware issue with the CPU. After I saw these errors, I ran stress-ng on all cores for 6+ hours while booted on the 6.11 kernel, without any problems. Here are some additional details about my system that may be relevant:

- Proxmox VE 8.3.5
- Mirrored ZFS root on 2x SAMSUNG MZ7LH960
- CPU: AMD EPYC 9554
- Motherboard: ASRock Rack GENOAD8X-2T/BCM
- Memory: 8x 64GB MICRON DDR5 RDIMM
- PCIe: LSI 9300-8I SAS3008, 2x RTX 4090

Hi guys, we have the same issue with the AMD EPYC 9754...

I can confirm, same on AMD EPYC 9124