Blacklisting ahci would get you into trouble (since all SATA controllers need it), but softdep should do the trick. Something like `softdep ahci pre: vfio-pci` (added to `/etc/modprobe.d/vfio.conf`, followed by running `update-initramfs -u`) will simply load vfio-pci just before ahci loads. Check with `lspci -nnk` after a fresh reboot, without starting the VM, to make sure the driver in use is vfio-pci.
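Roughly, those steps could look like the sketch below. It assumes a Debian-based host (where `update-initramfs` is available) and that vfio-pci is already configured to claim the controller in question, which is not shown here.

```
# /etc/modprobe.d/vfio.conf
# Make sure vfio-pci is loaded before ahci
softdep ahci pre: vfio-pci
```

```shell
# Rebuild the initramfs so the new modprobe config is picked up (run as root), then reboot
update-initramfs -u

# After the reboot and before starting the VM, list PCI devices with their kernel drivers;
# the controller to be passed through should show "Kernel driver in use: vfio-pci"
lspci -nnk
```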

PS: Feel free to make a separate thread about this if you run into issues and need help.

This worked out like a charm. Thank you very much!

Unbelievable how often I did read the

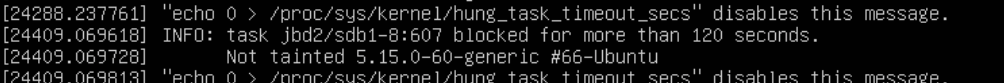

softdep-option in your posts and never came to the idea, that I should use it in my own setup; stupid me. Hopefully the stack trace came either from this, maybe in combination with changes in that kernel-version or that it at least was only a one-time thing.