OPNsense VM Config

- Thread starter spetrillo

Are there other things in the same IOMMU group? https://pve.proxmox.com/wiki/PCI_Passthrough

The lockups typically happen when you pass through something that the host kernel depends on (e.g. storage). On some systems (typically low-end home/gaming stuff), multiple things may be on the same bus, so passing through the entire bus (which is the default) gives all of those devices to the VM.
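To see what shares a group with the card, something like the following can help. It is only a sketch: it assumes a Linux host that exposes `/sys/kernel/iommu_groups`, and the function names `iommu_groups` and `group_of` are made up for this example.

```python
from pathlib import Path


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Return {group_number: [pci_address, ...]} as read from sysfs."""
    groups = {}
    base = Path(root)
    if not base.is_dir():
        # No such directory: IOMMU is disabled or this is not a Linux host.
        return groups
    for group_dir in base.iterdir():
        devices_dir = group_dir / "devices"
        if group_dir.name.isdigit() and devices_dir.is_dir():
            groups[int(group_dir.name)] = sorted(
                entry.name for entry in devices_dir.iterdir()
            )
    return groups


def group_of(pci_address, groups):
    """Return (group_number, other_devices_in_group), or None if not found."""
    for number, devices in groups.items():
        if pci_address in devices:
            return number, [d for d in devices if d != pci_address]
    return None


if __name__ == "__main__":
    for number, devices in sorted(iommu_groups().items()):
        print(f"group {number}: {' '.join(devices)}")
```

If `group_of` returns a non-empty list of other devices for the NIC, those devices go to the VM along with it.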

So here is the information about my setup. This is a Lenovo M720q Tiny PC with a PCIe slot inside. I have added an Intel I350 4-port PCIe network adapter. I am booting in UEFI mode, so Proxmox uses systemd-boot as its boot loader.

I have added the needed IOMMU config to the systemd-boot kernel command line. I have blacklisted the igb driver for the Intel adapter and added the Intel card to the VFIO list. As far as I can tell the Intel card is in its own IOMMU group; the only other thing in that group is the PCIe controller, which I believe should be there?
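One way to confirm that the blacklist and VFIO settings actually took effect after a reboot is to look at which driver each port is bound to. A minimal sketch, assuming the usual Linux sysfs layout where `/sys/bus/pci/devices/<address>/driver` is a symlink to the bound driver; the helper name `bound_driver` and the address `0000:01:00.0` are placeholders, not values taken from this thread.

```python
import os


def bound_driver(pci_address, sysfs_root="/sys/bus/pci/devices"):
    """Return the name of the driver bound to a PCI device, or None if unbound."""
    link = os.path.join(sysfs_root, pci_address, "driver")
    if not os.path.islink(link):
        # No driver symlink: nothing has claimed the device.
        return None
    # The symlink target ends in the driver name, e.g. .../drivers/vfio-pci
    return os.path.basename(os.readlink(link))


if __name__ == "__main__":
    # Placeholder address: substitute the one lspci reports for your card.
    print(bound_driver("0000:01:00.0"))
```

For passthrough each port of the card should report `vfio-pci`; if it reports `igb`, the host driver still owns it.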

I attached several files for review. I honestly do not know why this is not working.

Attachments

Your actual PCIe controller (which I believe is part of the CPU on those systems) is also in the same IOMMU group (2), so basically you're passing through part of your CPU.

Does your BIOS allow you to enable anything related to ACS, VT-d or IOMMU, or can you try another slot? If not, adding something like pcie_acs_override=downstream,multifunction to the kernel command line may help (there are other ACS options there that vary from platform to platform).
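For anyone scripting this: on a systemd-boot Proxmox install the kernel command line is a single line in `/etc/kernel/cmdline`, applied with `proxmox-boot-tool refresh` and a reboot. The sketch below only does the string handling (append parameters that are not already there); the function name `add_kernel_params` and the example starting line are illustrative, and reading or writing the real file is left out on purpose.

```python
def add_kernel_params(cmdline, params):
    """Append each parameter whose key is not already on the command line."""
    tokens = cmdline.split()
    # Compare on the part before the first "=" so an existing
    # "intel_iommu=..." is left alone rather than duplicated.
    present = {token.split("=", 1)[0] for token in tokens}
    for param in params:
        key = param.split("=", 1)[0]
        if key not in present:
            tokens.append(param)
            present.add(key)
    return " ".join(tokens)


if __name__ == "__main__":
    # Example starting line only; use the contents of your own cmdline file.
    current = "root=ZFS=rpool/ROOT/pve-1 boot=zfs"
    wanted = ["intel_iommu=on", "pcie_acs_override=downstream,multifunction"]
    print(add_kernel_params(current, wanted))
```

Keep in mind that the ACS override only changes how the kernel groups devices; it does not add real isolation between them.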

OK, so I went into the BIOS and took a couple of pictures of the relevant config information. Is there anything in the pics that I should be changing? I do not have an ACS option, but I definitely enabled VT-d and the other related config options.

Attachments

I am testing a few things:

1) I have a 2-port I350 network adapter. I want to see if a different card makes any difference.

2) I noticed the PCIe riser bracket I bought was not a genuine Lenovo bracket. I just bought one and it will be here tomorrow.

Stay tuned...

So I made a ton of progress today...

I tested with a 2-port I350 network adapter, so it was just LAN and WAN. I set up my config to pass through the entire card. I then created the OPNsense VM and added the network card as one PCI device. I then rebooted and watched as my VM booted and let me configure the networking. Right after that point is where the VM/PVE server used to hang, but not this time. It fully configured! I was surprised!

I have attached my config changes, for those who might be having issues. Since I am using ZFS my boot manager is systemd-boot, but GRUB should be able to handle the same config. I am going back to my 4-port I350 card and going to get the PVE server fully built. This is freaking cool, now that I have a config that works. If I get this going on the 4-port card I am going to take the final step and try out... wait for it... SR-IOV. My I350 card fully supports SR-IOV, and if I can get that going then I have the best of all worlds.

Stay tuned...

PS - If anyone uses the attached files, please remember that the ethernet and vfio files need to have .conf at the end. The cmdline and modules files do not have a suffix. Any questions, please PM me directly. Just remember my use case is a Lenovo M720q Tiny with an Intel I350 4-port network card.

Attachments

So more testing has been done...

I installed a 4 port I350 and built the new PVE server. When I configure the VM, with no network cables attached, I am able to get the whole network card passed through and OPNsense completes installation. I even got SR-IOV working and had 4 VFs configured on each physical port. I was feeling good. Then came the body punch. Once I connected the network cables and started up the VM it again hung...right when it was configuring the hardware.
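For reference, VFs are normally created by writing a count to the port's `sriov_numvfs` file in sysfs. The sketch below assumes the host igb driver owns the physical port (so not a port that is bound to vfio-pci for whole-card passthrough); the helper name `set_num_vfs` and the interface name `enp1s0f0` are placeholders, not values from the attached config.

```python
from pathlib import Path


def set_num_vfs(iface, count, net_root="/sys/class/net"):
    """Set the number of SR-IOV virtual functions on one physical port."""
    device = Path(net_root) / iface / "device"
    total = int((device / "sriov_totalvfs").read_text().strip())
    if not 0 <= count <= total:
        raise ValueError(f"{iface} supports 0..{total} VFs, requested {count}")
    numvfs = device / "sriov_numvfs"
    current = int(numvfs.read_text().strip())
    if current == count:
        return count
    if current != 0:
        # The kernel refuses to change a non-zero VF count directly,
        # so drop back to 0 before writing the new value.
        numvfs.write_text("0")
    numvfs.write_text(str(count))
    return count


if __name__ == "__main__":
    # Placeholder interface name; needs root on a real host.
    set_num_vfs("enp1s0f0", 4)
```

The setting does not survive a reboot by itself, so it has to be reapplied at boot by whatever mechanism you prefer.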

I am really lost at this point. Since I am configuring 6 VLANs, plus a physical interface, I am wondering if there is a timeout I am hitting which then causes the hang condition?

So it works until your card actually needs to use SR-IOV - is it an authentic Intel card? There are loads of cards out there that aren't authentic, they are either clones or rejects and I've had my fair share of those (in workstations) that overheat or have other defects.

Also check if there is a firmware update for the card.

Not using SR-IOV on this VM... just passing the physical adapter through to the VM. SR-IOV is for other VMs.

I did check on whether it is an authentic card or a China fake. It looks real to me. Here is a picture of the card I am using. I am using the one on the bottom of the pics. It is labeled as a 2013 build. I am checking on driver and firmware updates.

Attachments

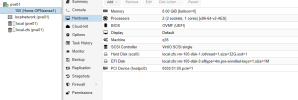

Disconnected the network cables once again and tested. It boots up and configures without hanging, so the problem seems to show up only when I actually connect it to the network. Attached is the config of the VM.

Continuing to test...

Attachments

I see various reports of similar issues with FreeBSD: basically, the I350 in VF mode will hang if it sees a VLAN packet, and there was never really a solution. So OPNsense is hanging on your hardware 'choices'. On your hardware you may be limited to using a bare bridge for each port and giving the VM an emulated (VirtIO) NIC instead.

Given you passed the entire hardware through, it's not a Proxmox 'issue' anymore - maybe an updated FreeBSD kernel driver (igb) or Intel firmware may have fixed it?

Well...well...well....

I moved it to a different switch and it now boots successfully with the network attached. This is so freaking weird. I was originally using a Netgear GS108Ev3 8-port switch and moved it to a Netgear GS108Tv3 8-port switch.

So I am continuing to make progress. Next step is to get the most current driver and firmware updates for the I350.
