Has anyone successfully gotten OPNsense to run reliably in a PCIe pass-through environment?

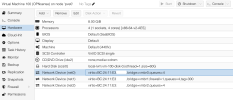

I have been trying to pass through all four ports of my Intel I350 PCIe network card, and it hangs the VM and then the PVE host itself while it enumerates the NIC hardware and VLANs.

I can't even get as far as installing OPNsense. I'm getting very frustrated with this and am ready to dump it.