Hi,

I want to set up two OPNsense VMs in HA mode so that they use pfsync to communicate, and if one VM fails the other one takes over. The idea is that when one of the servers has problems and needs to be repaired, my home network won't be offline for days or weeks.

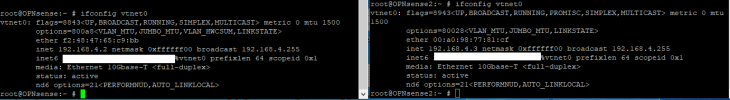

I only have 1 Proxmox server, 1 FreeNAS server and a managed switch. Both servers have 2x Gbit Ethernet + 1x 10G SFP+, and I was able to set up tagged VLANs so that both servers use 7 VLANs over that single 10G NIC. I've set up 7 bridges on each server, each one connected to a different VLAN. I've read that using a virtual NIC as the WAN port isn't the best idea and that it is more secure to pass a physical NIC through to the VM and use that as WAN. I have already passed one of the Gbit NICs through to the VM on the Proxmox server and want to do the same on the FreeNAS server.

My first idea was to add 7 virtio NICs to the OPNsense VM, one for each bridge/VLAN, but then I asked myself whether that is the best way. As far as I know, OPNsense also supports VLANs itself. Would it be more performant/secure to use just 1 virtio NIC connected to a VLAN-capable bridge carrying all 7 tagged VLANs, or is it better to create 7 virtio NICs so that I don't need to handle VLANs inside the VM, because the bridge behind each virtio NIC is already doing the tagging?
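
To make the two options concrete, this is roughly how I understand they would look in `/etc/network/interfaces` on the Proxmox host. It is only a sketch: the NIC name `enp1s0`, the bridge names and VLAN ID 10 are placeholders, not my real values, and only one of the 7 VLANs is shown for option A.

```
# Option A: one bridge per VLAN, the host does the tagging.
# The VM gets one virtio NIC per bridge and sees untagged traffic.
auto enp1s0.10
iface enp1s0.10 inet manual

auto vmbr10
iface vmbr10 inet manual
    bridge-ports enp1s0.10
    bridge-stp off
    bridge-fd 0

# Option B: a single VLAN-aware bridge.
# The VM gets one virtio NIC as a trunk and OPNsense creates the VLAN interfaces itself.
auto vmbr0
iface vmbr0 inet manual
    bridge-ports enp1s0
    bridge-stp off
    bridge-fd 0
    bridge-vlan-aware yes
    bridge-vids 2-4094
```

With option B the virtio NIC would have to be attached to `vmbr0` without a VLAN tag set on it, so that all tagged frames reach the VM.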

Another thing I don't understand is when hardware offloading should be enabled or disabled, and where to disable it.

If I pass a single port of a multiport NIC through to an OPNsense VM via PCI passthrough, do I need to disable hardware offloading on the host or inside the VM?

If I use virtio NICs that are not connected to a physical NIC, should I disable hardware offloading?

And what about my 10G NIC? I use it on the host itself, but virtio NICs are also attached to a bridge that is connected to that NIC. Do I need to disable hardware offloading on the host as well if VMs are using that NIC through virtio NICs?
