Hello Proxmox Community,

I have a problem similar to the one in this thread:

https://forum.proxmox.com/threads/one-nic-but-need-multiple-ips.5088/

I have one network port and multiple IP addresses in the same local subnet.

I need to give each VM a separate IP address so that the VMs look like independent servers. However, their traffic must go through the host system's gateway (they must not use their own MAC addresses).
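For comparison, this requirement (VM traffic routed through the host so that only the host's MAC address appears on the physical network) is what a routed, proxy-ARP setup provides. Below is a minimal host-side sketch modeled on the "Routed Configuration" example in the Proxmox VE documentation. It assumes a plain Linux bridge with no physical ports (`vmbr1`, a hypothetical name) instead of Open vSwitch. It also assumes one /32 host route per VM address. The addresses (host 10.48.33.12, gateway 10.48.33.1, VM 10.48.33.45) are the ones used elsewhere in this post. This is an untested sketch, not a verified configuration for this network.

```
auto lo
iface lo inet loopback

# Physical NIC keeps the host's own address in the shared /24
auto eno1
iface eno1 inet static
    address 10.48.33.12/24
    gateway 10.48.33.1
    post-up echo 1 > /proc/sys/net/ipv4/ip_forward
    post-up echo 1 > /proc/sys/net/ipv4/conf/eno1/proxy_arp

# Hypothetical internal bridge with no physical ports; VM NICs attach here
auto vmbr1
iface vmbr1 inet static
    address 10.48.33.12/32
    bridge-ports none
    bridge-stp off
    bridge-fd 0
    # Assumption: one /32 host route per additional VM address
    post-up ip route add 10.48.33.45/32 dev vmbr1
```

With `proxy_arp` enabled on `eno1`, the host answers ARP requests for 10.48.33.45 with its own MAC address, because its route to that address points out a different interface (`vmbr1`). The VM's packets then leave through `eno1` with the host's MAC.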

I'm trying to use Open vSwitch for this, but I can't find suitable configuration examples.

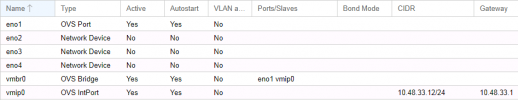

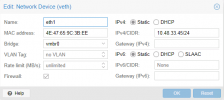

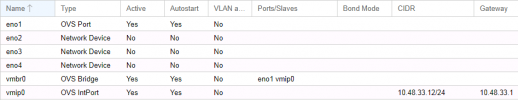

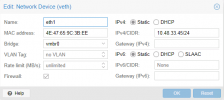

My current settings look like this:

```
auto lo
iface lo inet loopback

iface eno2 inet manual

iface eno3 inet manual

iface eno4 inet manual

auto eno1
iface eno1 inet manual
    ovs_type OVSPort
    ovs_bridge vmbr0
    post-up echo 1 > /proc/sys/net/ipv4/ip_forward
    post-up echo 1 > /proc/sys/net/ipv4/conf/eno1/proxy_arp

auto vmip0
iface vmip0 inet static
    address 10.48.33.12/24
    gateway 10.48.33.1
    ovs_type OVSIntPort
    ovs_bridge vmbr0

auto vmbr0
iface vmbr0 inet manual
    ovs_type OVSBridge
    ovs_ports eno1 vmip0
```

But I can't get any further. When I assign a VM a separate IP (for example 10.48.33.45), it doesn't work: 10.48.33.45 is reachable from the host system's console, but the VM has no external network connectivity in either direction.
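On the VM side, a routed setup usually means giving the guest a /32 address and using the host as a point-to-point gateway. Here is a minimal sketch for a Debian-style guest using ifupdown. It assumes the host routes 10.48.33.45 to the VM and answers ARP for it (proxy ARP), with the host's own address, 10.48.33.12, as the gateway. The interface name `ens18` is hypothetical.

```
# Inside the VM; "ens18" is a hypothetical NIC name
auto ens18
iface ens18 inet static
    address 10.48.33.45
    netmask 255.255.255.255
    # Host address treated as a directly reachable peer
    pointopoint 10.48.33.12
    gateway 10.48.33.12
```

The `pointopoint` line makes 10.48.33.12 directly reachable even though it lies outside the guest's /32, so the default route via it can be installed.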

I know it's possible to use VLAN tags in some way (e.g. `ovs_options tag=01 trunks=1,2,3,4`), but how would that apply in this situation?
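For reference, this is the general shape of a VLAN-tagged OVS internal port in `/etc/network/interfaces`, following the pattern of the Proxmox Open vSwitch examples. The interface name `vlan10`, the VLAN ID 10 and the address are placeholders. A tag only places the port into that VLAN on `vmbr0`, so it would only help if the upstream network actually delivers the additional IPs on tagged VLANs. For a VM, the equivalent is the VLAN Tag setting on the VM's network device.

```
auto vlan10
iface vlan10 inet static
    address 10.10.10.12/24
    ovs_type OVSIntPort
    ovs_bridge vmbr0
    # Access port on VLAN 10 (placeholder ID)
    ovs_options tag=10
```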