Hi all,

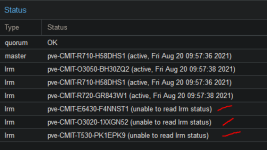


At one of our customers, we used to have a 2-node cluster with nodes named "mdc0" and "mdc1". One of the servers (mdc0) failed, and we removed it using `pvecm delnode mdc0`.

I've noticed that this old node is still showing up in the HA menu of the GUI:

Here is the output of `/etc/pve/.members` (the old node is not listed):

```
root@mdc1:~# cat /etc/pve/.members
{
  "nodename": "mdc1",
  "version": 3,
  "cluster": { "name": "cluster", "version": 3, "nodes": 1, "quorate": 1 },
  "nodelist": {
    "mdc1": { "id": 2, "online": 1, "ip": "192.168.0.199"}
  }
}
```

It doesn't appear in `/etc/pve/corosync.conf` either:

~~The only thing I notice here is that `bindnetaddr` is the old node's (mdc0) address; the current node (mdc1) has 192.168.0.199.~~

Code:

root@mdc1:~# cat /etc/pve/corosync.conf

logging {

debug: off

to_syslog: yes

}

nodelist {

node {

name: mdc1

nodeid: 2

quorum_votes: 1

ring0_addr: mdc1

}

}

quorum {

provider: corosync_votequorum

}

totem {

cluster_name: cluster

config_version: 3

ip_version: ipv4

secauth: on

version: 2

interface {

bindnetaddr: 192.168.0.200

ringnumber: 0

}

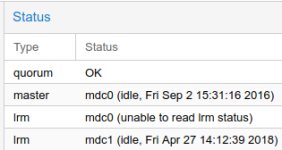

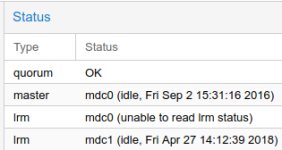

}But it shows if I run ha-manager status:

```
root@mdc1:~# ha-manager status
quorum OK
master mdc0 (idle, Fri Sep 2 15:31:16 2016)
lrm mdc0 (unable to read lrm status)
lrm mdc1 (idle, Fri Apr 27 14:14:14 2018)
```

Any ideas?
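To make the mismatch explicit, here is a quick Python sketch that compares the two outputs above. It treats the keys of `nodelist` in `.members` as the cluster membership and the node names on the `master`/`lrm` lines of `ha-manager status` as what the HA stack still references. The helper names are my own (hypothetical, not part of Proxmox), the parsing assumes exactly the output format shown in this post, and it only detects the stale entry; it does not remove it.

```python
import json
import re

# Output of `cat /etc/pve/.members` on mdc1, copied from this post.
MEMBERS_JSON = """
{
  "nodename": "mdc1",
  "version": 3,
  "cluster": { "name": "cluster", "version": 3, "nodes": 1, "quorate": 1 },
  "nodelist": {
    "mdc1": { "id": 2, "online": 1, "ip": "192.168.0.199"}
  }
}
"""

# Output of `ha-manager status` on mdc1, copied from this post.
HA_STATUS = """\
quorum OK
master mdc0 (idle, Fri Sep 2 15:31:16 2016)
lrm mdc0 (unable to read lrm status)
lrm mdc1 (idle, Fri Apr 27 14:14:14 2018)
"""


def cluster_nodes(members_text: str) -> set[str]:
    """Node names listed under "nodelist" in the .members JSON."""
    return set(json.loads(members_text)["nodelist"])


def ha_nodes(status_text: str) -> set[str]:
    """Node names on the 'master' and 'lrm' lines of ha-manager status."""
    pattern = re.compile(r"^(?:master|lrm)\s+(\S+)", re.MULTILINE)
    return set(pattern.findall(status_text))


def stale_ha_nodes(members_text: str, status_text: str) -> set[str]:
    """Nodes the HA status still mentions but .members no longer lists."""
    return ha_nodes(status_text) - cluster_nodes(members_text)


if __name__ == "__main__":
    print(sorted(stale_ha_nodes(MEMBERS_JSON, HA_STATUS)))  # ['mdc0']
```

Against the outputs in this post it reports only `mdc0`, the node that `pvecm delnode` already removed from the cluster membership.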

Thank you!