Hello,

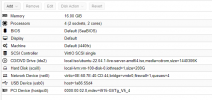

I've been running Proxmox on a new setup for a couple of weeks now. The screenshots below show the version etc.; if you need more stats on Proxmox, let me know.

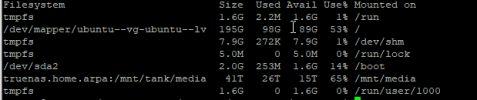

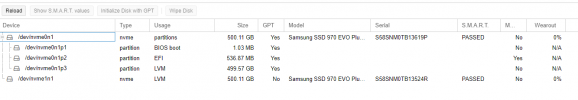

I have an i5-10400 on an MPG Z590 Gaming Force motherboard (https://www.amazon.com/gp/product/B08WC8QDTC?th=1) and 64 GB of RAM. The server has two NVMe drives: one for the Proxmox OS and one that holds all the VM disks. I have an Ubuntu 22.04 server running Docker on this Proxmox host and it works fine, but file transfers seem capped at about 400-500 MB/s. I forgot to mention that this Proxmox server has a 10GbE card connected to a Brocade switch. Using iperf, I can test from my TrueNAS server (also on 10GbE) and hit the expected throughput of 800-900 MB/s, so as far as I can tell this is not a network issue.
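To put those numbers on one scale, here is a small sketch that converts the MB/s figures above into Gbit/s and compares them with the nominal 10 Gbit/s link rate. It assumes decimal megabytes (1 MB = 10^6 bytes) and ignores protocol overhead; the function name and labels are only for illustration.

```python
# Convert the throughput figures from this post to Gbit/s.
# Assumption: decimal units (1 MB = 10**6 bytes), no protocol overhead.

LINK_GBITS = 10.0  # nominal 10GbE line rate


def mbytes_to_gbits(mb_per_s: float) -> float:
    """Convert megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000


measurements = [
    ("file transfer (low)", 400),
    ("file transfer (high)", 500),
    ("iperf (low)", 800),
    ("iperf (high)", 900),
]

for label, mb in measurements:
    gbits = mbytes_to_gbits(mb)
    share = gbits / LINK_GBITS
    print(f"{label}: {mb} MB/s = {gbits:.1f} Gbit/s ({share:.0%} of link)")
```

By this arithmetic the file transfers use roughly a third to two fifths of the link, while iperf reaches roughly two thirds to three quarters of it.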

Should I pass through the NVMe, or am I missing some settings/drivers? I'm puzzled. Any help or ideas? I'm happy to run any tests needed.
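As one example of a test I could run inside the Ubuntu guest, here is a rough sketch of a sequential write check that takes the network out of the picture. The file name, total size, and block size are arbitrary assumptions, and this is only a sanity check, not a substitute for a proper disk benchmark.

```python
import os
import time


def sequential_write_mib_per_s(path: str, total_mib: int = 256, block_mib: int = 4) -> float:
    """Write total_mib MiB to path in block_mib MiB chunks and return MiB/s.

    The timing includes flush + fsync so the result reflects data pushed
    to the storage layer rather than only to the page cache.
    """
    block = os.urandom(block_mib * 1024 * 1024)  # random data, reused per block
    blocks = total_mib // block_mib
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return (blocks * block_mib) / elapsed


if __name__ == "__main__":
    test_file = "throughput_test.bin"  # hypothetical path on the VM disk
    try:
        rate = sequential_write_mib_per_s(test_file)
        print(f"sequential write: {rate:.0f} MiB/s")
    finally:
        if os.path.exists(test_file):
            os.remove(test_file)
```

If the number from inside the guest lands near the 400-500 MB/s cap, that would point at the virtual disk path rather than the network.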
