I have a TeamGroup 1TB NVMe drive that is advertised to do 1800/1500 MB/s read/write. It is a PCIe 3.0 x4 drive, installed via an M.2 to PCIe adapter that is backwards compatible with PCIe 2.0. The motherboard only has PCIe 2.0 slots (an x16 and an x4). The reduced link speed is noted in dmesg, where 0000:00:15.0 is the PCI bridge:

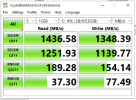

Code:

pci 0000:07:00.0: 16.000 Gb/s available PCIe bandwidth, limited by 5.0 GT/s PCIe x4 link at 0000:00:15.0 (capable of 31.504 Gb/s with 8.0 GT/s PCIe x4 link)

The drive is passing through fine to a Windows 10 VM, but CrystalDiskMark is surprisingly (and consistently) showing write speeds that are the same as or slightly higher than the read speeds, and both are slower than expected (with default test settings):

This drive is being used to run the OS - it is not secondary storage.

Given the speeds, it seems to me that it's being limited to 2 lanes, or maybe to PCIe 1.0 (1.0 GB/s on x4)?

What I have tried:

- rombar on and off

- switching PCIe slots (there is also a PCIe 2.0 x16)

- Installing the drive in a second machine with an identical motherboard. That machine runs Ubuntu 22, recognized the drive fine with the expected read/write speeds, and uses the same make of graphics card (see the next item).

- Removing a graphics card at PCI address 1:00.0 that is using 8 lanes of the PCIe 2.0 x16 slot.
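For anyone wanting to compare, the link the host actually negotiated can also be read from sysfs instead of dmesg. This is only a minimal sketch, assuming a Linux host that exposes the standard PCI link attributes under `/sys/bus/pci/devices`, and assuming 0000:07:00.0 is the NVMe drive (the dmesg line suggests this, but I have not confirmed it). `read_link_status` is a hypothetical helper name, not part of any tool.

```python
from pathlib import Path

# Standard PCI sysfs attributes describing the negotiated and maximum link.
LINK_ATTRS = (
    "current_link_speed",
    "current_link_width",
    "max_link_speed",
    "max_link_width",
)


def read_link_status(bdf: str, sysfs_root: str = "/sys/bus/pci/devices") -> dict:
    """Return the link attributes for one PCI device (None where missing).

    bdf is the full address, e.g. "0000:07:00.0". sysfs_root is a parameter
    only so the function can be pointed at a test directory.
    """
    dev = Path(sysfs_root) / bdf
    status = {}
    for attr in LINK_ATTRS:
        path = dev / attr
        status[attr] = path.read_text().strip() if path.exists() else None
    return status


if __name__ == "__main__":
    # Assumed addresses: the drive and the bridge named in the dmesg line.
    for bdf in ("0000:07:00.0", "0000:00:15.0"):
        print(bdf, read_link_status(bdf))
```

If `current_link_width` comes back lower than `max_link_width`, or `current_link_speed` shows 2.5 GT/s, that would point at the link itself rather than the VM.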

I don't understand this as well as I would like to, which is partly why I am posting. PCIe 2.0 on 4 lanes should give 2.0 GB/s, which is higher than the drive's advertised speeds. It just doesn't seem right to me, especially since the write speed is higher than the read speed. After double-checking the passthrough settings, I figured I should just post it and see what anyone thinks.
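To show where my numbers come from, here is a rough sketch of the arithmetic, assuming the usual per-lane rates and line encodings (2.5 GT/s and 5.0 GT/s with 8b/10b for PCIe 1.0 and 2.0, 8.0 GT/s with 128b/130b for PCIe 3.0). The function names are my own, and the results are theoretical link limits before any protocol overhead, so real throughput will be somewhat lower.

```python
# Per-lane raw rate (GT/s) and encoding efficiency for each PCIe generation.
PCIE_GENERATIONS = {
    "1.0": (2.5, 8 / 10),     # 8b/10b encoding
    "2.0": (5.0, 8 / 10),     # 8b/10b encoding
    "3.0": (8.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_gbit(gen: str, lanes: int) -> float:
    """Theoretical usable link bandwidth in Gb/s."""
    rate_gt, efficiency = PCIE_GENERATIONS[gen]
    return rate_gt * efficiency * lanes


def link_bandwidth_gbyte(gen: str, lanes: int) -> float:
    """Theoretical usable link bandwidth in GB/s (8 bits per byte)."""
    return link_bandwidth_gbit(gen, lanes) / 8


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        for lanes in (1, 2, 4):
            gbit = link_bandwidth_gbit(gen, lanes)
            gbyte = link_bandwidth_gbyte(gen, lanes)
            print(f"PCIe {gen} x{lanes}: {gbit:.3f} Gb/s = {gbyte:.3f} GB/s")
```

PCIe 2.0 x4 works out to 16 Gb/s (2.0 GB/s), matching the dmesg line, and PCIe 3.0 x4 comes out close to the 31.504 Gb/s the kernel reports (the small difference is the kernel's rounding). Both of my guesses, PCIe 2.0 x2 and PCIe 1.0 x4, work out to 1.0 GB/s, so the arithmetic alone cannot tell them apart.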