Hi everyone,

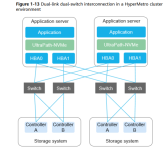

I’m working with an NVMe-over-TCP storage backend based on Huawei Dorado arrays in active-active HyperMetro mode.

The hosts are running Proxmox / Debian 12, with native NVMe multipathing enabled (nvme_core.multipath=Y).

Connectivity looks healthy: for each namespace, the host sees 8 active paths (2 host interfaces × 4 storage interfaces), for example:

Code:

root@applidisagpve08:/home/UltraPath/LinuxOther/packages# nvme list-subsys

nvme-subsys0 - NQN=VN0WW56VFCV0051802P1Dell BOSS-N1

hostnqn=nqn.2014-08.org.nvmexpress:uuid:4c4c4544-0046-4810-805a-b9c04f4d3834

\

+- nvme0 pcie 0000:01:00.0 live

nvme-subsys10 - NQN=nqn.2020-02.huawei.nvme:nvm-subsystem-sn-2102355GVCTURA910015

hostnqn=nqn.2014-08.org.nvmexpress:uuid:4c4c4544-0046-4810-805a-b9c04f4d3834

\

+- nvme10 tcp traddr=10.88.8.55,trsvcid=4420,host_traddr=10.88.8.59,host_iface=eno12409np1,src_addr=10.88.8.59 live

+- nvme11 tcp traddr=10.88.8.55,trsvcid=4420,host_traddr=10.88.8.60,host_iface=ens1f0np0,src_addr=10.88.8.60 live

+- nvme12 tcp traddr=10.88.8.56,trsvcid=4420,host_traddr=10.88.8.59,host_iface=eno12409np1,src_addr=10.88.8.59 live

+- nvme13 tcp traddr=10.88.8.56,trsvcid=4420,host_traddr=10.88.8.60,host_iface=ens1f0np0,src_addr=10.88.8.60 live

+- nvme14 tcp traddr=10.88.8.57,trsvcid=4420,host_traddr=10.88.8.59,host_iface=eno12409np1,src_addr=10.88.8.59 live

+- nvme15 tcp traddr=10.88.8.57,trsvcid=4420,host_traddr=10.88.8.60,host_iface=ens1f0np0,src_addr=10.88.8.60 live

+- nvme16 tcp traddr=10.88.8.58,trsvcid=4420,host_traddr=10.88.8.59,host_iface=eno12409np1,src_addr=10.88.8.59 live

+- nvme17 tcp traddr=10.88.8.58,trsvcid=4420,host_traddr=10.88.8.60,host_iface=ens1f0np0,src_addr=10.88.8.60 live

nvme-subsys2 - NQN=nqn.2020-02.huawei.nvme:nvm-subsystem-sn-2102355GVCTURA910014

hostnqn=nqn.2014-08.org.nvmexpress:uuid:4c4c4544-0046-4810-805a-b9c04f4d3834

\

+- nvme2 tcp traddr=10.88.8.51,trsvcid=4420,host_traddr=10.88.8.59,host_iface=eno12409np1,src_addr=10.88.8.59 live

+- nvme3 tcp traddr=10.88.8.51,trsvcid=4420,host_traddr=10.88.8.60,host_iface=ens1f0np0,src_addr=10.88.8.60 live

+- nvme4 tcp traddr=10.88.8.52,trsvcid=4420,host_traddr=10.88.8.59,host_iface=eno12409np1,src_addr=10.88.8.59 live

+- nvme5 tcp traddr=10.88.8.52,trsvcid=4420,host_traddr=10.88.8.60,host_iface=ens1f0np0,src_addr=10.88.8.60 live

+- nvme6 tcp traddr=10.88.8.53,trsvcid=4420,host_traddr=10.88.8.59,host_iface=eno12409np1,src_addr=10.88.8.59 live

+- nvme7 tcp traddr=10.88.8.53,trsvcid=4420,host_traddr=10.88.8.60,host_iface=ens1f0np0,src_addr=10.88.8.60 live

+- nvme8 tcp traddr=10.88.8.54,trsvcid=4420,host_traddr=10.88.8.59,host_iface=eno12409np1,src_addr=10.88.8.59 live

+- nvme9 tcp traddr=10.88.8.54,trsvcid=4420,host_traddr=10.88.8.60,host_iface=ens1f0np0,src_addr=10.88.8.60 live

So far so good.
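To double-check the path count without reading the tree by eye, a small helper along these lines could be used. It is only a sketch: `count_live_paths` is a name I made up (it is not part of nvme-cli), and it assumes the `nvme list-subsys` text layout shown above, i.e. a `nvme-subsysN - NQN=...` header followed by `+- nvmeX ... live` lines.

```shell
#!/bin/sh
# Hypothetical helper (not part of nvme-cli): reads `nvme list-subsys`
# text on stdin and prints "<subsystem> <number of live paths>".
# Assumes the plain-text layout shown in this post.
count_live_paths() {
    awk '
        # Subsystem header, e.g. "nvme-subsys2 - NQN=..."
        $1 ~ /^nvme-subsys[0-9]+$/ && $2 == "-" {
            subsys = $1
            order[++n] = subsys
            count[subsys] = 0
        }
        # Path line, e.g. "+- nvme2 tcp traddr=... live"
        $1 == "+-" && $2 ~ /^nvme[0-9]+$/ && $NF == "live" {
            count[subsys]++
        }
        END {
            for (i = 1; i <= n; i++) print order[i], count[order[i]]
        }
    '
}

# Usage (on the host): nvme list-subsys | count_live_paths
```

For the output above I would expect it to report 8 for each of the two Huawei subsystems, matching the 2 × 4 layout.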

When I run:

Code:

nvme ana-log /dev/nvme2n1 --verbose

I get:

Code:

root@applidisagpve08:/home/UltraPath/LinuxOther/packages# nvme ana-log /dev/nvme2n1 --verbose

Asymmetric Namespace Access Log for NVMe device: nvme2n1

ANA LOG HEADER :-

chgcnt : 0

ngrps : 4

ANA Log Desc :-

grpid : 1

nnsids : 3

chgcnt : 0

state : optimized

nsid : 1

nsid : 2

nsid : 3

grpid : 2

nnsids : 0

chgcnt : 0

state : non-optimized

grpid : 3

nnsids : 0

chgcnt : 0

state : inaccessible

grpid : 4

nnsids : 0

chgcnt : 0

state : persistent-loss

This means the array assigns an Owner Controller per namespace.

Optimized paths = best latency.

Non-optimized paths = functional but slower (controller-to-controller routing).
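To see which state each individual path reports, the per-path ANA state can be read from sysfs. This is a rough sketch rather than a definitive method: `list_ana_states` is a hypothetical helper name, and it assumes the per-path namespace devices show up as `nvmeXcYnZ` directories under `/sys/class/nvme/nvmeY/` with an `ana_state` attribute, which is what I understand recent kernels with native multipath expose.

```shell
#!/bin/sh
# Hypothetical helper: prints "<path device> <ANA state>" for every
# per-path namespace device found under the given sysfs root.
# Assumption: per-path devices are named nvmeXcYnZ and carry an
# "ana_state" attribute; the root defaults to /sys.
list_ana_states() {
    root="${1:-/sys}"
    for f in "$root"/class/nvme/nvme*/nvme*c*n*/ana_state; do
        [ -r "$f" ] || continue      # glob matched nothing, or unreadable
        dev=$(basename "$(dirname "$f")")
        printf '%s %s\n' "$dev" "$(cat "$f")"
    done
}

# Usage (on the host): list_ana_states
```

Comparing this list with the `traddr` of each controller should show which storage interfaces sit on the owning controller of a namespace.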

Issue with Native NVMe Multipath

The Linux NVMe multipath implementation:

- Does not prioritize optimized ANA paths

- Treats optimized and non-optimized paths as equal

- Uses round-robin across all paths

- Therefore sends part of the I/O over non-optimized (cross-controller) paths

This results in:

- Latency fluctuation

- Lower performance under load

- Less clean failover during HyperMetro controller transitions
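To confirm which path selection policy is actually in effect, the `iopolicy` attribute of each subsystem can be read from sysfs. The sketch below uses a hypothetical helper name, `show_iopolicy`, and assumes the attribute lives at `/sys/class/nvme-subsystem/nvme-subsysN/iopolicy`; as far as I know the documented values are `numa`, `round-robin` and, on newer kernels, `queue-depth`.

```shell
#!/bin/sh
# Hypothetical helper: prints "<subsystem> <iopolicy>" for every NVMe
# subsystem found under the given sysfs root (defaults to /sys).
# Assumption: the policy is exposed as a per-subsystem "iopolicy" file.
show_iopolicy() {
    root="${1:-/sys}"
    for f in "$root"/class/nvme-subsystem/nvme-subsys*/iopolicy; do
        [ -r "$f" ] || continue      # glob matched nothing, or unreadable
        subsys=$(basename "$(dirname "$f")")
        printf '%s %s\n' "$subsys" "$(cat "$f")"
    done
}

# Usage (on the host): show_iopolicy
```

The policy can be changed at runtime by writing one of the policy names into the same file as root, so it is worth recording the current value before testing anything.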

Environment Details

- Proxmox VE 9

- Kernel: 6.14.11-4-pve

- nvme-cli version: 2.13

- Storage: Huawei Dorado NVMe/TCP, HyperMetro active-active

The Question

Is there a way within the native NVMe multipath framework to:

Prefer or force usage of ANA “optimized” paths

Or automatically deprioritize / avoid “non-optimized” ANA paths

Without using a vendor-specific multipath plugin (e.g., Huawei UltraPath, which is not compatible with Debian for the moment)?