Long-time Proxmox user, but first-time graphics card virtualizer.

I've got a couple of Nvidia V100S 32G cards split between two servers. The Nvidia drivers are installed on the host as per the instructions, and the vGPU itself works fine in the VM with the GRID drivers. Licensing also works as expected.

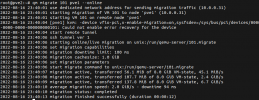

There are a number of components involved here, so I imagine the answer is rather complex, but is Nvidia vGPU live migration possible between two matching Proxmox nodes when the VM uses a mediated device? Am I missing some documentation that shows how to live migrate a VM with a mediated device attached? When I attempt a migration, it errors out because there is a hostpci device attached, which I assume is expected.

Any help would be appreciated!
