Hello Community,

I have the following Ceph question about PGs and OSD capacity:

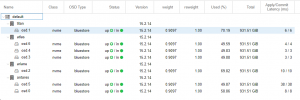

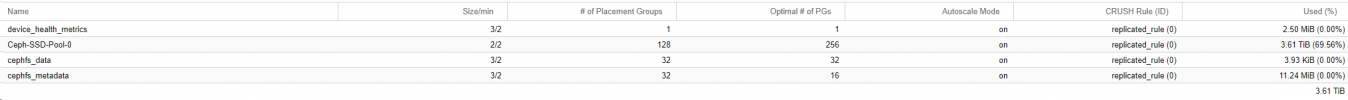

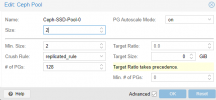

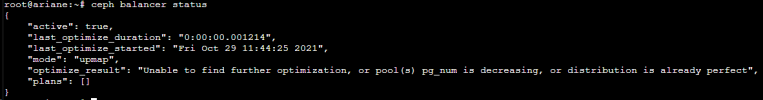

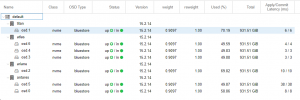

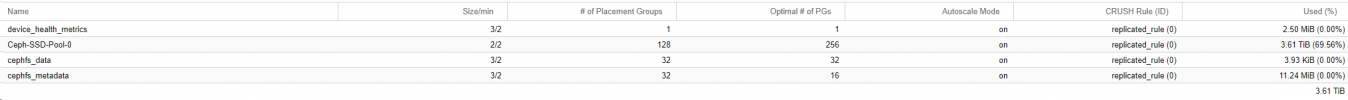

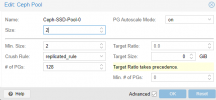

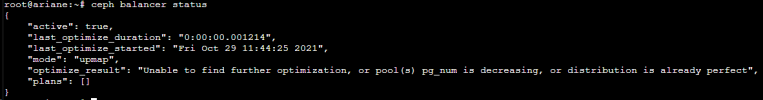

As you can see, the optimal number of PGs for my main pool (Ceph-SSD-Pool-0) is higher than its actual PG count of 193, so as far as I can tell the autoscaler is not working. No target settings have been set yet. I'm new to Ceph and would like your opinion on whether this is expected behavior or whether I should set a target ratio.
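For context, here is a simplified sketch of the usual PG-sizing heuristic. It assumes a target of about 100 PGs per OSD (the default of `mon_target_pg_per_osd`), a single replicated pool holding all of the data, and that the autoscaler only changes `pg_num` when the current value differs from its suggestion by more than a factor of 3 (its default threshold). The OSD count in the usage example is hypothetical. The real autoscaler weighs each pool's share of capacity in more detail than this, so treat it as an illustration, not Ceph's exact algorithm.

```python
import math


def nearest_power_of_two(n: float) -> int:
    """Round n to the nearest power of two in log space (simplified)."""
    if n <= 1:
        return 1
    return 2 ** round(math.log2(n))


def suggested_pg_num(num_osds: int, replica_size: int,
                     capacity_ratio: float = 1.0,
                     target_pgs_per_osd: int = 100) -> int:
    """Heuristic PG count for one pool: OSDs * target PGs per OSD * pool share / replicas."""
    raw = num_osds * target_pgs_per_osd * capacity_ratio / replica_size
    return nearest_power_of_two(raw)


def autoscaler_would_act(current_pg_num: int, suggested: int,
                         threshold: float = 3.0) -> bool:
    """Assumed rule: only act when current and suggested differ by more than `threshold`x."""
    ratio = max(current_pg_num, suggested) / min(current_pg_num, suggested)
    return ratio > threshold


if __name__ == "__main__":
    # Hypothetical cluster: 8 OSDs, replicated pool with size 3.
    suggested = suggested_pg_num(num_osds=8, replica_size=3)
    print(suggested, autoscaler_would_act(193, suggested))  # prints: 256 False
```

Under these assumptions, a pool sitting at 193 PGs is well within a factor of 3 of the suggestion, so the autoscaler would leave it alone even though a higher "optimal" value is displayed.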

I'm asking because the free space before I added the latest OSD was around 650 GB, and now it's only 870 GB. Since I added a 1 TB disk, I expected more than 1 TB of free space afterwards.
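A rough way to estimate the expected gain, assuming a replicated pool with size 3 (Ceph's default `osd_pool_default_size`; the actual size of Ceph-SSD-Pool-0 is not stated above): every byte stored consumes `size` bytes of raw space, so a 1 TB disk adds only about a third of a TB of usable space. This sketch ignores the full ratio and uneven data distribution across OSDs, both of which also affect the free space Ceph reports.

```python
def expected_free_gb(free_before_gb: float, raw_added_gb: float,
                     replica_size: int = 3) -> float:
    """Estimate usable free space after adding raw capacity to a replicated pool.

    Simplified: assumes each object is stored replica_size times and that the
    new capacity is used evenly; ignores full ratios and OSD imbalance.
    """
    if replica_size < 1:
        raise ValueError("replica_size must be at least 1")
    return free_before_gb + raw_added_gb / replica_size


if __name__ == "__main__":
    # 650 GB before and a 1 TB (1000 GB) disk come from the post; size 3 is an assumption.
    print(round(expected_free_gb(650, 1000, replica_size=3)))  # prints: 983
```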

Thanks in advance!