I am relatively new to Proxmox and Linux, so please bear with me if I don't use the proper terms or if this is a very basic question.

I have 3 Proxmox 8.1.3 nodes configured in a cluster. All 3 nodes are Lenovo Tiny PCs (2 x M920q and 1 x P360), each with an onboard (OEM) 1GbE port and a Dell-branded Mellanox CX322A dual-port 10GbE SFP+ NIC. Everything works well until I reboot my UniFi switches. When I do, I lose connectivity to my headless nodes and have to soft-reboot them with a short press of the power button. Other 1GbE and 10GbE clients regain connectivity automatically once the switches come back, so only the Proxmox nodes are affected.

The 1GbE port of each node is connected to a 24-port "core" switch, which is connected to the UDMP router. The 10GbE ports are connected via DAC (the 2 nodes in the same rack) or fiber optic (FO; the 1 node in another room) to UniFi 8-port SFP+ aggregation switches one or two levels downstream of the core switch. On all 3 nodes, Proxmox management runs over the onboard 1GbE port. When I reboot all the switches involved, the 1GbE port stays down, while in my latest test the 10GbE ports appeared to come back up. I am not 100% certain this holds in every case, as I think the 10GbE NIC also stayed down at some point in the recent past. In this latest test, 1 node survived the switch reboot while the other 2 did not.
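
To pin down which links actually come back (rather than relying on memory), one option is to record the kernel's view of every interface before and after a switch reboot and compare the two. This is a minimal sketch, assuming the standard Linux sysfs layout, where `/sys/class/net/<iface>/operstate` holds a state such as `up` or `down` and `/sys/class/net/<iface>/carrier` reads `1` when a link is detected. The function names `link_snapshot` and `changed_links` are hypothetical.

```python
from pathlib import Path
from typing import Dict, Optional, Tuple

Snapshot = Dict[str, Dict[str, Optional[str]]]


def _read(path: Path) -> Optional[str]:
    """Return the stripped contents of a sysfs attribute, or None if unreadable."""
    try:
        return path.read_text().strip()
    except OSError:
        # 'carrier' raises EINVAL while an interface is administratively down;
        # a missing file raises FileNotFoundError (also an OSError)
        return None


def link_snapshot(root: str = "/sys/class/net") -> Snapshot:
    """Map each non-loopback interface to its sysfs operstate and carrier values."""
    snapshot: Snapshot = {}
    for entry in sorted(Path(root).iterdir()):
        # skip loopback and plain files such as 'bonding_masters'
        if entry.name == "lo" or not entry.is_dir():
            continue
        snapshot[entry.name] = {
            "operstate": _read(entry / "operstate"),
            "carrier": _read(entry / "carrier"),
        }
    return snapshot


def changed_links(before: Snapshot, after: Snapshot) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {iface: (old_operstate, new_operstate)} for interfaces whose state differs."""
    result = {}
    for name in sorted(before.keys() | after.keys()):
        old = before.get(name, {}).get("operstate")
        new = after.get(name, {}).get("operstate")
        if old != new:
            result[name] = (old, new)
    return result
```

Calling `link_snapshot()` on each node before rebooting the switches and again afterwards, then passing both results to `changed_links`, would show whether only the 1GbE port stays down or the 10GbE ports do as well.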

While researching the "Autostart" setting, I found posts saying it should be enabled for the bridges and disabled for the physical interfaces, so that is how all 3 nodes are configured. As for "Active", I just noticed that I have a mix. On every node only the 1GbE port and one of the two 10GbE ports are in use, so the second 10GbE port should probably be set to No, but it does not seem to make a difference either way. Surprisingly, neither 10GbE port on the Maximus node is set to Active, yet the connected one works fine regardless.

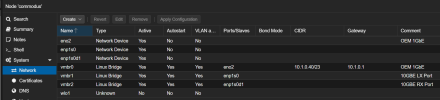

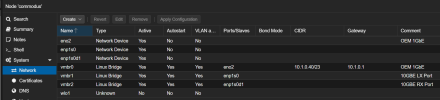
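
As far as I understand, the Autostart checkbox in the Proxmox GUI corresponds to an `auto <iface>` line in `/etc/network/interfaces`, so comparing that file across the three nodes is one way to check that they really are configured the same. The sketch below is a simplified, hypothetical parser (`autostart_report` is not a Proxmox tool): it only recognizes top-level `auto` and `iface` keywords and ignores `source` includes and `allow-hotplug`.

```python
from typing import Dict, Set


def autostart_report(text: str) -> Dict[str, bool]:
    """Map every interface named in an interfaces(5) file to whether it has an 'auto' line.

    Simplified: only 'auto' and 'iface' keywords are recognized;
    'source' includes and 'allow-hotplug' are ignored.
    """
    declared: Set[str] = set()
    autostarted: Set[str] = set()
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and indentation
        if not line:
            continue
        words = line.split()
        if words[0] == "auto":
            autostarted.update(words[1:])  # one 'auto' line may name several interfaces
        elif words[0] == "iface" and len(words) >= 2:
            declared.add(words[1])
        # indented option lines (address, bridge-ports, ...) fall through untouched
    return {name: name in autostarted for name in sorted(declared | autostarted)}
```

On a node, `autostart_report(open("/etc/network/interfaces").read())` should, with the configuration described above, report the bridges as `True` and the physical ports as `False`.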

Commodus survived, here is its config:

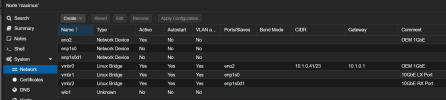

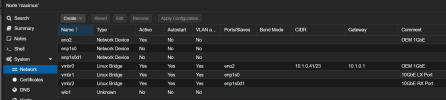

Maximus did not survive:

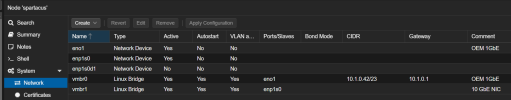

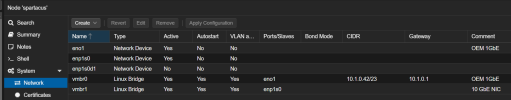

Spartacus did not survive:

Is there a way to make the node retry bringing up the network connection once the switch is back online?
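
One generic workaround (it treats the symptom, not whatever keeps the port down) is a small watchdog that checks whether the gateway is reachable and, after several consecutive failures, takes the management NIC down and up again in the hope that the link renegotiates with the switch. This is a sketch under assumptions: iputils `ping` and iproute2 `ip` are installed, it runs as root, and `eno1` and `192.168.1.1` are hypothetical placeholders for the node's real 1GbE port and gateway. The names `gateway_reachable`, `bounce` and `LinkWatchdog` are also hypothetical.

```python
import subprocess
import time
from typing import Callable

# Hypothetical placeholders: replace with the node's management NIC and gateway
IFACE = "eno1"
GATEWAY = "192.168.1.1"


def gateway_reachable(host: str) -> bool:
    """Send a single ICMP echo with iputils ping (2 s timeout) and report success."""
    result = subprocess.run(
        ["ping", "-c", "1", "-W", "2", host],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def bounce(iface: str, pause: float = 2.0) -> None:
    """Take the interface down, wait, and bring it back up (requires root)."""
    subprocess.run(["ip", "link", "set", "dev", iface, "down"], check=True)
    time.sleep(pause)
    subprocess.run(["ip", "link", "set", "dev", iface, "up"], check=True)


class LinkWatchdog:
    """Run a recovery action after `threshold` consecutive failed checks."""

    def __init__(self, check: Callable[[], bool], action: Callable[[], None], threshold: int = 3) -> None:
        self.check = check
        self.action = action
        self.threshold = threshold
        self.failures = 0

    def step(self) -> bool:
        """Perform one check; return True if the recovery action was triggered."""
        if self.check():
            self.failures = 0  # network is fine, reset the counter
            return False
        self.failures += 1
        if self.failures < self.threshold:
            return False
        self.failures = 0  # start counting afresh after each recovery attempt
        self.action()
        return True

    def run(self, interval: float = 30.0) -> None:
        """Check forever, sleeping `interval` seconds between checks."""
        while True:
            self.step()
            time.sleep(interval)
```

It could be started from a root shell or a systemd service with `LinkWatchdog(lambda: gateway_reachable(GATEWAY), lambda: bounce(IFACE)).run()`. The threshold keeps a single dropped ping from bouncing the port; if the management IP sits on a bridge such as `vmbr0`, the physical port behind it is probably the interface to bounce.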
