Greetings,

So, I decided to put two nodes into a cluster.

I created the cluster on node1 and joined it from node2.

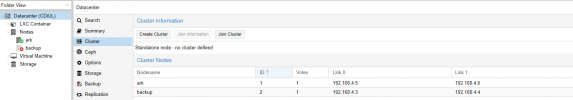

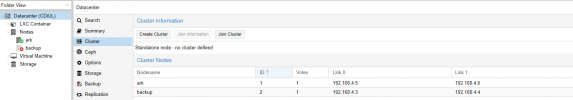

The Datacenter view on node1 shows two nodes under Cluster Nodes, even though the banner above it says "Standalone node - no cluster defined".

The command `pvecm nodes` on node1 lists only one node:

```
root@ark:~# pvecm nodes

Membership information
----------------------
Nodeid Votes Name
1 1 ark (local)
```

I tried restarting the pve-cluster service, with no change.

I wanted to re-add the node, so on node2 I ran:

```
systemctl stop pve-cluster
systemctl stop corosync
pmxcfs -l
rm /etc/pve/corosync.conf
rm -r /etc/corosync/*
killall pmxcfs
systemctl start pve-cluster
```

I expected this to remove node2 from the list, but it's still there.

The only step I have not done is `pvecm delnode 2`, because node2 does not appear in the output of `pvecm nodes`.
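`pvecm nodes` reports the current corosync membership, so a node that is offline will not show up there even if the cluster configuration still declares it. A minimal sketch for checking which nodes node1's configuration still lists, assuming the usual `nodelist { node { name: ... nodeid: ... } }` layout of `/etc/pve/corosync.conf`; `list_conf_nodes` is a hypothetical helper, not a Proxmox command:

```sh
#!/bin/sh
# list_conf_nodes (hypothetical helper): print "nodeid name" for every
# node { ... } block found in a corosync.conf file.
# Argument: path to the file; defaults to /etc/pve/corosync.conf.
list_conf_nodes() {
  conf="${1:-/etc/pve/corosync.conf}"
  awk '
    # Start of a "node {" block ("nodelist {" does not match).
    /^[ \t]*node[ \t]*[{]/   { in_node = 1; name = ""; id = ""; next }
    # Inside a node block, remember its name and nodeid fields.
    in_node && /^[ \t]*name:/   { name = $2 }
    in_node && /^[ \t]*nodeid:/ { id = $2 }
    # Closing brace ends the node block: emit what was collected.
    in_node && /[}]/ { print id, name; in_node = 0 }
  ' "$conf"
}
```

On node1 this would be run as `list_conf_nodes` (reading `/etc/pve/corosync.conf`) or with an explicit path. If node2 is still listed, note that the `pvecm` documentation describes the argument of `pvecm delnode` as the cluster node name rather than the numeric node ID.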

`pvecm status` returns:

```
root@ark:~# pvecm status
Cluster information
-------------------
Name: CDiUL
Config Version: 2
Transport: knet
Secure auth: on

Quorum information
------------------
Date: Tue Jan 26 16:11:05 2021
Quorum provider: corosync_votequorum
Nodes: 1
Node ID: 0x00000001
Ring ID: 1.5
Quorate: No

Votequorum information
----------------------
Expected votes: 2
Highest expected: 2
Total votes: 1
Quorum: 2 Activity blocked
Flags:

Membership information
----------------------
Nodeid Votes Name
0x00000001 1 192.168.4.5 (local)
```
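The `Quorum: 2 Activity blocked` line is consistent with corosync votequorum's majority rule, which (assuming the default configuration without the `two_node` option) requires more than half of the expected votes:

$$
\text{quorum} = \left\lfloor \frac{\text{expected votes}}{2} \right\rfloor + 1 = \left\lfloor \frac{2}{2} \right\rfloor + 1 = 2 > 1 = \text{total votes}
$$

So node1 on its own is not quorate, and while quorum is missing Proxmox keeps `/etc/pve` read-only.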

Please advise how to remove node2 from the cluster on node1, and then remove the whole cluster from node1.

node2 is a fresh, empty Proxmox install with no data on it, while node1 runs some production VMs.

Thank you.
