Node maintenance mode UI

- Thread starter: chrispage1
- Tags: maintenance, pve-ha-crm


+1 on adding this to the GUI. Also an indication, like the OP posted, that the node is paused/in maintenance.

A lot of people seem to miss the maintenance mode... can you explain what you use it for? I've never used it in VMware, and I never saw the point of it in a cluster. As VMs are already taken care of by HA or manual migration, what should it do? How often are people who are not aware of the maintenance playing around in your PVEs?

If maintenance mode is activated, resources are moved to other hosts. After maintenance mode is deactivated, the previously moved resources are automatically migrated back to their previous host.

It is slightly different with Ceph OSDs, though: you do not want Ceph to rebuild the OSDs' data on other nodes just because a node is in maintenance.
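For the Ceph side, a common approach is to set Ceph's `noout` flag for the duration of the maintenance, so OSDs on the node being serviced are not marked "out" and their data is not rebalanced onto the remaining nodes. A minimal Python sketch, assuming it runs on a cluster node where the `ceph` CLI and an admin keyring are available; the helper name `ceph_noout` and its injectable `run` parameter are illustrative only, not part of any Proxmox tooling:

```python
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, Sequence

Runner = Callable[[Sequence[str]], None]


def _run(cmd: Sequence[str]) -> None:
    # Raises CalledProcessError if the command exits with a non-zero status.
    subprocess.run(list(cmd), check=True)


@contextmanager
def ceph_noout(run: Runner = _run) -> Iterator[None]:
    """Keep Ceph from marking OSDs 'out' (and rebalancing) while the body runs."""
    run(["ceph", "osd", "set", "noout"])
    try:
        yield
    finally:
        # Always clear the flag again, even if the maintenance work raised.
        run(["ceph", "osd", "unset", "noout"])
```

Wrap the node's maintenance steps in `with ceph_noout(): ...`; the `finally` clause is there so a failed step does not leave the cluster with `noout` set indefinitely.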

Thank you. So what would you do with the hypervisor in a maintenance mode situation?

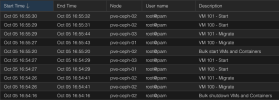

As I wrote, the VMs are already taken care of with HA, the migration part is already implemented and works as it should on reboot / shutdown including failback as you can see here:

This is a CEPH test cluster with three nodes and two VMs. Both VMs were on pve-ceph-02 and it got rebootet. The VMs were automatically migrated, one on each other node and as the rebooted node got up again, the VMs are both migrated back.


What if you want to test a different kernel or change other settings that require a few reboots? Maintenance mode is an easy way to prevent HA from migrating the VMs back.
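The difference between plain HA failback and maintenance mode can be illustrated with a toy placement model. This is a deliberately simplified sketch, not the algorithm `pve-ha-manager` actually uses: each VM has a "home" node, returns to it whenever that node is usable, and otherwise moves to the least-loaded usable node. The node names `pve1`-`pve3` and VM names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ToyCluster:
    """Toy HA placement model for illustration only; not pve-ha-manager's logic."""

    nodes: List[str]
    home: Dict[str, str]  # VM -> node it normally runs on
    placement: Dict[str, str] = field(default_factory=dict)
    down: Set[str] = field(default_factory=set)
    maintenance: Set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        if not self.placement:
            self.placement = dict(self.home)

    def _usable(self, node: str) -> bool:
        # A node in maintenance counts as unusable even while it is up.
        return node not in self.down and node not in self.maintenance

    def _load(self, node: str) -> int:
        return sum(1 for n in self.placement.values() if n == node)

    def _reconcile(self) -> None:
        for vm in sorted(self.placement):
            if self._usable(self.home[vm]):
                # Failback: return to the home node as soon as it is usable.
                self.placement[vm] = self.home[vm]
            elif not self._usable(self.placement[vm]):
                # Evacuate to the least-loaded usable node (ties: list order).
                candidates = [n for n in self.nodes if self._usable(n)]
                self.placement[vm] = min(candidates, key=self._load)

    def set_down(self, node: str, is_down: bool) -> None:
        if is_down:
            self.down.add(node)
        else:
            self.down.discard(node)
        self._reconcile()

    def set_maintenance(self, node: str, enabled: bool) -> None:
        if enabled:
            self.maintenance.add(node)
        else:
            self.maintenance.discard(node)
        self._reconcile()
```

With only `set_down`, the two VMs fail back as soon as the rebooted node returns. With `set_maintenance(node, True)` in effect, repeated `set_down(node, True)` / `set_down(node, False)` cycles leave them on the other nodes until maintenance is disabled, which is the kernel-testing case described above.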


That is a good point. Thank you for the clarification. Until we have a UI button for this, the CLI option may be sufficient.

As of Proxmox 8.3.1, the UI shows which nodes are in maintenance mode; however, you still need to use the command line to move them in and out of maintenance mode.
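On the command line, node maintenance is toggled with `ha-manager crm-command node-maintenance enable <node>` and `... disable <node>`. A small Python wrapper, sketched under the assumption that it runs as root on a cluster node; the function names are hypothetical, and it defaults to a dry run that only prints the command:

```python
import subprocess
from typing import List


def node_maintenance_cmd(node: str, enable: bool) -> List[str]:
    """Build the ha-manager command that toggles HA maintenance mode for a node."""
    if not node or node.startswith("-"):
        # Guard against empty names or values that would be parsed as options.
        raise ValueError(f"invalid node name: {node!r}")
    action = "enable" if enable else "disable"
    return ["ha-manager", "crm-command", "node-maintenance", action, node]


def set_node_maintenance(node: str, enable: bool, dry_run: bool = True) -> List[str]:
    """Print (dry run) or execute the command; returns the argv that was used."""
    cmd = node_maintenance_cmd(node, enable)
    if dry_run:
        print(" ".join(cmd))
    else:
        # Raises CalledProcessError on a non-zero exit status.
        subprocess.run(cmd, check=True)
    return cmd
```

For example, `set_node_maintenance("pve1", True, dry_run=False)` requests maintenance for a node named `pve1`; `ha-manager status` can then be used to check the node's state.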

I just found this while searching Google. Has such a feature actually been added to the GUI by any chance? It would also be nice to be able to do this at the VM level, so that you can shut down a VM and HA would ignore it.

Hmm, not sure what exactly you mean here. If you shut down a guest via the Proxmox VE tooling, the HA state will change accordingly. If you set the HA state of that guest to "ignored", it will be as if it wasn't configured as HA.
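Setting a guest to "ignored" is a single `ha-manager set` call on its HA resource ID (`vm:<vmid>` for a VM, `ct:<vmid>` for a container). A minimal Python helper that builds that call, assuming the state names accepted by `ha-manager set --state` (`started`, `stopped`, `enabled`, `disabled`, `ignored`); the helper name is illustrative:

```python
from typing import List

# Requested states accepted by `ha-manager set <sid> --state <state>`.
HA_STATES = {"started", "stopped", "enabled", "disabled", "ignored"}
RESOURCE_KINDS = {"vm", "ct"}


def ha_set_state_cmd(vmid: int, state: str, kind: str = "vm") -> List[str]:
    """Build the argv that sets the requested HA state of one guest."""
    if state not in HA_STATES:
        raise ValueError(f"unknown HA state: {state!r}")
    if kind not in RESOURCE_KINDS:
        raise ValueError(f"unknown resource kind: {kind!r}")
    return ["ha-manager", "set", f"{kind}:{vmid}", "--state", state]
```

Setting the state back to `started` hands the guest to HA again.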


Oh, I see. I just found that option; yes, I guess that can accomplish the same thing.

My current practice is to set any VMs I will keep running during maintenance to "ignored" in the HA manager, and then I stop any VMs that will not be kept running. I then put all the nodes into maintenance mode and manually move the running VMs as needed while completing the maintenance. Once that is all done and the nodes are back to normal, I make sure maintenance mode is off on all nodes and set those running VMs back to "started" in the HA manager, which then moves them to the nodes they normally run on. Finally, I manually start all other VMs and make sure everything comes up without issue.
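The procedure above can be written down as an ordered command plan. This is a sketch under stated assumptions: it uses `qm shutdown`/`qm start` for the VMs that are stopped (one possible way to "stop" them), leaves the manual migrations out, and only generates commands rather than running them. The function name and the VM IDs/node names in the example are hypothetical.

```python
from typing import Iterable, List


def maintenance_plan(nodes: Iterable[str], keep_running: Iterable[int],
                     stop: Iterable[int]) -> List[List[str]]:
    """Return the commands for the maintenance procedure, in order."""
    nodes, keep, stop = list(nodes), list(keep_running), list(stop)
    plan: List[List[str]] = []
    # 1. Take the VMs that stay up out of HA control.
    plan += [["ha-manager", "set", f"vm:{v}", "--state", "ignored"] for v in keep]
    # 2. Shut down the VMs that will not be kept running.
    plan += [["qm", "shutdown", str(v)] for v in stop]
    # 3. Put every node into HA maintenance mode.
    plan += [["ha-manager", "crm-command", "node-maintenance", "enable", n] for n in nodes]
    # (Manual work happens here, e.g. moving running VMs with `qm migrate`.)
    # 4. Take every node back out of maintenance mode.
    plan += [["ha-manager", "crm-command", "node-maintenance", "disable", n] for n in nodes]
    # 5. Hand the running VMs back to HA, which moves them to their usual nodes.
    plan += [["ha-manager", "set", f"vm:{v}", "--state", "started"] for v in keep]
    # 6. Start the remaining VMs again.
    plan += [["qm", "start", str(v)] for v in stop]
    return plan
```

Printing the plan with `for cmd in maintenance_plan(["pve1", "pve2"], [100], [200, 201]): print(shlex.join(cmd))` gives a checklist to review before running anything.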

Hi,

I could not help but want to shoot a question to anybody knowledgeable. This thread seems like the best place to do it, so without further ado...

I am looking for the ability to implement my own maintenance mode hooks that would work something like this:

1. The operator triggers **maintenance mode** through the UI.

2a. I specify somewhere under `/etc/pve/` a custom hook path with my own script to execute, identical to how the hook file option in `vzdump` works.

2b. At a minimum, my hook script needs to be notified of these conditions: pre-(maintenance), post-(maintenance), and **target**. Said *target* can be one of the following: `proxmox || pve-ha || pbs`.

2c. The `pve-ha` target would correspond to executing any of the `pve-ha-crm` commands related to maintenance mode.

2d. The `pbs` target would align with the maintenance mode option that is part of every datastore you create.

2e. The `proxmox` target would align with any other method of toggling maintenance mode? I am assuming that the system itself can trigger such an event, and perhaps this target would be best aligned with such use?
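No such maintenance hook exists in the thread's discussion; the following is only a sketch of what a hook script for the proposed interface might look like. It is modeled loosely on `vzdump` hook scripts, which receive the phase as their first argument. The argument layout `<phase> <target>`, the phase names, and the handler table are all hypothetical.

```python
import sys
from typing import Callable, Dict, List, Tuple

PHASES = ("pre-maintenance", "post-maintenance")
TARGETS = ("proxmox", "pve-ha", "pbs")

Handler = Callable[[], str]

# Hypothetical handlers: replace the bodies with the real actions you need.
HANDLERS: Dict[Tuple[str, str], Handler] = {
    ("pre-maintenance", "pbs"): lambda: "would disable the PBS-backed storage",
    ("post-maintenance", "pbs"): lambda: "would re-enable the PBS-backed storage",
}


def dispatch(argv: List[str]) -> str:
    """Validate '<phase> <target>' and run the matching handler, if any."""
    if len(argv) != 2:
        raise SystemExit("usage: hook <phase> <target>")
    phase, target = argv
    if phase not in PHASES or target not in TARGETS:
        raise SystemExit(f"unknown phase/target: {phase} {target}")
    handler = HANDLERS.get((phase, target))
    return handler() if handler else f"nothing to do for {phase} {target}"


if __name__ == "__main__":
    print(dispatch(sys.argv[1:]))
```

Keeping the dispatch in a lookup table means unknown combinations are rejected loudly while known-but-unhandled ones are a harmless no-op.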

For instance, I would like to execute a task when the `pbs` subsystem is in the **offline** maintenance mode. That task would set the `pve-storage` target for my datastore to *disabled*, so that everything that depends on this store can fail (more) gracefully or, as my ultimate goal, not even try to run to begin with. I have already confirmed that one can indeed do this by editing `/etc/pve/storage.cfg` and adding the line `disable` under the storage entry you wish to disable. Another important task that comes to mind would be disabling the NFS storage I have provisioned when the NAS VM is offline or otherwise unavailable.

I have found that these two simple tasks drastically reduce the time it takes to shut down the system. Otherwise, the shutdown stalls waiting for an event that will never come.
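The `storage.cfg` edit described above can be sketched as a pure text transformation. This assumes the usual layout of `type: id` section headers followed by indented option lines; the function name is hypothetical, and it only returns the new text. In practice, `pvesm set <storage> --disable 1` (and `--disable 0`) toggles the same flag without hand-editing the file.

```python
import re
from typing import List

# Section headers look like "pbs: backup-store"; option lines are indented.
_HEADER = re.compile(r"^(\S+):\s+(\S+)\s*$")


def set_storage_disabled(cfg: str, storage_id: str, disabled: bool = True) -> str:
    """Return cfg with the 'disable' flag set or cleared for one storage entry."""
    out: List[str] = []
    in_target = False
    found = False
    for line in cfg.splitlines():
        header = _HEADER.match(line)
        if header:
            in_target = header.group(2) == storage_id
            found = found or in_target
            out.append(line)
            if in_target and disabled:
                # Put the flag directly under the matching section header.
                out.append("\tdisable")
            continue
        if in_target and line.strip() == "disable":
            continue  # drop any existing flag so the edit is idempotent
        out.append(line)
    if not found:
        raise KeyError(f"storage {storage_id!r} not found")
    return "\n".join(out) + "\n"
```

Because any existing `disable` line in the target section is dropped before the flag is re-added, calling the function twice gives the same result, and `disabled=False` restores the original entry.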

My apologies for the messy rough draft above, but I do think it gets my point across. This is the first proposal for this that I have seen. Thank you for any feedback you can provide to me and others.

Cheers,

Jeff


@i8degrees I think this would best be placed as a feature request in our bugtracker: https://bugzilla.proxmox.com

Feature requests in the forum can easily be forgotten or overlooked.


Thank you for your response. I shall try this.

Cheers