I currently run three Proxmox nodes in a cluster: pve1, pve2 and pve3. One of these nodes, pve1, has been continuously causing issues for me.

The most relevant specs for pve1 are

- CPU: AMD Ryzen 7950X

- MEM: 2x 48 GB DDR5 (Corsair Vengeance DDR5-5600 BK C40 DC, datasheet available here)

- MOBO: ASRock B650M Pro RS WiFi (datasheet available here)

Even though this looked very wrong, the vms in question still seemed to function as they should as they were still fully responsive to ssh & web queries (for the ones running webservers) so I didn't think much of it. After a simple reboot the problem "went away" and everything was back to normal.

After a few days post-reboot, this time the entire node went down and became fully unreachable. It was still "on" in sort of a zombie mode where I could see it being connected via the router, the computer in question was on, but the node would not respond to pings, ssh requests and all of its vms were shut down; the only way to reboot it was by doing a physical hard shut off.

The first thing I looked into was if I had faulty ram, I was using the newest high capacity DDR5 ram sticks after all.

- There were a total of 3 issues that popped up, two of them on test 9

- The memory was running at its advertised speed of 5600MT/s, which upon further investigation is not supported by the Ryzen 7590x which only supports up to 5200MT/s

- My BIOS was "severely" updated, including an update specifically stating support for high capacity 48gb memory sticks

all of these factors contributed to the initial test failing.

This second memory test ended up succeeding without any errors, implicating that the steps I had taken after the initial test resolved the issue.

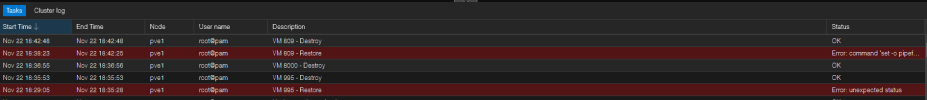

However, as you might have guessed this isn't the end of it. A few days after rebooting the node and it operating successfully, I attempted to restore a 5gb vm backup located on the "Backups" hdd located on pve1 which then seemed to do absolutely nothing for 15 minutes.

Shortly after stopping the backup as it was doing nothing, I deleted the server that had been incompletely created from said backup and re attempted the restoration, the result this time was that the entire node got the gray questionmark again, this time implying something else must be the problem and that my theory of the memory being the cause was incorrect.

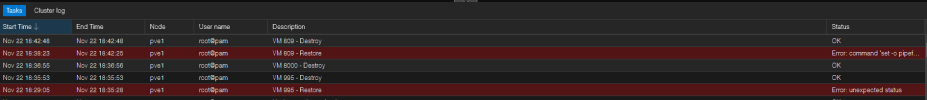

The tasks log displays both my attempts at backup restoration

A

The hdd_data volume seems to be locked.

Running a

Running the

The

Link to the output on pastebin

[PART 1: I aim to give as much information as possible about the steps I took and my investigation]

The most relevant specs for pve1 are:

- CPU: AMD Ryzen 7950X

- MEM: 2x 48 GB DDR5 (Corsair Vengeance DDR5-5600 BK C40 DC, datasheet available here)

- MOBO: ASRock B650M Pro RS WiFi (datasheet available here)

Introduction

After creating this node, I noticed that after a few days of runtime all of its VMs, containers and storages would eventually show a question mark, and the usage graphs for everything were cut off at the time of the event.

Even though this looked very wrong, the VMs in question still seemed to function as they should: they remained fully responsive to SSH and web requests (for the ones running web servers), so I didn't think much of it. After a simple reboot the problem "went away" and everything was back to normal.

A few days after the reboot, the entire node went down and became fully unreachable. It was still "on" in a sort of zombie mode: the router still showed it as connected and the machine itself was powered on, but the node did not respond to pings or SSH requests, and all of its VMs were shut down. The only way to reboot it was a physical hard power-off.

Initial analysis

After the second incident on this same node I could no longer write it off as a coincidence and began investigating, only to find out that this seems to be a rather "common" problem.

The first thing I looked into was whether I had faulty RAM; I was using the newest high-capacity DDR5 sticks, after all.

Memory test 1

When I first investigated the memory by running memtest86 over the span of about 8 hours, I noted several things:

- There were a total of 3 errors, two of them on test 9.

- The memory was running at its advertised speed of 5600 MT/s which, as I found on further investigation, is not supported by the Ryzen 7950X; it only officially supports up to 5200 MT/s.

- My BIOS was "severely" outdated and was missing an update specifically adding support for high-capacity 48 GB memory sticks.

All of these factors contributed to the initial test failing.

Memory test 2

As the first test had failed, and many of the problems it surfaced came down to my own user error, I updated the BIOS to a version that officially supports high-capacity memory and lowered the memory speed to 4800 MT/s, giving me some headroom below the 5200 MT/s that my processor's datasheet states as officially supported.

This second memory test completed without any errors, indicating that the steps I had taken after the initial test resolved the issue.
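To confirm from within Proxmox that the lowered speed actually took effect, the configured speed reported by the firmware can be compared against the CPU limit. This is a minimal sketch, assuming `dmidecode` is installed and run as root; `check_mem_speed` is a hypothetical helper name, and its 5200 default is simply the MT/s limit quoted above for the Ryzen 7950X:

```shell
#!/usr/bin/env bash
# Hypothetical helper: flag DIMMs whose configured speed exceeds a limit.
# Reads `dmidecode --type 17` output on stdin. The 5200 default is the
# MT/s limit quoted above for the Ryzen 7950X; pass another value if needed.
check_mem_speed() {
  local max="${1:-5200}"
  awk -F': ' -v max="$max" '
    # Newer dmidecode prints "Configured Memory Speed",
    # older versions print "Configured Clock Speed".
    /Configured (Memory|Clock) Speed/ {
      if ($2 !~ /^[0-9]/) { print $2, "(no value, empty slot?)"; next }
      split($2, a, " ")              # a[1] = number, a[2] = unit
      status = (a[1] + 0 > max + 0) ? "ABOVE LIMIT" : "ok"
      print $2, status
    }'
}

# On the node, as root:
#   dmidecode --type 17 | check_mem_speed 5200
```

The plain "Speed" line in the same output is the module's rated speed (5600 MT/s for these sticks), which is why only the "Configured" line is checked.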

The second incident

After fixing the memory issues, which were pretty major, I believed they had been the root cause of both previous incidents and moved on.

However, as you might have guessed, this isn't the end of it. A few days after rebooting the node, with it operating normally, I attempted to restore a 5 GB VM backup stored on the "Backups" HDD attached to pve1, and the restore then seemed to do absolutely nothing for 15 minutes.

Shortly after stopping the restore, since it was doing nothing, I deleted the VM that had been partially created from that backup and reattempted the restoration. This time the entire node got the gray question mark again, implying that something else must be the problem and that my theory of the memory being the cause was incorrect.

Subsequent investigation

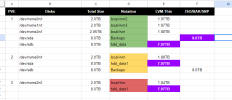

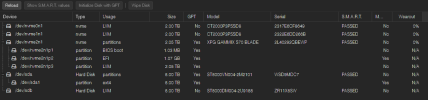

Immediately after the entire node went "gray ?", with the memory issues already resolved, I began investigating. Here is the data:

The tasks log displays both of my attempts at backup restoration.

A

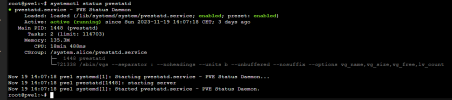

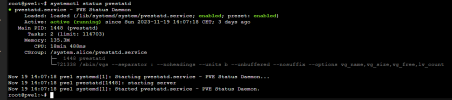

systemctl status pvestatd command indicated no errors

The hdd_data volume seems to be locked.

```
root@pve1:~# ls /var/lock/lvm
P_global V_hdd_data
```

Running `systemctl restart pvestatd` removed the question mark from the VMs themselves, but the storages and graphs were still down.
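The `ls` output only shows that the lock files exist; a leftover lock file on its own may not mean a lock is actually held. One way to check is to ask which processes still have each file open. This is a minimal sketch, assuming `fuser` (from psmisc) is available on the node; `lock_holders` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each lock file in a directory followed by the
# PIDs (if any) that currently have it open. Assumes psmisc's `fuser`.
lock_holders() {
  local dir="${1:-/var/lock/lvm}" f
  for f in "$dir"/*; do
    [ -e "$f" ] || continue            # glob matched nothing
    printf '%s:' "$(basename "$f")"
    # fuser prints only PIDs to stdout and everything else to stderr;
    # it exits non-zero when no process is using the file.
    fuser "$f" 2>/dev/null || true
    echo
  done
}

# On the node, as root:
#   lock_holders
# then look at any PID it prints, e.g.:
#   ps -o pid,stat,etime,args -p <PID>
```

An empty entry such as `V_hdd_data:` would suggest the file is only a leftover, while a PID next to it points at the process still holding the volume group lock.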

Running `pvesm status` froze completely, and shortly afterwards the VMs went back to the question mark state.
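A command that freezes the way `pvesm status` did can be stuck in uninterruptible sleep (process state `D`), usually while waiting on storage I/O. That is an assumption here, not something the data above confirms, but it is easy to check with a small filter over `ps` output. This is a minimal sketch; `d_state` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: keep the ps header line plus every process whose
# STAT column starts with "D" (uninterruptible sleep, typically waiting on I/O).
d_state() {
  awk 'NR == 1 || $2 ~ /^D/'
}

# On the node, e.g. while `pvesm status` is hanging in another shell:
#   ps -eo pid,stat,wchan:32,args | d_state
# As root, /proc/<PID>/stack may then show where in the kernel a PID is stuck.
```

If the stuck processes turn out to be LVM commands or I/O against the "Backups" HDD, that would tie the freeze to the storage rather than to `pvestatd` itself.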

The

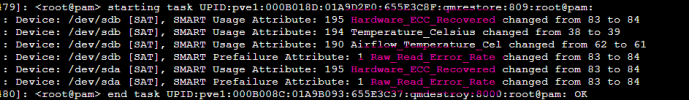

journalctl -r command returned the following output, the initial crash occurred at around 18:39 based on where the graphs went down:Link to the output on pastebin
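To narrow the journal down to the period around the crash instead of scrolling back through `journalctl -r`, a time window can be passed to `journalctl --since`/`--until`. This is a minimal sketch, assuming GNU `date` (as on a Debian-based Proxmox install); `crash_window` and its 10-minutes-before / 5-minutes-after defaults are hypothetical choices:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a start and end timestamp (one per line) around
# an event time. Assumes GNU date (-d with free-form dates and @epoch input).
crash_window() {
  local event before="${2:-600}" after="${3:-300}"   # seconds before/after
  event=$(date -d "$1" +%s) || return 1
  date -d "@$((event - before))" '+%Y-%m-%d %H:%M:%S'
  date -d "@$((event + after))" '+%Y-%m-%d %H:%M:%S'
}

# On the node, for the 18:39 crash (a bare time is interpreted as today):
#   mapfile -t win < <(crash_window "18:39")
#   journalctl --since "${win[0]}" --until "${win[1]}" -p warning --no-pager
```

If the crash happened on an earlier day, pass a full date and time (YYYY-MM-DD HH:MM) instead of just the time; `-p warning` limits the output to warnings and more severe messages.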
