Hello, I have a 4-node Proxmox 6 cluster that I am having issues with. I recently had to change the IP addresses and hostnames of two of the nodes in my cluster, and have managed to get that done. However, one node (not one of the two that were changed) is now giving me trouble.

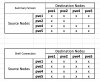

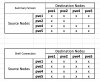

The /etc/pve directory on that node does not allow anything to be saved, and it is also not receiving updates through Corosync. When I open a file from that folder, I get either an [ Error reading lock file ./.corosync.conf.swp: Not enough data read ] error or, at other times, an [ Error reading lock file ./.corosync.conf.swp: Unable to write swap file ] error.

If I run the commands to stop the pve-cluster and corosync processes and then use the local pve filesystem, I get the same errors. On the occasions when it does load a file's contents, I can see that the configuration has not been updated to match the new one that all the other nodes have.

This is the corosync.conf file from a healthy node:

Code:

    logging {
      debug: off
      to_syslog: yes
    }

    nodelist {
      node {
        name: pve1
        nodeid: 1
        quorum_votes: 1
        ring0_addr: 10.0.0.4
      }
      node {
        name: pve2
        nodeid: 2
        quorum_votes: 1
        ring0_addr: 10.0.0.5
      }
      node {
        name: pve4
        nodeid: 3
        quorum_votes: 1
        ring0_addr: 10.0.0.7
      }
      node {
        name: pve5
        nodeid: 4
        quorum_votes: 1
        ring0_addr: 10.0.0.8
      }
    }

    quorum {
      provider: corosync_votequorum
    }

    totem {
      cluster_name: Cluster-01
      config_version: 12
      interface {
        bindnetaddr: 10.0.0.4
        ringnumber: 0
      }
      ip_version: ipv4
      secauth: on
      version: 2
    }

I can confirm that the configuration file is identical on the other three nodes. Additionally, the node with this issue also has trouble connecting to other nodes in the cluster. All nodes can access the shell of the other nodes; however, the problem node can only open the Summary and other node settings pages for itself and one other node, as can be seen in the screenshot of a table I put together. The node with the issue is pve2.
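In case it helps anyone reproduce the "same on every node" check, here is a rough Python sketch of how two copies of corosync.conf could be compared, for example the healthy one above against whatever pve2 still has. It is not part of Proxmox or corosync; the helper names `parse_corosync`, `summarize`, and `compare` are made up for this post, and the parser only assumes the simple `name {`, `key: value`, `}` layout seen in the file above.

```python
def parse_corosync(text):
    """Parse corosync.conf-style text into nested dicts.

    Assumption: only `name {`, `key: value`, `}` lines and `#` comments occur.
    Every section is stored as a list, so repeated sections (`node`) are kept.
    """
    root = {}
    stack = [root]
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.endswith("{"):
            child = {}
            stack[-1].setdefault(line[:-1].strip(), []).append(child)
            stack.append(child)
        elif line == "}":
            if len(stack) == 1:
                raise ValueError("unbalanced '}'")
            stack.pop()
        else:
            # Split on the first colon only, so IPv6 addresses stay intact.
            key, sep, value = line.partition(":")
            if not sep:
                raise ValueError(f"cannot parse line: {raw!r}")
            stack[-1][key.strip()] = value.strip()
    if len(stack) != 1:
        raise ValueError("unclosed section")
    return root


def summarize(text):
    """Return (config_version, {node name: ring0_addr}) for one config."""
    conf = parse_corosync(text)
    version = int(conf["totem"][0]["config_version"])
    nodes = {
        node["name"]: node["ring0_addr"]
        for nodelist in conf.get("nodelist", [])
        for node in nodelist.get("node", [])
    }
    return version, nodes


def compare(healthy_text, suspect_text):
    """List the differences in config_version and node addresses."""
    h_ver, h_nodes = summarize(healthy_text)
    s_ver, s_nodes = summarize(suspect_text)
    problems = []
    if s_ver != h_ver:
        problems.append(f"config_version: suspect={s_ver}, healthy={h_ver}")
    for name in sorted(set(h_nodes) | set(s_nodes)):
        if h_nodes.get(name) != s_nodes.get(name):
            problems.append(
                f"{name}: suspect={s_nodes.get(name)}, healthy={h_nodes.get(name)}"
            )
    return problems
```

Calling `compare(open("healthy.conf").read(), open("pve2.conf").read())` on two saved copies (the file names are placeholders) returns an empty list when the version and node addresses match. A lower `config_version` on pve2 would be consistent with it never having received the updated file.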