A question I have had for a while and never got around to asking:

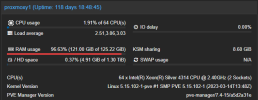

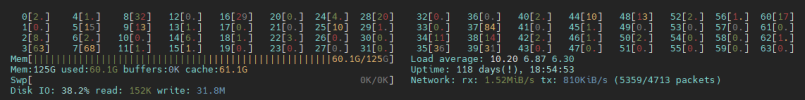

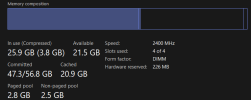

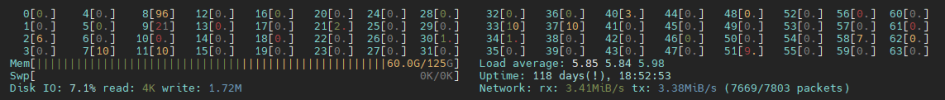

I have many Proxmox clusters, usually with 3 nodes each (Xeon, 128 GB RAM, SSD storage). What I don't really have a feel for is how many concurrent VMs/containers those nodes can handle.

As an example, I migrated 6 VMs, each with between 4 and 8 GB of RAM, from a KVM server which only had 64 GB of RAM (which was never fully used), and on Proxmox I see the RAM pegged at 96% after a few weeks of running. There's no performance issue, but I expected that node to be able to handle double that workload. If I spin up a new VM and give it 16 GB of RAM, the memory usage of the node stays at around 96%, and if I then destroy that VM it drops a bit before coming back. I assume, then, that it's basically caching with all available unused RAM, which is a good thing.
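A rough sanity check on those numbers, as a minimal Python sketch. It only uses the figures quoted above (6 VMs, 4 to 8 GB each, a 128 GB node at 96%); the function and constant names are made up for illustration, and per-VM overhead is ignored.

```python
# Figures taken from the post; everything else here is illustrative.
NODE_RAM_GB = 128
VM_COUNT = 6
VM_RAM_MIN_GB, VM_RAM_MAX_GB = 4, 8
REPORTED_USAGE = 0.96  # node memory usage as reported


def guest_allocation_bounds(count, per_vm_min_gb, per_vm_max_gb):
    """Return the (lowest, highest) total RAM the guests could be assigned."""
    return count * per_vm_min_gb, count * per_vm_max_gb


low_gb, high_gb = guest_allocation_bounds(VM_COUNT, VM_RAM_MIN_GB, VM_RAM_MAX_GB)
reported_used_gb = NODE_RAM_GB * REPORTED_USAGE
# Memory in use that the guest allocations alone cannot account for,
# even if every VM had the full 8 GB.
unexplained_gb = reported_used_gb - high_gb

print(f"Guests are assigned between {low_gb} and {high_gb} GB in total")
print(f"Reported usage is about {reported_used_gb:.1f} GB")
print(f"At least {unexplained_gb:.1f} GB is not guest allocation")
```

Even in the worst case the guests account for well under half of the node, so most of the reported usage has to come from something other than the RAM assigned to the VMs.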

If I run `free`, it seems to show that it really is using all that RAM, with what it thinks is a tiny buffer.

Code:

```
root@proxmoxy3:~# free -h
               total        used        free      shared  buff/cache   available
Mem:           125Gi       119Gi       4.1Gi        66Mi       1.1Gi       5.3Gi
Swap:             0B          0B          0B
```
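To put percentages on that output, here is a small Python sketch that parses the `Mem:` line exactly as pasted above. The helper names are hypothetical, and it assumes the binary suffixes (`Ki`, `Mi`, `Gi`) that `free -h` printed here.

```python
import re

# Binary unit suffixes as they appear in the pasted output.
UNITS = {"B": 1, "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4}
COLUMNS = ["total", "used", "free", "shared", "buff_cache", "available"]


def parse_size(text):
    """Convert a value such as '4.1Gi' or '0B' into bytes."""
    match = re.fullmatch(r"([0-9.]+)(B|Ki|Mi|Gi|Ti)", text)
    if match is None:
        raise ValueError(f"unrecognised size: {text!r}")
    return float(match.group(1)) * UNITS[match.group(2)]


def parse_mem_line(line):
    """Map the columns of a 'Mem:' line to byte counts."""
    fields = line.split()[1:]  # drop the leading 'Mem:' label
    return dict(zip(COLUMNS, (parse_size(field) for field in fields)))


mem = parse_mem_line("Mem: 125Gi 119Gi 4.1Gi 66Mi 1.1Gi 5.3Gi")
used_pct = 100 * mem["used"] / mem["total"]
cache_pct = 100 * mem["buff_cache"] / mem["total"]
available_pct = 100 * mem["available"] / mem["total"]

print(f"used:       {used_pct:.1f}%")
print(f"buff/cache: {cache_pct:.1f}%")
print(f"available:  {available_pct:.1f}%")
```

On these figures `buff/cache` is under 1% of total memory, so `free` itself is not attributing the usage to page cache; it is counted under `used`.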

Of course, in the interest of HA, the next problem is that whatever is provisioned across 3 nodes needs to be able to run on 2 nodes, at least temporarily, otherwise HA can't work.
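That constraint can be turned into a per-node budget. The sketch below is plain arithmetic, not anything Proxmox computes: it assumes identical nodes and a workload that can be spread evenly over the survivors, and `host_reserve_gb` is a hypothetical allowance for the host itself.

```python
def max_safe_fraction(nodes, failures=1):
    """Fraction of each node that may be used if `failures` nodes can drop out."""
    if not 0 <= failures < nodes:
        raise ValueError("failures must be between 0 and nodes - 1")
    return (nodes - failures) / nodes


def per_node_budget_gb(node_ram_gb, nodes, failures=1, host_reserve_gb=0.0):
    """RAM per node that guests can use while still fitting on the survivors."""
    usable_gb = node_ram_gb - host_reserve_gb
    return usable_gb * max_safe_fraction(nodes, failures)


# 3 nodes with 128 GB each, tolerating the loss of one node.
budget = per_node_budget_gb(128, nodes=3)
print(f"Guest RAM budget per node: about {budget:.0f} GB")
```

For a 3-node cluster this works out to roughly two thirds of each node, before setting anything aside for the host.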

This node has twice as many CPUs and twice the memory and disk of the previous server, but I am not sure if I can actually add more workload to it.

Disk usage is moderate and CPU load is very low. Network IO is low, and replication is on its own 10 Gbit connection where 1 Gbit would have been ample.

So... I don't know how to read memory usage. All that said, I don't think I have ever tried to migrate everything or start lots of VMs and see what happens; it just tends to run them fine.

I've seen others running dozens of VMs.