Hi All,

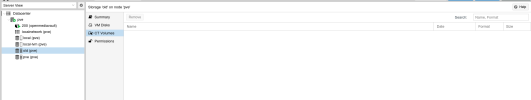

I've added an LVM volume group to my PVE, but in the web UI I don't see the VM disks inside it, even though I can see them with fdisk (output below).

What's the issue?

Thank you!

```
Disk /dev/mapper/pve--OLD--95683659-vm--110--disk--0: 100 GiB, 107374182400 bytes, 209715200 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 65536 bytes / 65536 bytes
Disklabel type: dos
Disk identifier: 0xb319051b

Device                                                Boot     Start       End   Sectors Size Id Type
/dev/mapper/pve--OLD--95683659-vm--110--disk--0-part1 *         2048 201328639 201326592  96G 83 Linux
/dev/mapper/pve--OLD--95683659-vm--110--disk--0-part2      201330686 209713151   8382466   4G  5 Extende
/dev/mapper/pve--OLD--95683659-vm--110--disk--0-part5      201330688 209713151   8382464   4G 82 Linux s
```
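For reference, the `/dev/mapper` name above encodes both the volume group and the logical volume: LVM joins the two names with a single hyphen and doubles any hyphen that appears inside either name. The minimal Python sketch below decodes such a name; the function `split_dm_name` is a hypothetical helper written for illustration, and it assumes a whole-disk name (partition suffixes such as `-part1` are not handled). Applied to the disk above, it suggests the VM disk is LV `vm-110-disk-0` in VG `pve-OLD-95683659`.

```python
from __future__ import annotations


def split_dm_name(dm_name: str) -> tuple[str, str]:
    """Split an LVM device-mapper name into (volume group, logical volume).

    Hypothetical helper. LVM builds the name as VG + "-" + LV and doubles
    every hyphen inside either name, so the separator is the first hyphen
    that is not part of a "--" pair.
    """
    i = 0
    while i < len(dm_name):
        if dm_name[i] == "-":
            if i + 1 < len(dm_name) and dm_name[i + 1] == "-":
                i += 2  # escaped hyphen ("--") belongs to a name; skip both
                continue
            vg, lv = dm_name[:i], dm_name[i + 1:]
            # Undo the escaping to recover the real VG and LV names
            return vg.replace("--", "-"), lv.replace("--", "-")
        i += 1
    raise ValueError(f"no VG/LV separator in {dm_name!r}")


if __name__ == "__main__":
    vg, lv = split_dm_name("pve--OLD--95683659-vm--110--disk--0")
    print(vg, lv)  # prints: pve-OLD-95683659 vm-110-disk-0
```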