No login possible after clustering nodes

- Thread starter j.io

Yes. You need quorum to log into the GUI. If you want to make modifications, one option is an external quorum device: https://pve.proxmox.com/wiki/Cluster_Manager#_corosync_external_vote_support

Thanks Matthias,

is this a bug or a feature? I am a private user. What if I have to remove one node from the cluster? Does the other node work as before?

Not sure if I would call it either. It's a consequence of how quorum works. When logging in, we lock a file.

However, without quorum, the cluster file system is not modifiable (to ensure there is no inconsistent state), so the locking fails.

SSH still works though.

If you remove the node, the cluster should become quorate again, so the login should work again.

There are a few forum threads on this topic, so I would agree that it could be communicated better to the user.

https://forum.proxmox.com/threads/p...into-web-manager-if-both-nodes-are-on.101613/

https://forum.proxmox.com/threads/i-can-not-able-to-login-gui-after-2nd-node-down.108820/

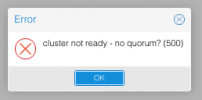

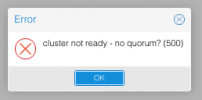

@Matthias. OK, now I made a mistake. I ran pvecm delnode <NAME> with running VMs on the machine. Obviously, I did not shut down the machine either. Strangely, on another node my backups do not work either now (even though I am only backing up to the local drive in the same physical machine). What can I do now to somehow fix this best?

Now this is what I get on any action I do ...

EDIT: OK, I could now restore my slave using this post: https://forum.proxmox.com/threads/proxmox-ve-6-removing-cluster-configuration.56259/#post-259203

The master node is still untouched, what do I have to do with it? Both nodes see each other in the GUI as offline. I read I have to remove a folder? But I had better wait for your input from now on ... luckily all data is backed up, but it is still annoying. However, it was my fault.

"What can I do now to somehow fix this best?"

It seems that is what you have already done: removing the second node from your cluster and resetting all corosync configuration there (and perhaps adding it again after that).

"I read I have to remove a folder?"

It sounds like there is still a leftover configuration folder for the other node on each node. However, to make sure, please first post the output of pvecm status and cat /etc/pve/corosync.conf.

"OK, I could now restore my slave using this post:"

Thinking in terms of slave and master is not useful when working with a Proxmox cluster. Each node should be able to do any management task.

Every node in a Proxmox cluster is assigned a certain number of votes (default 1). If the number of available votes is greater than 50% of the total votes in the cluster, the cluster is considered quorate and will be able to operate normally. Functionally, there are no "master" or "slave" nodes in Proxmox.
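To make the vote arithmetic concrete, here is a minimal Python sketch. It is not Proxmox code: the function names quorum_threshold and is_quorate are made up for illustration, and it only assumes the strict-majority rule described above. The external-vote case additionally assumes that the quorum device contributes exactly one vote.

```python
def quorum_threshold(expected_votes: int) -> int:
    """Smallest vote count that is a strict majority of expected_votes."""
    return expected_votes // 2 + 1


def is_quorate(available_votes: int, expected_votes: int) -> bool:
    """True if the available votes are more than 50% of the expected votes."""
    return available_votes >= quorum_threshold(expected_votes)


# Two-node cluster, one vote per node (the situation in this thread).
both_up = is_quorate(available_votes=2, expected_votes=2)
one_down = is_quorate(available_votes=1, expected_votes=2)

# Assumption: an external quorum device adds one vote, so 3 votes are expected.
one_down_with_external_vote = is_quorate(available_votes=2, expected_votes=3)

# After the second node has been removed, only one vote is expected.
single_node = is_quorate(available_votes=1, expected_votes=1)

print(both_up, one_down, one_down_with_external_vote, single_node)
```

With two nodes and one of them offline, the remaining node holds exactly 50% of the votes, which is not a majority. That is why the GUI login fails in that state and works again once the node is removed or an extra vote is available.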

Thank you for your answer.

pvecm status

Code:

Cluster information
-------------------
Name:             node1
Config Version:   2
Transport:        knet
Secure auth:      on

Quorum information
------------------
Date:             Mon Jul 4 18:02:49 2022
Quorum provider:  corosync_votequorum
Nodes:            1
Node ID:          0x00000001
Ring ID:          1.6a
Quorate:          Yes

Votequorum information
----------------------
Expected votes:   1
Highest expected: 1
Total votes:      1
Quorum:           1
Flags:            Quorate

Membership information
----------------------
    Nodeid      Votes Name
0x00000001          1 10.0.3.2 (local)

cat /etc/pve/corosync.conf

Code:

logging {
  debug: off
  to_syslog: yes
}

nodelist {
  node {
    name: node1
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 10.0.3.2
  }
  node {
    name: node2
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 10.0.3.3
  }
}

quorum {
  provider: corosync_votequorum
}

totem {
  cluster_name: node1
  config_version: 2
  interface {
    linknumber: 0
  }
  ip_version: ipv4-6
  link_mode: passive
  secauth: on
  version: 2
}

Are both outputs from node1? The output of both commands from node2 could also be useful here.

It is a bit weird though, now that I read it again.

1. In the very beginning, when you issued pvecm delnode for the first time, the node should have been removed from the corosync config already.

2. As far as I am aware, the GUI uses the corosync config to determine what to display. The commands from the post you linked above should have also removed the corosync config on node2, though. It seems like they accidentally synced again (?)

I'd advise you to try the steps in the post above again and separate the node without reinstalling.

When the nodes no longer see each other and are no longer visible in the config, it should be safe to remove the configuration folder in /etc/pve/nodes/<nodename>. After that you should be able to add the node to the cluster again, if you want to do so.

That worked perfectly. All solved. Thanks a ton. Great forum!
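For anyone landing here with the same symptoms: the oddity in the outputs above is that corosync.conf still lists node2 while pvecm status only shows node1 as a member. Below is a rough Python sketch for spotting that kind of mismatch. It is only an illustration under assumptions: the helper names parse_nodelist and parse_members are hypothetical, the parsing uses simple regular expressions rather than any Proxmox or corosync API, and it assumes the membership table shows the same addresses as ring0_addr (as it does in this thread).

```python
import re

# Sample data copied from the outputs posted in this thread.
COROSYNC_CONF = """
nodelist {
  node {
    name: node1
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 10.0.3.2
  }
  node {
    name: node2
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 10.0.3.3
  }
}
"""

PVECM_MEMBERSHIP = """
    Nodeid      Votes Name
0x00000001          1 10.0.3.2 (local)
"""


def parse_nodelist(conf_text: str) -> dict:
    """Return {node name: ring0_addr} for every 'node { ... }' block."""
    nodes = {}
    for block in re.findall(r"\bnode\s*\{([^{}]*)\}", conf_text):
        fields = dict(re.findall(r"(\w+)\s*:\s*(\S+)", block))
        if "name" in fields:
            nodes[fields["name"]] = fields.get("ring0_addr")
    return nodes


def parse_members(membership_text: str) -> set:
    """Return the set of names/addresses listed in the membership table."""
    pattern = r"^\s*0x[0-9a-fA-F]+\s+\d+\s+(\S+)"
    return set(re.findall(pattern, membership_text, flags=re.MULTILINE))


configured = parse_nodelist(COROSYNC_CONF)
members = parse_members(PVECM_MEMBERSHIP)

# Nodes that are still in the config but are not current cluster members.
missing = sorted(name for name, addr in configured.items() if addr not in members)
print("configured but not a member:", missing)
```

For the data in this thread the sketch reports node2, which matches the advice above to separate the node again and only then clean up its folder under /etc/pve/nodes/.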