Hi guys,

I ran into an issue when trying to set up a local network for my containers and VMs.

I set up the host network for outside internet access during install. That worked fine.

Then I added a local network according to this article: Proxmox Wiki

But my test container has no DNS available.

Pinging any IP works fine, but as soon as I try to resolve a domain it says:

Details:

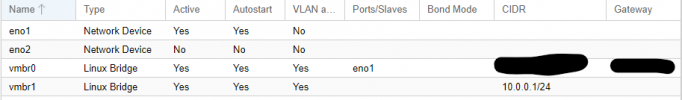

Interfaces Host:

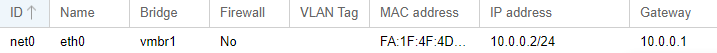

Interface Container:

Resolve Container:

Proxmox verison:


Code:

$ ping google.com

ping: google.com: Temporary failure in name resolution

Details:

Interfaces Host:

Code:

$ cat /etc/network/interfaces

# network interface settings; autogenerated

# Please do NOT modify this file directly, unless you know what

# you're doing.

#

# If you want to manage parts of the network configuration manually,

# please utilize the 'source' or 'source-directory' directives to do

# so.

# PVE will preserve these directives, but will NOT read its network

# configuration from sourced files, so do not attempt to move any of

# the PVE managed interfaces into external files!

auto lo

iface lo inet loopback

auto eno1

iface eno1 inet static

address 185.94.252.29/24

gateway 185.94.252.1

iface eno2 inet manual

auto vmbr0

iface vmbr0 inet static

address 10.10.10.1/24

bridge-ports none

bridge-stp off

bridge-fd 0

post-up echo 1 > /proc/sys/net/ipv4/ip_forward

post-up iptables -t nat -A POSTROUTING -s '10.10.10.0/24' -o eno1 -j MASQUERADE

post-down iptables -t nat -D POSTROUTING -s '10.10.10.0/24' -o eno1 -j MASQUERADE

post-up iptables -t raw -I PREROUTING -i fwbr+ -j CT --zone 1

post-down iptables -t raw -D PREROUTING -i fwbr+ -j CT --zone 1

Interface Container:

Code:

$ cat /etc/network/interfaces

auto lo

iface lo inet loopback

auto eth0

iface eth0 inet static

address 10.10.10.2/24

gateway 10.10.10.1

Resolve Container:

Code:

$ cat /etc/resolv.conf

# --- BEGIN PVE ---

search domain.internal

nameserver 8.8.8.8

# --- END PVE ---

Proxmox version:

Code:

proxmox-ve: 7.1-1 (running kernel: 5.13.19-2-pve)

pve-manager: 7.1-12 (running version: 7.1-12/b3c09de3)

pve-kernel-helper: 7.1-14

pve-kernel-5.13: 7.1-9

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-2-pve: 5.13.19-4

ceph-fuse: 15.2.15-pve1

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.22-pve2

libproxmox-acme-perl: 1.4.1

libproxmox-backup-qemu0: 1.2.0-1

libpve-access-control: 7.1-7

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.1-5

libpve-guest-common-perl: 4.1-1

libpve-http-server-perl: 4.1-1

libpve-storage-perl: 7.1-1

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.11-1

lxcfs: 4.0.11-pve1

novnc-pve: 1.3.0-2

proxmox-backup-client: 2.1.5-1

proxmox-backup-file-restore: 2.1.5-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.4-7

pve-cluster: 7.1-3

pve-container: 4.1-4

pve-docs: 7.1-2

pve-edk2-firmware: 3.20210831-2

pve-firewall: 4.2-5

pve-firmware: 3.3-6

pve-ha-manager: 3.3-3

pve-i18n: 2.6-2

pve-qemu-kvm: 6.1.1-2

pve-xtermjs: 4.16.0-1

qemu-server: 7.1-4

smartmontools: 7.2-1

spiceterm: 3.2-2

swtpm: 0.7.1~bpo11+1

vncterm: 1.7-1

zfsutils-linux: 2.1.4-pve1
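
To narrow down where resolution fails, the nameserver from the container's resolv.conf can be queried directly, bypassing the system resolver. This is only a rough sketch, not a confirmed fix: the helper names `list_nameservers` and `check_nameserver` and the `RUN_DNS_CHECK` switch are made up for illustration, and it assumes `dig` (package dnsutils on Debian) is installed inside the container.

```shell
#!/bin/sh
# Hypothetical helper: print each nameserver listed in a resolv.conf-style file.
# $1: path to the file (defaults to /etc/resolv.conf)
list_nameservers() {
    awk '$1 == "nameserver" { print $2 }' "${1:-/etc/resolv.conf}"
}

# Hypothetical helper: ask one nameserver directly for an A record.
# Assumes `dig` is available. Lines starting with ';' are dig's own
# status messages (e.g. timeouts), so they do not count as an answer.
# $1: nameserver IP, $2: name to resolve
check_nameserver() {
    if dig +short +time=2 +tries=1 "@$1" "$2" A 2>/dev/null | grep -q '^[^;]'; then
        echo "$1: answered for $2"
    else
        echo "$1: no answer for $2"
    fi
}

# Only touch the network when explicitly asked to, so the file can be sourced safely.
if [ "${RUN_DNS_CHECK:-0}" = "1" ]; then
    for ns in $(list_nameservers); do
        check_nameserver "$ns" google.com
    done
fi
```

Running it with `RUN_DNS_CHECK=1 sh check-dns.sh` (the file name is arbitrary) inside the container, and again on the host, would show whether 8.8.8.8 answers at all from the 10.10.10.0/24 side. If the host gets an answer and the container does not, that points at DNS traffic being dropped somewhere on the NAT path rather than at resolv.conf itself.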
