FIXED: See solution here: https://forum.proxmox.com/threads/n...ot-exist-lvs-data-corrupted.86664/post-380359

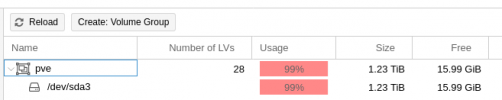

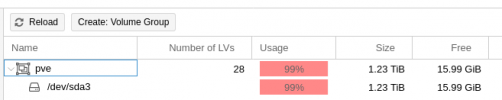

Hello all,

I had a UPS go bad and my Proxmox machine shut down uncleanly (power essentially just pulled). Now I've rebooted it and I can't start any Containers or Virtual Machines.

When I run something like

    sudo lxc-start -n 117 -F -l DEBUG -o /tmp/lxc-117.log

I get (some other INFO messages redacted to stay under the 15,000 word limit on posts):

    lxc-start 117 20210327214616.161 INFO conf - conf.c:run_script_argv:356 - Executing script "/usr/share/lxc/hooks/lxc-pve-prestart-hook" for container "117", config section "lxc"
    lxc-start 117 20210327214616.880 DEBUG conf - conf.c:run_buffer:326 - Script exec /usr/share/lxc/hooks/lxc-pve-prestart-hook 117 lxc pre-start with output: mount: special device /dev/pve/vm-117-disk-0 does not exist
    lxc-start 117 20210327214616.880 DEBUG conf - conf.c:run_buffer:326 - Script exec /usr/share/lxc/hooks/lxc-pve-prestart-hook 117 lxc pre-start with output: command 'mount /dev/pve/vm-117-disk-0 /var/lib/lxc/117/rootfs//' failed: exit code 32
    lxc-start 117 20210327214616.889 ERROR conf - conf.c:run_buffer:335 - Script exited with status 32
    lxc-start 117 20210327214616.889 ERROR start - start.c:lxc_init:861 - Failed to run lxc.hook.pre-start for container "117"
    lxc-start 117 20210327214616.889 ERROR start - start.c:__lxc_start:1944 - Failed to initialize container "117"
    lxc-start 117 20210327214616.889 ERROR lxc_start - tools/lxc_start.c:main:330 - The container failed to start
    lxc-start 117 20210327214616.889 ERROR lxc_start - tools/lxc_start.c:main:336 - Additional information can be obtained by setting the --logfile and --logpriority options
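For anyone reading along: the useful part of that log is the hook output buried in the DEBUG lines, not the ERROR lines. Below is a small sketch for pulling it out of the log file. The function name `lxc_hook_output` is my own invention, and it assumes the log keeps the "with output: ..." layout shown above.

```shell
#!/bin/sh
# lxc_hook_output (hypothetical helper): read an lxc-start log on stdin and
# print only the text that follows "with output: " on hook lines.
# Assumption: hook messages look like the DEBUG lines quoted in this post.
lxc_hook_output() {
    # -n suppresses default printing; p prints only lines where the
    # substitution matched, i.e. lines that contain hook output.
    sed -n 's/.* with output: //p'
}
```

It could be used as `lxc_hook_output < /tmp/lxc-117.log`, which for my log should leave just the two mount-related messages.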

I think the most relevant info is that

    special device /dev/pve/vm-117-disk-0 does not exist

After doing some Googling and reading on these forums I've also run

    lsblk -f

which gave

    NAME         FSTYPE      LABEL UUID                                   MOUNTPOINT
    sda
    ├─sda1
    ├─sda2       vfat              BF95-30FC
    └─sda3       LVM2_member       Ok85Dk-zsEx-oXEy-oOlv-Jqyk-Mokd-XPw0qv
      ├─pve-swap swap              fa815645-5d25-4930-bfc0-23f320e7b81e   [SWAP]
      └─pve-root ext4              97d9a641-b756-46c2-99ba-388648f793d8   /

and

    lvs -a

which gave

    LV              VG  Attr       LSize   Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
    data            pve twi---tz--   1.09t
    [data_tdata]    pve Twi-------   1.09t
    [data_tmeta]    pve ewi-------  11.36g
    [lvol0_pmspare] pve ewi-------  11.36g
    root            pve -wi-ao----  96.00g
    swap            pve -wi-ao----   8.00g
    vm-100-disk-0   pve Vwi---tz--   8.00g data
    vm-101-disk-0   pve Vwi---tz--   8.00g data
    vm-102-disk-0   pve Vwi---tz-- 128.00g data
    vm-103-disk-1   pve Vwi---tz--   8.00g data
    vm-104-disk-1   pve Vwi---tz--   8.00g data
    vm-105-disk-1   pve Vwi---tz-- 128.00g data
    vm-106-disk-0   pve Vwi---tz--   8.00g data
    vm-107-disk-1   pve Vwi---tz--   8.00g data
    vm-108-disk-1   pve Vwi---tz--  16.00g data
    vm-109-disk-0   pve Vwi---tz--   8.00g data
    vm-110-disk-1   pve Vwi---tz--   8.00g data
    vm-111-disk-1   pve Vwi---tz--   8.00g data
    vm-112-disk-1   pve Vwi---tz--   8.00g data
    vm-113-disk-0   pve Vwi---tz--  32.00g data
    vm-114-disk-0   pve Vwi---tz--   8.00g data
    vm-115-disk-0   pve Vwi---tz--   8.00g data
    vm-116-disk-0   pve Vwi---tz--   8.00g data
    vm-117-disk-0   pve Vwi---tz--  10.00g data
    vm-118-disk-0   pve Vwi---tz--   8.00g data
    vm-119-disk-1   pve Vwi---tz--   8.00g data
    vm-120-disk-0   pve Vwi---tz--   8.00g data
    vm-121-disk-0   pve Vwi---tz--   8.00g data
    vm-122-disk-0   pve Vwi---tz--   8.00g data
    vm-123-disk-0   pve Vwi---tz--   8.00g data
    vm-124-disk-0   pve Vwi---tz--  32.00g data

and

    vgs -a

which gave

    VG  #PV #LV #SN Attr   VSize VFree
    pve   1  28   0 wz--n- 1.23t 15.99g

I believe that it is telling/relevant that `lvs -a` essentially gives no information. Other folks' examples had lots of % usage data. Running `lvs -av` gives

    LV              VG  #Seg Attr       LSize   Maj Min KMaj KMin Pool Origin Data% Meta% Move Cpy%Sync Log Convert LV UUID                                LProfile
    data            pve    1 twi---tz--   1.09t  -1  -1   -1   -1                                                   AabVQ4-N9ne-c27m-G2Es-VKcq-5lIC-RP5tBE
    [data_tdata]    pve    1 Twi-------   1.09t  -1  -1   -1   -1                                                   IJmFPg-1djT-XdEZ-qvXC-FB1O-tGyr-bYxoAj
    [data_tmeta]    pve    1 ewi-------  11.36g  -1  -1   -1   -1                                                   xKoJ53-9iGB-6nm7-T06r-0CCe-SiIW-Gg3a9I
    [lvol0_pmspare] pve    1 ewi-------  11.36g  -1  -1   -1   -1                                                   lNZ7ft-PkgT-KsZt-l5cQ-BEtk-9tSz-V2AAp4
    root            pve    1 -wi-ao----  96.00g  -1  -1  253    1                                                   WtE4DK-KZdT-SKiW-Rqfa-UcgR-arIY-fn5JZN
    swap            pve    1 -wi-ao----   8.00g  -1  -1  253    0                                                   uOOvkv-ZVVw-s416-1OZY-8r4c-UssV-c7MYji
    vm-100-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              ru8g6E-MPXK-m9M0-KPI2-2wxV-hFV5-uaAH6H
    vm-101-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              sEhxiu-fFqt-JxQn-86By-lFCn-n182-QIIu3v
    vm-102-disk-0   pve    1 Vwi---tz-- 128.00g  -1  -1   -1   -1 data                                              EA4P3M-mf1Y-xyYj-7yrx-ZCrX-aEb1-n0MsBf
    vm-103-disk-1   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              6TdrnE-5ci4-wys0-NGqL-NL7c-mHc5-2P9pkG
    vm-104-disk-1   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              gCjlNm-ILBw-fr6D-Iel3-Q9ec-2RcZ-7x0Bfm
    vm-105-disk-1   pve    1 Vwi---tz-- 128.00g  -1  -1   -1   -1 data                                              E4i6xO-CtTz-Otul-EWTb-vaaY-1QOC-2gOyZI
    vm-106-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              xuODxA-XS6I-TuYJ-iwrp-67SB-f6kJ-P445Ev
    vm-107-disk-1   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              bA97Tm-bXbD-LgZ3-B8fa-suTw-KeHk-xHRl4Y
    vm-108-disk-1   pve    1 Vwi---tz--  16.00g  -1  -1   -1   -1 data                                              fAjAod-6x6g-xQ5S-Dd4b-BC10-isiw-cI1Nft
    vm-109-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              X7TrO5-Gq8D-0jLP-aJ8j-0LO6-0OV4-KcEFfF
    vm-110-disk-1   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              CjKN8o-0HIz-GxY0-ddL4-3Vuk-Qoqj-yB99Ag
    vm-111-disk-1   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              NL9d3o-Nmoh-zrjc-6uhU-5mW2-qCKS-vwa8RH
    vm-112-disk-1   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              0jywWb-gw2m-haW5-Lwz9-T03A-EkrL-o0r42b
    vm-113-disk-0   pve    1 Vwi---tz--  32.00g  -1  -1   -1   -1 data                                              jkgK6e-6hfO-jYNZ-jZi4-0kzE-kMwL-2j3A2i
    vm-114-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              rxHUOd-Fx1G-35wT-hkdT-xgF9-AjII-9bfdam
    vm-115-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              5URXuI-cj1Q-T0O3-rJMy-TTIg-rqPa-DB2BJr
    vm-116-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              J2BeJI-VcWi-Nwqk-ypNX-Zo2j-VcWr-RM0jAh
    vm-117-disk-0   pve    1 Vwi---tz--  10.00g  -1  -1   -1   -1 data                                              lbuRX1-O3Lf-Pexd-34fo-1sN0-Kjln-Vh6HZv
    vm-118-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              1yBvBG-v5v9-z8st-w6XQ-3vyv-jwz6-wMmMkB
    vm-119-disk-1   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              cVcTKP-sV6w-9m4J-QXMI-dg5I-xmk2-4SM6kO
    vm-120-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              PlD9qf-gz9W-mcm1-ecZh-xAh5-aFdT-65kQfo
    vm-121-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              dBnKj9-MQeV-2Q0i-Mmr7-KZFA-6NU6-t9LTtf
    vm-122-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              Z9DONH-G6TP-0TMd-3JcG-E0A4-4xov-5ViBOn
    vm-123-disk-0   pve    1 Vwi---tz--   8.00g  -1  -1   -1   -1 data                                              BYEMNb-dW4s-XHxv-dnkz-n2zG-3r2A-lSiOdv
    vm-124-disk-0   pve    1 Vwi---tz--  32.00g  -1  -1   -1   -1 data                                              lCVJRb-v7Nc-TRAa-5x41-qrtx-5YWW-3eG2qD
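A note on reading those Attr strings: as far as I understand the `lvs` man page, the fifth character is the state, and `a` means active. Only `root` and `swap` show an `a` there, which would explain why the thin pool and every `vm-*` volume have no Data%/Meta% figures and no `/dev/pve/...` device node. Here is a rough sketch for listing the inactive volumes. The function name `list_inactive_lvs` is made up, and it assumes the Attr string is the third column, as in plain `lvs -a` output (not `lvs -av`, where `#Seg` comes third).

```shell
#!/bin/sh
# list_inactive_lvs (hypothetical helper): read `lvs -a`-style rows on stdin
# (columns: LV VG Attr ...) and print the name of every LV whose Attr state
# character (position 5) is not "a" (active).
list_inactive_lvs() {
    awk '
        $1 == "LV" { next }                      # skip the header row
        NF >= 3 && length($3) == 10 {            # Attr strings are 10 chars here
            if (substr($3, 5, 1) != "a") print $1
        }
    '
}
```

Something like `lvs -a | list_inactive_lvs` should then print `data`, its hidden sub-volumes and all of the `vm-*` disks on my system, but not `root` or `swap`.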

And so I think perhaps the "LVM Metadata" is corrupted? Keep in mind, I'm amateur enough that I don't really know what that means yet. A lot of solutions suggest that the metadata partition may be out of space, but I'm not sure if this is my problem. I did find these blog posts about resizing/repairing a thin pool. Are they relevant?

- https://www.unixrealm.com/repair-a-thin-pool/

- https://charlmert.github.io/blog/2017/06/15/lvm-metadata-repair/
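From what I can gather from posts like these, the usual sequence is roughly: back up the VG metadata, try to activate the pool, and only if that fails run a thin-pool repair. To make sure I understand it before touching anything, here is a sketch that only prints the commands and runs nothing. The function name and the backup file path are my own placeholders, and I'm not claiming this is the fix from the linked solution post.

```shell
#!/bin/sh
# thin_pool_recovery_plan (hypothetical helper): PRINT, but do not run, a
# cautious command sequence for a thin pool that will not activate.
# Arguments: volume group (default "pve") and thin pool (default "data").
# Assumption: the backup file path below is just a placeholder.
thin_pool_recovery_plan() {
    vg=${1:-pve}
    pool=${2:-data}
    printf '%s\n' \
        "vgcfgbackup -f /root/${vg}-before-repair.vg ${vg}" \
        "lvchange -ay ${vg}/${pool}" \
        "lvconvert --repair ${vg}/${pool}" \
        "lvchange -ay ${vg}/${pool}" \
        "lvs -a ${vg}"
}
```

Running `thin_pool_recovery_plan pve data` just lists the five steps. The `lvconvert --repair` step would only be needed if the first `lvchange -ay` fails, and the error it prints is probably worth posting here first.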

Hoping to get some advice before diving in.

Also, if it is relevant, here is a screenshot from the Web GUI of Node/Disks/LVM. It says Usage is 99.9%, but it also says that there is 15.99GiB free, so I'm a bit confused.

Can anyone shed some light on my issue?

Thank you so much in advance.

- Sean
