
[SOLVED] No access to Proxmox web GUI only SSH

- Thread starter dasc



Run in your root shell:

Code: pvecm updatecerts --force

Hi Jayr

Thanks for your reply.

I've tried your suggestion, and this is the result:

ipcc_send_rec[1] failed: Connection refused

ipcc_send_rec[2] failed: Connection refused

ipcc_send_rec[3] failed: Connection refused

Unable to load access control list: Connection refused

Rgds

Are you running a cluster?

ls /etc/pve, is it mounted?

Hi Jayr

I'm not running a cluster, and it's not mounted.

Rgds

Then your pve-cluster service is probably not running. You could check that with systemctl status pve-cluster and look at its logs to see why it failed.
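A minimal sketch of that check, assuming a standard systemd-based Proxmox VE host. The function names check_pve_cluster and df_use_percent are made up for illustration. Since /etc/pve is provided by the pve-cluster service, it may also be worth looking at the free space on the root filesystem at the same time:

```shell
# Hypothetical helper names; systemctl, journalctl, df and awk are the real tools.

# Print the use percentage (without the % sign) from `df -P` output on stdin.
df_use_percent() {
    awk 'NR == 2 { sub(/%$/, "", $5); print $5 }'
}

# Show the service state, its log for the current boot, and root filesystem usage.
check_pve_cluster() {
    systemctl status pve-cluster --no-pager
    journalctl -u pve-cluster -b --no-pager | tail -n 50
    echo "root filesystem use: $(df -P / | df_use_percent)%"
}
```

Nothing runs until check_pve_cluster is called from a root shell.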

Thanks;

I dug deeper and found that I have no space on the boot drive. I decided on a fresh installation, but now my question is how to add the drives where I have my backups to that new installation. Attached lvs

Go in the webUI to Datacenter -> Storage -> Add, choose the correct storage type and point it to your existing disks.

How did you store your backups? If it was something like an ext4 partition you would, for example, need to mount it first (using fstab or systemd) and then add a Directory storage pointing to that mountpoint.
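A rough sketch of the ext4 case, assuming the backup partition is /dev/sdX1 and should be mounted at /mnt/backup. The device, the mountpoint, the storage name MyBackup and the helper fstab_line are placeholders, not values from this thread. The nofail option is an addition here so that a missing disk does not block booting:

```shell
# Build an fstab line that mounts a filesystem by UUID.
# Arguments: UUID, mountpoint, filesystem type (all placeholders here).
fstab_line() {
    printf 'UUID=%s %s %s defaults,nofail 0 2\n' "$1" "$2" "$3"
}

# Intended use as root (commented out so nothing runs by accident):
#   uuid=$(blkid -s UUID -o value /dev/sdX1)
#   mkdir -p /mnt/backup
#   fstab_line "$uuid" /mnt/backup ext4 >> /etc/fstab
#   mount /mnt/backup
#   pvesm add dir MyBackup --path /mnt/backup --content backup --is_mountpoint yes
```

The last command is the CLI counterpart of adding a Directory storage through Datacenter -> Storage -> Add.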

Done and working;

Thank you so much for your amazing support

Don't forget to run pvesm set --is_mountpoint yes YourStorageName in case it is a directory storage.

Hi There

Sorry to disturb you again. I ran pvesm set --is_mountpoint yes YourStorageName and now both backup devices appear with a question mark and nothing inside.

What am I missing? Again, your support will be welcome.

Rgds

Then it does what it is supposed to do: it blocks that Directory storage when your filesystem isn't mounted. If it didn't do that, your backups would end up on your root filesystem (so your system disk) instead of your backup disk. Sooner or later these backups would fill up your root filesystem until it is 100% full, and then your PVE server would stop working.

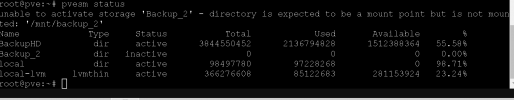

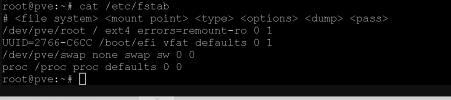

What are the outputs of...?:

lsblk

cat /etc/pve/storage.conf

cat /etc/fstab

pvesm status

Because either your backup disk isn't mounted properly or you used a subfolder on that backup disk as a directory storage, where you then might need to edit the "--is_mountpoint" from "yes" to "/mountpoint/used/in/fstab".
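To illustrate what the is_mountpoint check guards against, here is a small sketch that tests whether a directory is currently a mount target by looking it up in /proc/mounts. The function name is_mounted and its optional second argument are assumptions for illustration. This is not how PVE implements the check internally, and paths containing spaces would need extra handling:

```shell
# Succeed if PATH appears as a mount target in the mounts table.
# Usage: is_mounted PATH [MOUNTS_FILE]   (MOUNTS_FILE defaults to /proc/mounts)
is_mounted() {
    awk -v p="$1" '$2 == p { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

# Example: warn before writing backups to a directory that is not mounted.
#   is_mounted /mnt/backup_2 || echo "/mnt/backup_2 is not mounted" >&2
```

On most systems, mountpoint -q /mnt/backup_2 from util-linux should give the same answer.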

Hi, thanks for your quick reply.

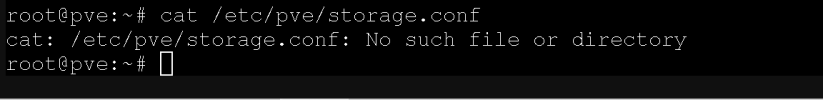

Please find the requested info in the attached files.

ssd is backup but not yet mounted

Code:

root@pve:~# cat /etc/pve/storage.conf

cat: /etc/pve/storage.conf: No such file or directory

root@pve:~# cat /etc/fstab

# <file system> <mount point> <type> <options> <dump> <pass>

/dev/pve/root / ext4 errors=remount-ro 0 1

UUID=2766-C6CC /boot/efi vfat defaults 0 1

/dev/pve/swap none swap sw 0 0

proc /proc proc defaults 0 0

root@pve:~# pvesm status

unable to activate storage 'Backup_2' - directory is expected to be a mount point but is not mounted: '/mnt/backup_2'

Name Type Status Total Used Available %

BackupHD dir active 3844550452 2136794828 1512388364 55.58%

Backup_2 dir inactive 0 0 0 0.00%

local dir active 98497780 97228268 0 98.71%

local-lvm lvmthin active 366276608 85122683 281153924 23.24%

root@pve:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 894.3G 0 disk

├─sda1 8:1 0 1007K 0 part

├─sda2 8:2 0 512M 0 part

└─sda3 8:3 0 893.8G 0 part

├─pve--OLD--0CAD09EA-swap 253:0 0 8G 0 lvm

├─pve--OLD--0CAD09EA-root 253:1 0 112G 0 lvm

├─pve--OLD--0CAD09EA-data_tmeta 253:5 0 7.7G 0 lvm

│ └─pve--OLD--0CAD09EA-data-tpool 253:8 0 758.3G 0 lvm

│ ├─pve--OLD--0CAD09EA-data 253:9 0 758.3G 1 lvm

│ ├─pve--OLD--0CAD09EA-vm--104--disk--0 253:10 0 8G 0 lvm

│ ├─pve--OLD--0CAD09EA-vm--202--disk--0 253:11 0 40G 0 lvm

│ └─pve--OLD--0CAD09EA-vm--103--disk--0 253:12 0 20G 0 lvm

└─pve--OLD--0CAD09EA-data_tdata 253:7 0 758.3G 0 lvm

└─pve--OLD--0CAD09EA-data-tpool 253:8 0 758.3G 0 lvm

├─pve--OLD--0CAD09EA-data 253:9 0 758.3G 1 lvm

├─pve--OLD--0CAD09EA-vm--104--disk--0 253:10 0 8G 0 lvm

├─pve--OLD--0CAD09EA-vm--202--disk--0 253:11 0 40G 0 lvm

└─pve--OLD--0CAD09EA-vm--103--disk--0 253:12 0 20G 0 lvm

sdb 8:16 0 3.6T 0 disk

└─sdb1 8:17 0 3.6T 0 part /mnt/Backup

sdc 8:32 0 476.9G 0 disk

├─sdc1 8:33 0 1007K 0 part

├─sdc2 8:34 0 512M 0 part /boot/efi

└─sdc3 8:35 0 476.4G 0 part

├─pve-swap 253:2 0 8G 0 lvm [SWAP]

├─pve-root 253:3 0 96G 0 lvm /

├─pve-data_tmeta 253:4 0 3.6G 0 lvm

│ └─pve-data-tpool 253:13 0 349.3G 0 lvm

│ ├─pve-data 253:14 0 349.3G 1 lvm

│ ├─pve-vm--101--disk--0 253:15 0 4M 0 lvm

│ ├─pve-vm--101--disk--1 253:16 0 60G 0 lvm

│ ├─pve-vm--100--disk--0 253:17 0 30G 0 lvm

│ ├─pve-vm--102--disk--0 253:18 0 8G 0 lvm

│ ├─pve-vm--103--disk--0 253:19 0 20G 0 lvm

│ ├─pve-vm--104--disk--0 253:20 0 30G 0 lvm

│ └─pve-vm--106--disk--0 253:21 0 10G 0 lvm

└─pve-data_tdata 253:6 0 349.3G 0 lvm

└─pve-data-tpool 253:13 0 349.3G 0 lvm

├─pve-data 253:14 0 349.3G 1 lvm

├─pve-vm--101--disk--0 253:15 0 4M 0 lvm

├─pve-vm--101--disk--1 253:16 0 60G 0 lvm

├─pve-vm--100--disk--0 253:17 0 30G 0 lvm

├─pve-vm--102--disk--0 253:18 0 8G 0 lvm

├─pve-vm--103--disk--0 253:19 0 20G 0 lvm

├─pve-vm--104--disk--0 253:20 0 30G 0 lvm

└─pve-vm--106--disk--0 253:21 0 10G 0 lvm

sdd 8:48 0 3.6T 0 disk

└─sdd1 8:49 0 3.6T 0 part

root@pve:~# cat /etc/pve/storage.cfg

dir: local

path /var/lib/vz

content backup,iso,vztmpl

shared 1

lvmthin: local-lvm

thinpool data

vgname pve

content rootdir,images

dir: Backup_2

path /mnt/backup_2

content backup,images,iso,rootdir,vztmpl,snippets

is_mountpoint yes

prune-backups keep-all=1

shared 1

dir: BackupHD

path /mnt/Backup

content backup,images,iso,rootdir,snippets,vztmpl

prune-backups keep-all=1

shared 1

root@pve:~#

Sorry, should be cat /etc/pve/storage.cfg, not cat /etc/pve/storage.conf.

And is sdd used for your "Backup_2" storage? That output is cut off.

How did you mount the HDD "Backup_2" is referring to?

Ok it's good

Jup, so "Backup_2" isn't available because you didn't mount it yet. Once you mount it, it should be available.

But your "BackupHD" got no "is_mountpoint yes". You should run pvesm set --is_mountpoint yes BackupHD too.

OK it's done; 2 T out of 4T, good.

Another question please. Local PVE is almost full even with the old instance. How to remove that garbage?
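Regarding the is_mountpoint advice above, here is a small sketch that lists which dir storages in a storage.cfg lack the option. The function name is hypothetical, and the parsing only assumes the section layout visible in the output earlier in this thread (a "type: name" header followed by "key value" lines):

```shell
# Print the names of "dir" storages that have no is_mountpoint set.
# Reads storage.cfg-style text on stdin.
dirs_without_mountpoint() {
    awk '
        function emit() { if (name != "" && !mp) print name }
        /^[a-z]+:[ \t]/ {              # section header, e.g. "dir: BackupHD"
            emit(); name = ""; mp = 0
            if ($1 == "dir:") name = $2
            next
        }
        $1 == "is_mountpoint" && $2 != "no" && $2 != "0" { mp = 1 }
        END { emit() }
    '
}

# Example (as root on the PVE host):
#   dirs_without_mountpoint < /etc/pve/storage.cfg
```

The default "local" storage will be listed as well; it lives on the root filesystem by design, so the flag only matters for storages that sit on a separately mounted disk.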

First you should find out what filled your root filesystem up. A good point to start would be running:

sudo du -a / 2>/dev/null | sort -n -r | head -n 30

find / -type f -printf '%s %p\n' | sort -nr | head -30

In case you already wrote your backups to the root filesystem instead of your backup disks (because "is_mountpoint yes" wasn't enabled back then to prevent that), you would first need to unmount your backup disks to be able to see whether there are files in those two mountpoints (they should be empty then).
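One caveat with the du command above is that it also descends into the mounted backup disks. A variant that stays on a single filesystem might look like the sketch below. The function name largest_on_fs is made up, and -x is the du option that skips directories on other filesystems:

```shell
# List the N largest files and directories under DIR without crossing
# into other mounted filesystems (sizes in 1K blocks, largest first).
# Usage: largest_on_fs DIR [N]
largest_on_fs() {
    du -x -a -k "$1" 2>/dev/null | sort -n -r | head -n "${2:-30}"
}

# Example: what is using space on the root filesystem itself?
#   largest_on_fs / 30
```

Files hidden underneath an active mountpoint still will not show up this way, which is why unmounting the backup disks is needed to inspect those directories.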

Attached result of both commands

Code:

root@pve:~# sudo du -a / 2>/dev/null | sort -n -r | head -n 30

3974349496 /

3970790976 /mnt

2136794820 /mnt/Backup

2098201208 /mnt/Backup/images

2098201204 /mnt/Backup/images/202

2098201200 /mnt/Backup/images/202/vm-202-disk-0.raw

1740251524 /mnt/Backup_2

1729793464 /mnt/Backup_2/dump

822763868 /mnt/Backup_2/dump/vzdump-qemu-202-2022_12_12-09_46_42.vma.zst

822256420 /mnt/Backup_2/dump/vzdump-qemu-202-2022_11_24-00_10_14.vma.zst

92237712 /mnt/backup

82067512 /mnt/backup/dump

38593572 /mnt/Backup/dump

36347564 /mnt/backup/dump/vzdump-qemu-101-2022_12_15-22_47_52.vma.zst

30670992 /mnt/Backup_2/dump/vzdump-qemu-101-2022_12_11-00_00_05.vma.zst

29860284 /mnt/Backup_2/dump/vzdump-qemu-101-2022_12_12-00_00_01.vma.zst

29007216 /mnt/Backup/dump/vzdump-qemu-101-2022_10_23-00_00_03.vma.zst

27295980 /mnt/backup/dump/vzdump-qemu-101-2022_12_14-17_14_07.vma.zst

10458020 /mnt/Backup_2/images

10458016 /mnt/Backup_2/images/103

10458012 /mnt/Backup_2/images/103/vm-103-disk-0.raw

10170176 /mnt/backup/images

8112136 /mnt/backup/dump/vzdump-lxc-103-2022_12_14-21_01_22.tar.zst

8080604 /mnt/Backup_2/dump/vzdump-lxc-103-2022_12_12-00_07_49.tar.zst

8080576 /mnt/Backup_2/dump/vzdump-lxc-103-2022_12_11-00_13_53.tar.zst

8080360 /mnt/Backup_2/dump/vzdump-lxc-103-2022_12_10-00_09_44.tar.zst

8080144 /mnt/Backup/dump/vzdump-lxc-103-2022_10_23-00_06_38.tar.zst

5958656 /mnt/backup/images/105

5958648 /mnt/backup/images/105/vm-105-disk-1.raw

5346336 /mnt/backup/dump/vzdump-qemu-106-2022_12_17-01_07_22.vma.zst

Code:

root@pve:~# find / -type f -printf '%s %p\n' | sort -nr | head -30

find: ‘/proc/89064/task/89064/fdinfo/5’: No such file or directory

find: ‘/proc/89064/fdinfo/6’: No such file or directory

140737471590400 /proc/kcore

2199359062016 /mnt/backup/images/106/vm-106-disk-0.qcow2

2148557389824 /mnt/Backup/images/202/vm-202-disk-0.raw

842510068491 /mnt/Backup_2/dump/vzdump-qemu-202-2022_12_12-09_46_42.vma.zst

841990460150 /mnt/Backup_2/dump/vzdump-qemu-202-2022_11_24-00_10_14.vma.zst

37219890260 /mnt/backup/dump/vzdump-qemu-101-2022_12_15-22_47_52.vma.zst

34359738368 /mnt/backup/images/105/vm-105-disk-1.raw

31407081740 /mnt/Backup_2/dump/vzdump-qemu-101-2022_12_11-00_00_05.vma.zst

30576918884 /mnt/Backup_2/dump/vzdump-qemu-101-2022_12_12-00_00_01.vma.zst

29703382363 /mnt/Backup/dump/vzdump-qemu-101-2022_10_23-00_00_03.vma.zst

27951072171 /mnt/backup/dump/vzdump-qemu-101-2022_12_14-17_14_07.vma.zst

21474836480 /mnt/Backup_2/images/103/vm-103-disk-0.raw

8306822843 /mnt/backup/dump/vzdump-lxc-103-2022_12_14-21_01_22.tar.zst

8274533038 /mnt/Backup_2/dump/vzdump-lxc-103-2022_12_12-00_07_49.tar.zst

8274505708 /mnt/Backup_2/dump/vzdump-lxc-103-2022_12_11-00_13_53.tar.zst

8274282085 /mnt/Backup_2/dump/vzdump-lxc-103-2022_12_10-00_09_44.tar.zst

8274062843 /mnt/Backup/dump/vzdump-lxc-103-2022_10_23-00_06_38.tar.zst

5474614574 /mnt/backup/dump/vzdump-qemu-106-2022_12_17-01_07_22.vma.zst

2120893478 /mnt/backup/dump/vzdump-qemu-105-2022_12_17-01_05_46.vma.zst

1514031971 /mnt/backup/dump/vzdump-qemu-106-2022_12_16-16_33_27.vma.zst

963513459 /mnt/Backup/dump/vzdump-lxc-100-2022_10_21-00_00_00.tar.zst

959602536 /mnt/backup_2/dump/vzdump-lxc-100-2022_12_17-03_00_01.tar.zst

867613258 /mnt/backup/dump/vzdump-lxc-104-2022_12_16-09_44_47.tar.zst

291667044 /mnt/backup_2/dump/vzdump-lxc-102-2022_12_17-09_17_18.tar.zst

291562431 /mnt/backup_2/dump/vzdump-lxc-102-2022_12_17-03_01_41.tar.zst

291531245 /mnt/backup/dump/vzdump-lxc-102-2022_12_17-01_05_22.tar.zst

290447472 /mnt/backup/dump/vzdump-lxc-102-2022_12_14-17_13_34.tar.zst

289448189 /mnt/Backup/dump/vzdump-lxc-104-2022_10_23-01_04_51.tar.zst

289264024 /mnt/Backup/dump/vzdump-lxc-104-2022_10_22-02_24_41.tar.zst

268435456 /sys/devices/pci0000:00/0000:00:02.0/resource2_wc

root@pve:~# find / -type f -printf '%s %p\n' | sort -nr | head -30

find: ‘/proc/89555/task/89555/fdinfo/5’: No such file or directory

find: ‘/proc/89555/fdinfo/6’: No such file or directory

140737471590400 /proc/kcore

2199359062016 /mnt/backup/images/106/vm-106-disk-0.qcow2

2148557389824 /mnt/Backup/images/202/vm-202-disk-0.raw

842510068491 /mnt/Backup_2/dump/vzdump-qemu-202-2022_12_12-09_46_42.vma.zst

841990460150 /mnt/Backup_2/dump/vzdump-qemu-202-2022_11_24-00_10_14.vma.zst

37219890260 /mnt/backup/dump/vzdump-qemu-101-2022_12_15-22_47_52.vma.zst

34359738368 /mnt/backup/images/105/vm-105-disk-1.raw

31407081740 /mnt/Backup_2/dump/vzdump-qemu-101-2022_12_11-00_00_05.vma.zst

30576918884 /mnt/Backup_2/dump/vzdump-qemu-101-2022_12_12-00_00_01.vma.zst

29703382363 /mnt/Backup/dump/vzdump-qemu-101-2022_10_23-00_00_03.vma.zst

27951072171 /mnt/backup/dump/vzdump-qemu-101-2022_12_14-17_14_07.vma.zst

21474836480 /mnt/Backup_2/images/103/vm-103-disk-0.raw

8306822843 /mnt/backup/dump/vzdump-lxc-103-2022_12_14-21_01_22.tar.zst

8274533038 /mnt/Backup_2/dump/vzdump-lxc-103-2022_12_12-00_07_49.tar.zst

8274505708 /mnt/Backup_2/dump/vzdump-lxc-103-2022_12_11-00_13_53.tar.zst

8274282085 /mnt/Backup_2/dump/vzdump-lxc-103-2022_12_10-00_09_44.tar.zst

8274062843 /mnt/Backup/dump/vzdump-lxc-103-2022_10_23-00_06_38.tar.zst

5474614574 /mnt/backup/dump/vzdump-qemu-106-2022_12_17-01_07_22.vma.zst

2120893478 /mnt/backup/dump/vzdump-qemu-105-2022_12_17-01_05_46.vma.zst

1514031971 /mnt/backup/dump/vzdump-qemu-106-2022_12_16-16_33_27.vma.zst

963513459 /mnt/Backup/dump/vzdump-lxc-100-2022_10_21-00_00_00.tar.zst

959602536 /mnt/backup_2/dump/vzdump-lxc-100-2022_12_17-03_00_01.tar.zst

867613258 /mnt/backup/dump/vzdump-lxc-104-2022_12_16-09_44_47.tar.zst

291667044 /mnt/backup_2/dump/vzdump-lxc-102-2022_12_17-09_17_18.tar.zst

291562431 /mnt/backup_2/dump/vzdump-lxc-102-2022_12_17-03_01_41.tar.zst

291531245 /mnt/backup/dump/vzdump-lxc-102-2022_12_17-01_05_22.tar.zst

290447472 /mnt/backup/dump/vzdump-lxc-102-2022_12_14-17_13_34.tar.zst

289448189 /mnt/Backup/dump/vzdump-lxc-104-2022_10_23-01_04_51.tar.zst

289264024 /mnt/Backup/dump/vzdump-lxc-104-2022_10_22-02_24_41.tar.zst

268435456 /sys/devices/pci0000:00/0000:00:02.0/resource2_wc

root@pve:~#