Hello,

We are having a problem with an NFS share that is mounted on our cluster. We use it only for VM backups.

The problem started after our NFS server (Debian 11) was rebooted.

pvestatd is flooding the log with:

Sep 14 23:06:00 node01 pvestatd[3331]: storage 'nfsbackup' is not online

Sep 14 23:06:00 node01 pvestatd[3331]: status update time (10.175 seconds)

Sep 14 23:06:10 node01 pvestatd[3331]: storage 'nfsbackup' is not online

Sep 14 23:06:10 node01 pvestatd[3331]: status update time (10.176 seconds)

Sep 14 23:06:21 node01 pvestatd[3331]: storage 'nfsbackup' is not online

Sep 14 23:06:21 node01 pvestatd[3331]: status update time (10.177 seconds)

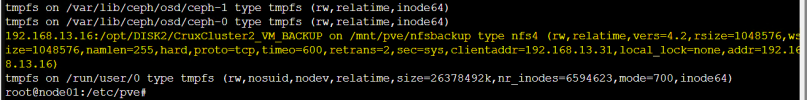

1. STORAGE.CFG file

    root@node01:~# cat /etc/pve/storage.cfg
    dir: local
            path /var/lib/vz
            content vztmpl,iso,backup

    zfspool: local-zfs
            pool rpool/data
            content rootdir,images
            sparse 1

    rbd: ceph-NVMe
            content rootdir,images
            krbd 0
            pool ceph-NVMe

    nfs: nfsbackup
            export /opt/DISK2/CruxCluster2_VM_BACKUP
            path /mnt/pve/nfsbackup
            server 192.168.13.16
            content iso,backup
            prune-backups keep-last=3

2. PVE VERSION

#pveversion

pve-manager/7.3-3/c3928077 (running kernel: 5.15.74-1-pve)

Kindly help us resolve this with minimal downtime, as this is a production cluster.
