We simply check whether it's mounted and, if so, parse the output of /proc/mounts.

Can you post the output of

?

Hello Dominik,

Here is the requested info:

root@node16-84:~# cat /proc/mounts

sysfs /sys sysfs rw,nosuid,nodev,noexec,relatime 0 0

proc /proc proc rw,relatime 0 0

udev /dev devtmpfs rw,nosuid,relatime,size=264130948k,nr_inodes=66032737,mode=755,inode64 0 0

devpts /dev/pts devpts rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000 0 0

tmpfs /run tmpfs rw,nosuid,nodev,noexec,relatime,size=52832916k,mode=755,inode64 0 0

rpool/ROOT/pve-1 / zfs rw,relatime,xattr,posixacl,casesensitive 0 0

securityfs /sys/kernel/security securityfs rw,nosuid,nodev,noexec,relatime 0 0

tmpfs /dev/shm tmpfs rw,nosuid,nodev,inode64 0 0

tmpfs /run/lock tmpfs rw,nosuid,nodev,noexec,relatime,size=5120k,inode64 0 0

cgroup2 /sys/fs/cgroup cgroup2 rw,nosuid,nodev,noexec,relatime 0 0

pstore /sys/fs/pstore pstore rw,nosuid,nodev,noexec,relatime 0 0

bpf /sys/fs/bpf bpf rw,nosuid,nodev,noexec,relatime,mode=700 0 0

systemd-1 /proc/sys/fs/binfmt_misc autofs rw,relatime,fd=30,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=28326 0 0

mqueue /dev/mqueue mqueue rw,nosuid,nodev,noexec,relatime 0 0

debugfs /sys/kernel/debug debugfs rw,nosuid,nodev,noexec,relatime 0 0

tracefs /sys/kernel/tracing tracefs rw,nosuid,nodev,noexec,relatime 0 0

hugetlbfs /dev/hugepages hugetlbfs rw,relatime,pagesize=2M 0 0

fusectl /sys/fs/fuse/connections fusectl rw,nosuid,nodev,noexec,relatime 0 0

configfs /sys/kernel/config configfs rw,nosuid,nodev,noexec,relatime 0 0

ramfs /run/credentials/systemd-sysusers.service ramfs ro,nosuid,nodev,noexec,relatime,mode=700 0 0

ramfs /run/credentials/systemd-sysctl.service ramfs ro,nosuid,nodev,noexec,relatime,mode=700 0 0

ramfs /run/credentials/systemd-tmpfiles-setup-dev.service ramfs ro,nosuid,nodev,noexec,relatime,mode=700 0 0

rpool/var-lib-vz /var/lib/vz zfs rw,relatime,xattr,noacl,casesensitive 0 0

rpool /rpool zfs rw,relatime,xattr,noacl,casesensitive 0 0

rpool/ROOT /rpool/ROOT zfs rw,relatime,xattr,noacl,casesensitive 0 0

rpool/data /rpool/data zfs rw,relatime,xattr,noacl,casesensitive 0 0

ramfs /run/credentials/systemd-tmpfiles-setup.service ramfs ro,nosuid,nodev,noexec,relatime,mode=700 0 0

binfmt_misc /proc/sys/fs/binfmt_misc binfmt_misc rw,nosuid,nodev,noexec,relatime 0 0

sunrpc /run/rpc_pipefs rpc_pipefs rw,relatime 0 0

lxcfs /var/lib/lxcfs fuse.lxcfs rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other 0 0

/dev/fuse /etc/pve fuse rw,nosuid,nodev,relatime,user_id=0,group_id=0,default_permissions,allow_other 0 0

172.16.16.195:/volume1/pvedatastore /mnt/pve/pvedatastore-16195 nfs4 rw,relatime,vers=4.0,rsize=131072,wsize=131072,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=172.16.16.84,local_lock=none,addr=172.16.16.195 0 0

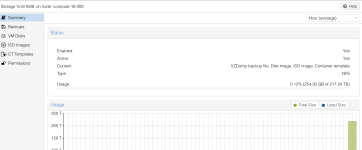

10.88.90.38:/n04 /mnt/pve/tintri1638 nfs4 rw,relatime,vers=4.1,rsize=262264,wsize=262144,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=null,clientaddr=10.88.90.84,local_lock=none,addr=10.88.90.38 0 0

tmpfs /run/user/0 tmpfs rw,nosuid,nodev,relatime,size=52832912k,nr_inodes=13208228,mode=700,inode64 0 0

Thank you for looking into this.