Hello,

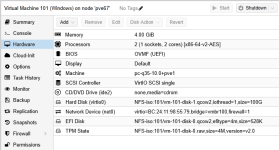

I have a new Proxmox environment with only a single VM. When I create a new VM and select Windows as the guest OS, the machine boots into Startup Repair even though no media is attached. If I create a brand-new VM and do attach installation media, it still boots into Startup Repair instead of the installation ISO unless I go through the boot menu.

Is this intended? Shouldn't the formatted disks of a new VM contain no data at all?

Thank you,