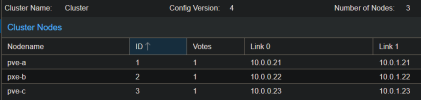

I purchased a third server (pve-c) and, a couple of weeks ago, set up all three servers with Proxmox as a cluster, using Ceph as the underlying storage (hyper-converged, HCI).

Since then, twice I've received an email saying the new server (pve-c) was being fenced.

Code:

The node 'pve-c' failed and needs manual intervention.

The PVE HA manager tries to fence it and recover the configured HA resources to a healthy node if possible.

When I log in, everything seems normal, but I can see that one VM was moved to another server and another was shut down.

This is my first time using Proxmox, so my first question is: how do I investigate this fencing? The server seems OK, so I'm not sure what could be failing. When I look at the 'System Log' in the GUI around the time of the email, I don't see anything unusual.

Additional detail: I looked at Ceph and it showed that the manager was down; that manager was on a different server, pve-a. I'm not sure why it had stopped, so I clicked Start and also created a second manager on pve-b. The manager on pve-b went into standby and the manager on pve-a started; after a few seconds Ceph showed HEALTH_OK.
