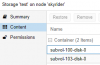

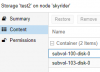

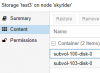

I currently have 4 storages: local, local-zfs, nginx-storage and test2. Apparently every new container I've created is added to the content of all storages (with the exception of local-zfs).

Is there any reason why every container I create is added under the content of all the custom storages I've created, even though I specifically selected the storage the container should use when I created it?

All these storages are ZFS, including the main partition. I've been trying to solve this for hours, and I just can't seem to find the solution. I'm still new to Proxmox, but I'm learning.

Thanks in advance!

Regards,

Skyrider
