There should be more messages in the health warning. What do your "ceph osd tree", "ceph osd dump" and complete "ceph status" outputs look like?

ceph osd tree

    ID WEIGHT  TYPE NAME        UP/DOWN REWEIGHT PRIMARY-AFFINITY
    -1 4.74995 root default
    -3 2.69997     host bambino
     1 0.90997         osd.1         up  1.00000          1.00000
     2 0.89000         osd.2         up  1.00000          1.00000
     5 0.89999         osd.5         up  1.00000          1.00000
    -5       0     host fagiolo
    -6 2.04999     host bomber
     6 0.89999         osd.6         up  1.00000          1.00000
     7 0.25000         osd.7         up  1.00000          1.00000
     0 0.89999         osd.0         up  1.00000          1.00000

Note: I have 2 OSD nodes; the third (fagiolo) is only a monitor.
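As a quick cross-check of the note above, here is a minimal Python sketch that counts which host buckets actually contain OSDs. It assumes the rows are in the order `ceph osd tree` prints them (each OSD directly after its host) and only reads the ID/WEIGHT/TYPE/NAME columns; `osds_per_host` is a hypothetical helper, not part of any Ceph tooling.

```python
# Rows copied from the "ceph osd tree" output above.
TREE = """\
-1 4.74995 root default
-3 2.69997 host bambino
1 0.90997 osd.1 up 1.00000 1.00000
2 0.89000 osd.2 up 1.00000 1.00000
5 0.89999 osd.5 up 1.00000 1.00000
-5 0 host fagiolo
-6 2.04999 host bomber
6 0.89999 osd.6 up 1.00000 1.00000
7 0.25000 osd.7 up 1.00000 1.00000
0 0.89999 osd.0 up 1.00000 1.00000
"""


def osds_per_host(tree_text):
    """Map each host bucket to the OSDs listed after it (relies on row order)."""
    hosts = {}
    current = None
    for line in tree_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        if fields[2] == "host":
            # A new host bucket starts; following osd.N rows belong to it.
            current = fields[3]
            hosts[current] = []
        elif fields[2].startswith("osd.") and current is not None:
            hosts[current].append(fields[2])
    return hosts


hosts = osds_per_host(TREE)
data_hosts = [h for h, osds in hosts.items() if osds]
print(hosts)
print("hosts holding OSDs:", len(data_hosts))
```

This confirms that fagiolo is an empty host bucket (weight 0), so only bambino and bomber can hold data.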

ceph osd dump

    epoch 3763
    fsid e3b8320a-5149-4269-93f4-eeddef3597b2
    created 2016-11-12 18:26:17.031319
    modified 2017-09-18 12:54:22.050054
    flags

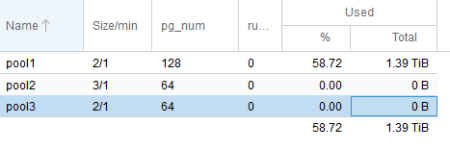

    pool 2 'pool1' replicated size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 128 pgp_num 128 last_change 3665 flags hashpspool stripe_width 0
        removed_snaps [1~d,14~c,23~2c]
    pool 8 'pool2' replicated size 3 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 64 pgp_num 64 last_change 3763 flags hashpspool stripe_width 0
    max_osd 8
    osd.0 up in weight 1 up_from 3621 up_thru 3758 down_at 3620 last_clean_interval [3606,3612) 10.10.10.13:6804/3158 10.10.10.13:6805/3158 10.10.10.13:6806/3158 10.10.10.13:6807/3158 exists,up da845d9b-c1b8-4982-80bf-a3bbde7cfa9a
    osd.1 up in weight 1 up_from 3619 up_thru 3758 down_at 3618 last_clean_interval [2773,3612) 10.10.10.10:6804/3801 10.10.10.10:6805/3801 10.10.10.10:6806/3801 10.10.10.10:6807/3801 exists,up 4c114795-8e8b-4009-bb7c-194ff1cf81d2
    osd.2 up in weight 1 up_from 3617 up_thru 3758 down_at 3616 last_clean_interval [2770,3612) 10.10.10.10:6800/3543 10.10.10.10:6801/3543 10.10.10.10:6802/3543 10.10.10.10:6803/3543 exists,up 4173da76-ee9f-4d0a-b7b2-a4c73e827409
    osd.5 up in weight 1 up_from 3618 up_thru 3758 down_at 3617 last_clean_interval [2772,3612) 10.10.10.10:6808/4021 10.10.10.10:6809/4021 10.10.10.10:6810/4021 10.10.10.10:6811/4021 exists,up d922a134-13a0-4bb5-b4f2-cd605f31a84f
    osd.6 up in weight 1 up_from 3619 up_thru 3758 down_at 3618 last_clean_interval [3607,3612) 10.10.10.13:6800/2920 10.10.10.13:6801/2920 10.10.10.13:6802/2920 10.10.10.13:6803/2920 exists,up 087e17a3-5109-4c92-be6a-59222a85af90
    osd.7 up in weight 1 up_from 3614 up_thru 3758 down_at 3613 last_clean_interval [3603,3612) 10.10.10.13:6808/3376 10.10.10.13:6809/3376 10.10.10.13:6810/3376 10.10.10.13:6811/3376 exists,up d59b4a73-a552-4365-b9a3-04c5f6898695
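A rough sketch comparing each pool's replicated size with the number of hosts that hold OSDs. It assumes that crush_ruleset 0 places every replica on a different host (a common default, but the CRUSH map itself is not shown here) and hard-codes the two OSD hosts from the tree above; `undersized_pools` is an illustrative helper only.

```python
import re

# Pool lines copied from the "ceph osd dump" output above.
POOLS = [
    "pool 2 'pool1' replicated size 2 min_size 1 crush_ruleset 0 object_hash rjenkins "
    "pg_num 128 pgp_num 128 last_change 3665 flags hashpspool stripe_width 0",
    "pool 8 'pool2' replicated size 3 min_size 1 crush_ruleset 0 object_hash rjenkins "
    "pg_num 64 pgp_num 64 last_change 3763 flags hashpspool stripe_width 0",
]

# ASSUMPTION: rule 0 uses "host" as the failure domain, and only
# bambino and bomber carry OSDs (fagiolo has weight 0 in the tree).
OSD_HOSTS = 2

POOL_RE = re.compile(
    r"pool (\d+) '([^']+)' replicated size (\d+) min_size (\d+) .*?pg_num (\d+)"
)


def undersized_pools(pool_lines, osd_hosts):
    """Return (name, size, pg_num) for pools wanting more replicas than hosts."""
    result = []
    for line in pool_lines:
        m = POOL_RE.search(line)
        if m is None:
            continue
        name, size, pg_num = m.group(2), int(m.group(3)), int(m.group(5))
        if size > osd_hosts:
            result.append((name, size, pg_num))
    return result


print(undersized_pools(POOLS, OSD_HOSTS))
```

Under that assumption, pool2 (size 3) can place only two of its three replicas, which would leave all 64 of its PGs undersized.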

This is the current log:

2017-09-19 09:32:07.177900 mon.0 10.10.10.4:6789/0 185338 : cluster [INF] pgmap v16033508: 192 pgs: 64 active+undersized+degraded, 128 active+clean; 1423 GB data, 3069 GB used, 2424 GB / 5541 GB avail; 112 kB/s rd, 1469 kB/s wr, 87 op/s
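The pgmap summary in that log line can be broken down the same way. This minimal sketch assumes the `<total> pgs: <count> <state>, ...;` layout shown above; `pg_states` is a hypothetical helper.

```python
import re

# Log line copied from above.
LOG = ("2017-09-19 09:32:07.177900 mon.0 10.10.10.4:6789/0 185338 : cluster [INF] "
       "pgmap v16033508: 192 pgs: 64 active+undersized+degraded, 128 active+clean; "
       "1423 GB data, 3069 GB used, 2424 GB / 5541 GB avail; "
       "112 kB/s rd, 1469 kB/s wr, 87 op/s")


def pg_states(line):
    """Parse '<total> pgs: <n> <state>, <n> <state>; ...' into (total, {state: n})."""
    m = re.search(r"(\d+) pgs: ([^;]+);", line)
    if m is None:
        raise ValueError("no pgmap summary found")
    total = int(m.group(1))
    states = {}
    for part in m.group(2).split(","):
        count, state = part.split()
        states[state] = int(count)
    return total, states


total, states = pg_states(LOG)
print(total, states)
# The per-state counts should add up to the total PG count.
assert sum(states.values()) == total
```

The 64 active+undersized+degraded PGs equal pool2's pg_num of 64, and the 128 active+clean PGs equal pool1's pg_num of 128.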