I'm having an issue with the VMs on my PVE server that may be one problem or two separate ones. I'm spinning up a VM to run more tests as I type this, but I wanted to get the ball rolling on finding a fix here while I wait.

I first noticed the issue when working with some Ubuntu VMs, where it presented as the network being slow to come up. In some cases, if I opened the console to the VM and ran a ping to anywhere, everything would wake up and behave nicely after a few request timeouts. In others, it would be stubborn and not come online for 15-30 minutes, even with a ping running. On the Linux VMs, the connection doesn't seem to become unstable once it's online, but those systems don't see much traffic, and there are no error messages in the logs on either the PVE host or the VMs to confirm that either way.

This week, I've been trying to spin up a Windows 11 desktop for a contractor to remote into, and it's been having a severe enough variant of that issue that I ended up digging into it further. On the Windows VM (using the virtio network driver, though the same issue was present when I tested with both E1000 and RTL8139), the issue will arise after using the network - it will see the internet, and allow me to access a website or two, but won't stay connected long enough to activate the copy of Windows.

In troubleshooting I noticed that the Windows VM can ping the PVE host by IP just fine, but it can't ping any of the other VMs running on PVE, anything else on the local network, or anything beyond the gateway. The odd part is, it still gets DHCP from the network, which I can observe through changing the IP of its static lease at the DHCP server, and refreshing it on the VM. It will obtain the new IP even when Windows is unable to route beyond the PVE host.

This seems to be a routing issue, but as I'm kind of new to Proxmox, the inconsistent nature of it, as well as it not throwing any errors on host or VM, is throwing me for a loop. Any help someone could provide would be greatly appreciated.

Info on my environment, from requested fields in someone else's post for a similar issue:

- PVE version: 8.4.0

- Cluster configuration: Single PVE server

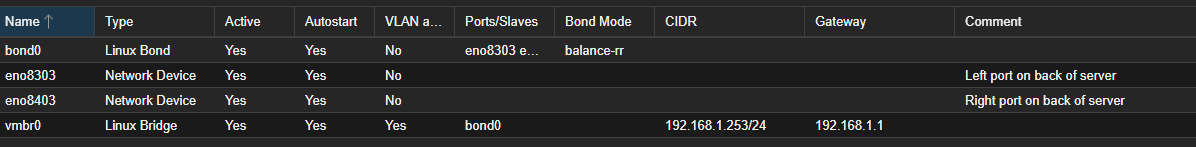

- Network configuration: Everything is the defaults at install, aside from bridging the two physical ports on the server

- VM OS: Ubuntu 24.04, and Windows 11 Pro

- VM network configuration: Defaults, other than manual IP configuration on Ubuntu. DHCP on Windows, with static lease configured on DHCP server

- VM logs during the issue: No errors present

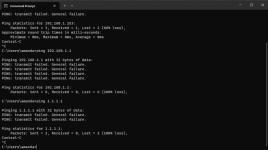

- Network traces during the issue: Traceroute doesn't get past the PVE server. The PVE server itself responds to pings, but nothing beyond it responds or receives traffic, including the other VMs

- What is the state of the VM during the issue? Can you access it via a console? Can you run troubleshooting commands?: I can access the VMs via console just fine, but network connectivity beyond that is nil
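
Because neither the host nor the VMs log any errors, a timestamped reachability log taken from the VM console could help show exactly when connectivity drops and how far traffic gets. The following is only a minimal sketch, assuming Python is available inside the VM. The addresses in `TARGETS` are placeholders, not values from this setup, and the helper names (`ping_once`, `first_failure`, `format_line`) are made up for illustration.

```python
import platform
import subprocess
import time
from datetime import datetime

# Placeholder addresses (assumptions): replace with the real PVE host,
# another VM on the same bridge, the gateway, and an external IP.
TARGETS = [
    ("pve-host", "192.168.1.10"),
    ("other-vm", "192.168.1.20"),
    ("gateway", "192.168.1.1"),
    ("internet", "1.1.1.1"),
]


def ping_once(address, timeout_s=2):
    """Send one ICMP echo with the system ping; True if it exits with 0."""
    if platform.system() == "Windows":
        cmd = ["ping", "-n", "1", "-w", str(timeout_s * 1000), address]
    else:
        cmd = ["ping", "-c", "1", "-W", str(timeout_s), address]
    try:
        result = subprocess.run(
            cmd,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout_s + 3,
        )
    except (subprocess.TimeoutExpired, OSError):
        return False
    # Caveat: on Windows, ping may exit 0 for some "unreachable" replies,
    # so treat results there as approximate.
    return result.returncode == 0


def first_failure(results):
    """Return the name of the first unreachable target, or None if all replied."""
    for name, ok in results:
        if not ok:
            return name
    return None


def format_line(timestamp, results):
    """Build one log line from a timestamp and ordered (name, ok) pairs."""
    status = " ".join(f"{name}={'ok' if ok else 'FAIL'}" for name, ok in results)
    return f"{timestamp} {status} first_failure={first_failure(results)}"


def main(interval_s=10):
    """Ping every target in order, print one line per round, repeat until Ctrl+C."""
    while True:
        results = [(name, ping_once(addr)) for name, addr in TARGETS]
        stamp = datetime.now().isoformat(timespec="seconds")
        print(format_line(stamp, results), flush=True)
        time.sleep(interval_s)
```

Calling `main()` starts the loop. Ordering the targets from nearest to farthest means the `first_failure` field shows at a glance whether traffic stops at the PVE host, at another VM, or at the gateway, and the timestamps can be lined up with anything happening on the host.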
