I'm about to upgrade my homelab with a proper router and switch, and it seems like a good time to change my Proxmox config as well. Currently I have Proxmox installed on an R720 with a quad NIC, which I will also be upgrading: from the quad 1Gb card to either a quad SFP+ card or a dual SFP+ / dual 1Gb card. I'm really only running two VMs on it right now: one is a TrueNAS VM and the other is an Ubuntu Server VM running Docker and lots of random services.

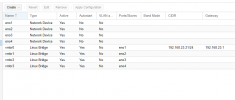

Currently I've just linked each VM to a physical NIC. I think it would be better to create a bond of the quad NIC and then share that bond with all the VMs on Proxmox. Is that accurate? Would that work with either NIC option, or only if I use the quad SFP+? Or am I thinking about this completely wrong, and do I need to give each VM its own NIC like I've been doing?
