For several weeks now, I've been struggling to improve the performance of Ceph on 3 nodes. Each node has four 6 TB disks plus one 1 TB NVMe drive that holds the RocksDB/WAL. I can't seem to get Ceph to run fast enough.

Below are my config files and test results:

pveversion -v

Code:

proxmox-ve: 7.4-1 (running kernel: 5.15.107-2-pve)

pve-manager: 7.4-3 (running version: 7.4-3/9002ab8a)

pve-kernel-5.15: 7.4-3

pve-kernel-5.15.107-2-pve: 5.15.107-2

ceph: 17.2.6-pve1

ceph-fuse: 17.2.6-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx4

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.4

libproxmox-backup-qemu0: 1.3.1-1

libproxmox-rs-perl: 0.2.1

libpve-access-control: 7.4-3

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.4-1

libpve-guest-common-perl: 4.2-4

libpve-http-server-perl: 4.2-3

libpve-rs-perl: 0.7.6

libpve-storage-perl: 7.4-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.2-2

lxcfs: 5.0.3-pve1

novnc-pve: 1.4.0-1

openvswitch-switch: 2.15.0+ds1-2+deb11u4

proxmox-backup-client: 2.4.2-1

proxmox-backup-file-restore: 2.4.2-1

proxmox-kernel-helper: 7.4-1

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.7.0

pve-cluster: 7.3-3

pve-container: 4.4-3

pve-docs: 7.4-2

pve-edk2-firmware: 3.20230228-2

pve-firewall: 4.3-2

pve-firmware: 3.6-5

pve-ha-manager: 3.6.1

pve-i18n: 2.12-1

pve-qemu-kvm: 7.2.0-8

pve-xtermjs: 4.16.0-1

qemu-server: 7.4-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+3

vncterm: 1.7-1

zfsutils-linux: 2.1.11-pve1

ceph.conf

Code:

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 10.50.251.0/24

fsid = 7d00b675-2f1e-47ff-a71c-b95d1745bc39

mon_allow_pool_delete = true

mon_host = 10.50.250.1 10.50.250.2 10.50.250.3

ms_bind_ipv4 = true

ms_bind_ipv6 = false

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 10.50.250.0/24

[client]

keyring = /etc/pve/priv/$cluster.$name.keyring

[mon.nd01]

public_addr = 10.50.250.1

[mon.nd02]

public_addr = 10.50.250.2

[mon.nd03]

public_addr = 10.50.250.3

crushmap

Code:

# begin crush map

tunable choose_local_tries 0

tunable choose_local_fallback_tries 0

tunable choose_total_tries 50

tunable chooseleaf_descend_once 1

tunable chooseleaf_vary_r 1

tunable chooseleaf_stable 1

tunable straw_calc_version 1

tunable allowed_bucket_algs 54

# devices

device 0 osd.0 class hdd

device 1 osd.1 class hdd

device 2 osd.2 class hdd

device 3 osd.3 class hdd

device 4 osd.4 class hdd

device 5 osd.5 class hdd

device 6 osd.6 class hdd

device 7 osd.7 class hdd

device 8 osd.8 class hdd

device 9 osd.9 class hdd

device 10 osd.10 class hdd

device 11 osd.11 class hdd

# types

type 0 osd

type 1 host

type 2 chassis

type 3 rack

type 4 row

type 5 pdu

type 6 pod

type 7 room

type 8 datacenter

type 9 zone

type 10 region

type 11 root

# buckets

host nd01 {

id -3 # do not change unnecessarily

id -2 class hdd # do not change unnecessarily

id -9 class nvme # do not change unnecessarily

# weight 22.70319

alg straw2

hash 0 # rjenkins1

item osd.0 weight 5.67580

item osd.1 weight 5.67580

item osd.2 weight 5.67580

item osd.3 weight 5.67580

}

host nd02 {

id -5 # do not change unnecessarily

id -4 class hdd # do not change unnecessarily

id -10 class nvme # do not change unnecessarily

# weight 22.70319

alg straw2

hash 0 # rjenkins1

item osd.4 weight 5.67580

item osd.5 weight 5.67580

item osd.6 weight 5.67580

item osd.7 weight 5.67580

}

host nd03 {

id -7 # do not change unnecessarily

id -6 class hdd # do not change unnecessarily

id -11 class nvme # do not change unnecessarily

# weight 22.70319

alg straw2

hash 0 # rjenkins1

item osd.8 weight 5.67580

item osd.9 weight 5.67580

item osd.10 weight 5.67580

item osd.11 weight 5.67580

}

root default {

id -1 # do not change unnecessarily

id -8 class hdd # do not change unnecessarily

id -12 class nvme # do not change unnecessarily

# weight 68.10956

alg straw2

hash 0 # rjenkins1

item nd01 weight 22.70319

item nd02 weight 22.70319

item nd03 weight 22.70319

}

# rules

rule replicated_rule {

id 0

type replicated

step take default

step chooseleaf firstn 0 type host

step emit

}

# end crush map

interfaces

Code:

auto lo

iface lo inet loopback

auto eno1

iface eno1 inet manual

mtu 9216

auto eno2

iface eno2 inet manual

mtu 9216

auto mgmt

iface mgmt inet static

address 10.50.253.1/24

gateway 10.50.253.254

ovs_type OVSIntPort

ovs_bridge vmbr0

auto cluster

iface cluster inet static

address 10.50.252.1/24

ovs_type OVSIntPort

ovs_bridge vmbr0

ovs_mtu 9000

ovs_options tag=4043

auto cephcluster

iface cephcluster inet static

address 10.50.251.1/24

ovs_type OVSIntPort

ovs_bridge vmbr0

ovs_mtu 9000

ovs_options tag=4045

auto cephpublic

iface cephpublic inet static

address 10.50.250.1/24

ovs_type OVSIntPort

ovs_bridge vmbr0

ovs_mtu 9000

ovs_options tag=4053

auto bond0

iface bond0 inet manual

ovs_bonds eno1 eno2

ovs_type OVSBond

ovs_bridge vmbr0

ovs_mtu 9216

ovs_options lacp=active bond_mode=balance-tcp

auto vmbr0

iface vmbr0 inet manual

ovs_type OVSBridge

ovs_ports bond0 mgmt cluster cephcluster cephpublic

ovs_mtu 9216

hdparm -tT --direct /dev/sdX

Code:

/dev/sda:

Timing O_DIRECT cached reads: 864 MB in 2.00 seconds = 431.80 MB/sec

SG_IO: bad/missing sense data, sb[]: 72 05 20 00 00 00 00 1c 02 06 00 00 cf 00 00 00 03 02 00 01 80 0e 00 00 00 00 00 00 00 00 00 00

Timing O_DIRECT disk reads: 738 MB in 3.00 seconds = 245.60 MB/sec

/dev/sdb:

Timing O_DIRECT cached reads: 856 MB in 2.00 seconds = 427.35 MB/sec

SG_IO: bad/missing sense data, sb[]: 72 05 20 00 00 00 00 1c 02 06 00 00 cf 00 00 00 03 02 00 01 80 0e 00 00 00 00 00 00 00 00 00 00

Timing O_DIRECT disk reads: 770 MB in 3.01 seconds = 256.21 MB/sec

/dev/sdc:

Timing O_DIRECT cached reads: 868 MB in 2.00 seconds = 434.24 MB/sec

SG_IO: bad/missing sense data, sb[]: 72 05 20 00 00 00 00 1c 02 06 00 00 cf 00 00 00 03 02 00 01 80 0e 00 00 00 00 00 00 00 00 00 00

Timing O_DIRECT disk reads: 752 MB in 3.00 seconds = 250.44 MB/sec

/dev/sdd:

Timing O_DIRECT cached reads: 860 MB in 2.00 seconds = 429.65 MB/sec

SG_IO: bad/missing sense data, sb[]: 72 05 20 00 00 00 00 1c 02 06 00 00 cf 00 00 00 03 02 00 01 80 0e 00 00 00 00 00 00 00 00 00 00

Timing O_DIRECT disk reads: 762 MB in 3.00 seconds = 253.97 MB/sec

/dev/nvme0n1:

Timing O_DIRECT cached reads: 4970 MB in 1.99 seconds = 2492.82 MB/sec

Timing O_DIRECT disk reads: 6850 MB in 3.00 seconds = 2283.01 MB/sec

lspci

Code:

03:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

03:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

When testing, the transfer rate between nodes does not rise above 1-1.5 Gbps.
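To put that figure in context, here is a rough Python sketch that converts the link rates to MB/s and compares them with the hdparm disk reads above. It is only unit arithmetic on numbers already in this post; the helper name `gbps_to_mb_per_s` is made up for illustration, and it ignores protocol overhead and the small MB vs MiB difference in the hdparm output.

```python
# Rough unit conversion: link rate in Gbit/s -> MB/s (decimal units).
# Illustrative only; ignores TCP/Ethernet overhead.

def gbps_to_mb_per_s(gbps: float) -> float:
    """1 Gbit/s = 1000 Mbit/s = 125 MB/s."""
    return gbps * 1000 / 8

observed_gbps = (1.0, 1.5)   # node-to-node rate seen in my tests
nic_port_gbps = 10.0         # one 82599ES port, per the lspci output
hdd_read_mb_s = 245.60       # /dev/sda "disk reads" from hdparm (approximate unit match)

for rate in observed_gbps:
    print(f"{rate:>4.1f} Gbit/s = {gbps_to_mb_per_s(rate):7.1f} MB/s")

print(f"{nic_port_gbps:>4.1f} Gbit/s = {gbps_to_mb_per_s(nic_port_gbps):7.1f} MB/s (one NIC port)")
print(f"single HDD sequential read: about {hdd_read_mb_s:.1f} MB/s")
```

Under these assumptions, 1.5 Gbps is about 187.5 MB/s, which is less than the sequential read rate of a single HDD in the hdparm output and far below the 1250 MB/s of one 10-Gigabit port.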

ethtool -g -k -a

Code:

Ring parameters for eno1:

Pre-set maximums:

RX: 512

RX Mini: n/a

RX Jumbo: n/a

TX: 512

Current hardware settings:

RX: 512

RX Mini: n/a

RX Jumbo: n/a

TX: 512

Pause parameters for eno1:

Autonegotiate: off

RX: on

TX: on

Features for eno1:

rx-checksumming: on

tx-checksumming: on

tx-checksum-ipv4: off [fixed]

tx-checksum-ip-generic: on

tx-checksum-ipv6: off [fixed]

tx-checksum-fcoe-crc: on [fixed]

tx-checksum-sctp: on

scatter-gather: on

tx-scatter-gather: on

tx-scatter-gather-fraglist: off [fixed]

tcp-segmentation-offload: on

tx-tcp-segmentation: on

tx-tcp-ecn-segmentation: off [fixed]

tx-tcp-mangleid-segmentation: off

tx-tcp6-segmentation: on

generic-segmentation-offload: on

generic-receive-offload: on

large-receive-offload: off

rx-vlan-offload: on

tx-vlan-offload: on

ntuple-filters: off

receive-hashing: on

highdma: on [fixed]

rx-vlan-filter: on

vlan-challenged: off [fixed]

tx-lockless: off [fixed]

netns-local: off [fixed]

tx-gso-robust: off [fixed]

tx-fcoe-segmentation: on [fixed]

tx-gre-segmentation: on

tx-gre-csum-segmentation: on

tx-ipxip4-segmentation: on

tx-ipxip6-segmentation: on

tx-udp_tnl-segmentation: on

tx-udp_tnl-csum-segmentation: on

tx-gso-partial: on

tx-tunnel-remcsum-segmentation: off [fixed]

tx-sctp-segmentation: off [fixed]

tx-esp-segmentation: on

tx-udp-segmentation: on

tx-gso-list: off [fixed]

fcoe-mtu: off [fixed]

tx-nocache-copy: off

loopback: off [fixed]

rx-fcs: off [fixed]

rx-all: off

tx-vlan-stag-hw-insert: off [fixed]

rx-vlan-stag-hw-parse: off [fixed]

rx-vlan-stag-filter: off [fixed]

l2-fwd-offload: off

hw-tc-offload: off

esp-hw-offload: on

esp-tx-csum-hw-offload: on

rx-udp_tunnel-port-offload: off [fixed]

tls-hw-tx-offload: off [fixed]

tls-hw-rx-offload: off [fixed]

rx-gro-hw: off [fixed]

tls-hw-record: off [fixed]

rx-gro-list: off

macsec-hw-offload: off [fixed]

rx-udp-gro-forwarding: off

hsr-tag-ins-offload: off [fixed]

hsr-tag-rm-offload: off [fixed]

hsr-fwd-offload: off [fixed]

hsr-dup-offload: off [fixed]

What is the optimal testing mechanism? I am using the following commands:

Code:

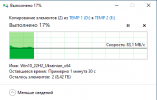

rbd bench --io-type write --io-size 4096 --io-threads 16 --io-total 1G --io-pattern seq ceph/test_image

bench type write io_size 4096 io_threads 16 bytes 1073741824 pattern sequential

elapsed: 5 ops: 262144 ops/sec: 46812 bytes/sec: 183 MiB/s

rbd bench --io-type write --io-size 4096 --io-threads 16 --io-total 1G --io-pattern rand ceph/test_image

bench type write io_size 4096 io_threads 16 bytes 1073741824 pattern random

elapsed: 58 ops: 262144 ops/sec: 4487.9 bytes/sec: 18 MiB/s

rbd bench --io-type read --io-size 4096 --io-threads 16 --io-total 1G --io-pattern seq ceph/test_image

bench type read io_size 4096 io_threads 16 bytes 1073741824 pattern sequential

elapsed: 36 ops: 262144 ops/sec: 7156.24 bytes/sec: 28 MiB/s

rbd bench --io-type read --io-size 4096 --io-threads 16 --io-total 1G --io-pattern rand ceph/test_image

bench type read io_size 4096 io_threads 16 bytes 1073741824 pattern random

elapsed: 133 ops: 262144 ops/sec: 1970.44 bytes/sec: 7.7 MiB/s

Am I testing incorrectly, or does the setup still need to be tuned somehow?
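For reference, the ops/sec and throughput columns in the rbd bench output are related through the 4096-byte I/O size. The Python sketch below (not part of the rbd tooling; the helper name `ops_to_mib_per_s` is hypothetical) recomputes MiB/s from the ops/sec values reported above, which makes it easier to compare the four runs.

```python
# Recompute throughput from the ops/sec values in the rbd bench output above.
# Assumes every operation transfers exactly --io-size bytes (4096 here).

IO_SIZE = 4096            # bytes, from --io-size 4096
MIB = 1024 * 1024         # bytes per MiB

def ops_to_mib_per_s(ops_per_sec: float, io_size: int = IO_SIZE) -> float:
    """Convert an operation rate into MiB/s for a fixed I/O size."""
    return ops_per_sec * io_size / MIB

# ops/sec values copied from the four runs above
results = {
    "write seq": 46812,
    "write rand": 4487.9,
    "read seq": 7156.24,
    "read rand": 1970.44,
}

for name, ops in results.items():
    print(f"{name:10s} {ops:>9.2f} ops/s -> {ops_to_mib_per_s(ops):6.1f} MiB/s")
```

With 4 KiB operations the numbers are dominated by per-operation latency rather than raw bandwidth, so the MiB/s column mostly restates the IOPS figure.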
