Hi All,

I am looking for the "best practice" for upgrading a server in a cluster (22 systems) that is sharing its iSCSI mounts with the rest of the cluster.

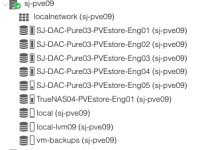

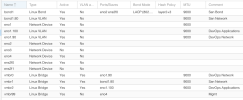

I am about to upgrade the memory on one of my main servers, which is the focal point for the connection to the iSCSI storage. I am really concerned that shutting it down would take down all of the VMs in the cluster, as the storage is being shared from that server.

Give me some ideas.

Thanks

--paul
