So I installed a new VM for Home Assistant but accidentally gave it VM ID 101, which already existed on the machine. It overwrote the existing VM. There were issues with the script install, so I deleted the VM and recreated it.

What I didn't notice was that VM 101 was already taken; because it was a VM and not an LXC, it was not at the top of the list. This particular VM was a Synology VM with two 6TB drives in a RAID1 configuration. When I noticed it was gone, I looked at the storage pools for NAS1 and NAS2: they are no longer full, but instead show 0.57% used on both drives. I restored a backup of the Synology VM, added the detached device (from the Hardware tab), and booted it up, but it does not appear to see the drives.

Did the two drives get wiped out? Is it possible that the data is still on those drives? Is there any way to get the restored VM to recognize and reattach them?

So, a bit more info.

Listed in the 101.conf file:

sata2: nasDrive1:vm-101-disk-0,size=32G

sata3: nasDrive2:vm-101-disk-0,size=32G

What is throwing me is that these disks are 6TB (5.5T) each, yet the config lists them as 32G.
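One possible reading of those numbers, as a rough sketch only: if the pool size is taken to be 5.5T (5.5 * 1024 = 5632 GiB) and a single 32G volume is assumed to be the only thing allocated on it, the usage works out to about the 0.57% shown on both pools. The helper name pct_used is made up for illustration.

```shell
# Percentage of a pool taken up by one volume; both sizes are given in GiB.
pct_used() {
  awk -v used="$1" -v total="$2" 'BEGIN { printf "%.2f\n", used / total * 100 }'
}

# 32G volume on a 5.5T pool (5.5 * 1024 = 5632 GiB, an assumed figure)
pct_used 32 5632   # prints 0.57
```

This only shows that the percentage is consistent with each pool currently holding nothing but a 32G volume; it says nothing about whether the old data is still recoverable underneath.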

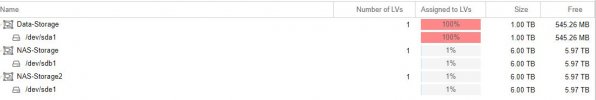

Here is what shows that info:

Code:

lsblk -fs

NAS--Storage-vm--101--disk--0

└─sdb1 LVM2_member LVM2 001 xxxxxx-xxxx-xxxx-xxxx-xxxx-xxxx-xxxxxx

└─sdb

NAS--Storage2-vm--101--disk--0

└─sde1 LVM2_member LVM2 001 xxxxxx-xxxx-xxxx-xxxx-xxxx-xxxx-xxxxxx

└─sde

Code:

sdb 8:16 0 5.5T 0 disk

└─sdb1 8:17 0 5.5T 0 part

└─NAS--Storage-vm--101--disk--0 253:1 0 32G 0 lvm

sdc 8:32 0 1.8T 0 disk /mnt/data2

sdd 8:48 1 28.9G 0 disk /mnt/data

sde 8:64 0 5.5T 0 disk

└─sde1 8:65 0 5.5T 0 part

└─NAS--Storage2-vm--101--disk--0 253:0 0 32G 0 lvm
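A side note on reading the names in the lsblk output: device-mapper joins the volume group and the logical volume with a single hyphen, and doubles any hyphen that was already part of either name. Below is a small sketch of undoing that. dm_split is a hypothetical helper, and it assumes neither name begins or ends with a hyphen and that "|" does not occur in the names.

```shell
# Split a device-mapper name into "<volume group> <logical volume>".
dm_split() {
  printf '%s\n' "$1" | awk '{
    gsub(/--/, "|")            # protect the doubled (escaped) hyphens
    i = index($0, "-")         # the first remaining hyphen separates VG from LV
    vg = substr($0, 1, i - 1)
    lv = substr($0, i + 1)
    gsub(/\|/, "-", vg)        # restore the original hyphens
    gsub(/\|/, "-", lv)
    print vg, lv
  }'
}

dm_split 'NAS--Storage-vm--101--disk--0'    # prints: NAS-Storage vm-101-disk-0
dm_split 'NAS--Storage2-vm--101--disk--0'   # prints: NAS-Storage2 vm-101-disk-0
```

Read that way, the logical volume on each disk is vm-101-disk-0, which is the same volume name the sata2 and sata3 entries in 101.conf refer to.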
