TheCrwalingKingSnake

Guest

Hello all,

I just heard about ProxMox and got it up and running on a pretty nice machine here. However, I would like to use both NICs on the server. I have a fresh install of ProxMox v2.2-24 / 7f9cfa4c.

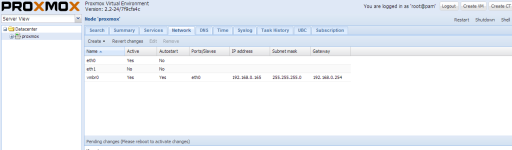

I'll post a screenshot of my Network tab if it helps. I have the 2nd NIC (eth1) plugged into the network, and I have tested that port and cable with a laptop, which connected to the network fine. However, I am wondering whether I need to set it to autostart, and whether it should show as active. I tried following the video for bonding ( http://pve.proxmox.com/wiki/Bond_configuration_(Video) ), but I set the bond mode to balance-rr instead of active-backup. After that I could no longer connect to ProxMox remotely, so I reinstalled ProxMox.

What I would like to do is bond the two NICs together for a faster connection. Sorry if this gets asked a million times, but is there a page that explains what the bonding options mean (balance-rr, active-backup, balance-xor)? Is bonding even what I want, or do I want a bridge?

Any help/suggestions would be appreciated!

Thanks,

-Mark
