Hi All,

I'm trying to run MariaDB with the right block sizes for the best performance, either in a VM or in an LXC container. With an LXC container, I can set the `recordsize` on particular datasets on the host so that the container uses them. I guess this also removes one layer of filesystem abstraction compared to a VM, so it may be a good idea anyway.
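A minimal sketch of that container-side setup. It assumes a hypothetical CT ID 1002 and a hypothetical mount point volume on the same `pool-hdd` storage, and the 500G size and `/var/lib/mysql` path are assumptions too. The database directory gets its own mount point in the container config (`/etc/pve/lxc/1002.conf`). The backing dataset's recordsize is then changed on the host with `zfs set recordsize=16K pool-hdd/subvol-1002-disk-1`.

```
mp0: pool-hdd:subvol-1002-disk-1,mp=/var/lib/mysql,size=500G
```

A recordsize change only affects blocks written after it is set, so it is best done before the data is loaded.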

The problem is backup: we have 2 TB of data, and LXC backup is slow compared to VM backup, which uses the dirty bitmap to back up only the changes.

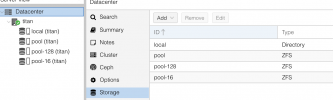

The pool's recordsize is 128K. Do I need to create a pool with the correct recordsize (16K) and then create the VM inside it?
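For context, here is a sketch of the distinction, under stated assumptions. `recordsize` applies to ZFS filesystem datasets, which is what containers get. A VM disk on ZFS storage is a zvol, and its block size is `volblocksize`, which ZFS only lets you set when the zvol is created. On Proxmox VE, the `zfspool` storage type has a `blocksize` option that is used as the volblocksize for newly created disks. One possible layout is therefore a separate storage entry in `/etc/pve/storage.cfg` pointing at a child dataset. The storage ID `pool-hdd-16k` and the dataset `pool-hdd/db16k` are hypothetical, and the dataset would need to exist first (e.g. `zfs create pool-hdd/db16k`).

```
zfspool: pool-hdd-16k
        pool pool-hdd/db16k
        blocksize 16k
        content images
```

Existing disks keep the volblocksize they were created with. Only disks created on, or moved to, such a storage afterwards would pick up 16k.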

Using a VM on the normal pool, the zvol has a `volblocksize` property, but I don't know if that is what I should set. In fact, if I try to set it, I get:

```
cannot set property for 'pool-hdd/vm-1001-disk-0': 'volblocksize' is readonly
```

I really want to use a VM, because then I can back it up to PBS hourly without much overhead. Backing up an LXC container with this much data is too slow to do regularly.

Any ideas?

Thanks,

Jack