Bro, you're a wizard.

I will let this run for 24 hours to test before I try that change on the zvol.

Can you explain the command?

zfs set refreserv=3T Nextcloud/vm-101-disk-1

Well, this is like asking me to open a can of worms.

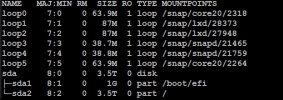

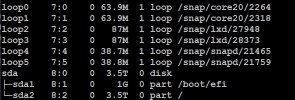

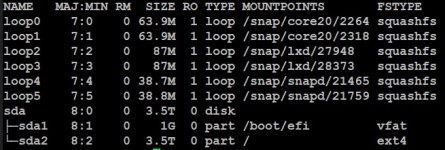

Especially if my hunch was right. The thing is, the moment you posted your first screenshot showing AVAIL as 0 on the pool, I wrote it off as not enough space.

Then I realised: oh, but wait, PVE uses REFRESERV (it's an extra option) to "save" people from overfilling. Basically, it's a topic I would best answer with "have a look at a deep-dive ZFS guide first" (just to align on the terminology). There's the pool, then there are datasets, the normal kind, and a special kind of dataset is a zvol (which makes the space appear as a block device - something you really have to do when you present it to VMs).

Because datasets can have children, and there are snapshots and all the other features like compression, deduplication, clones, etc., what constitutes "FREE" space is a very different concept. On top of that, you are running RAIDZ2, which is yet another layer of complexity, where what is actually "AVAIL" is what you called "usable capacity" (you probably calculate it differently, but trust that AVAIL number). You have some overhead because any data stored requires parity data to be stored alongside it elsewhere, and then a different amount of space gets wasted depending on how you set the ashift (especially for RAIDZ2). It's all quite counterintuitive.
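To put rough numbers on that overhead, here is a minimal sketch of how RAIDZ sizes an allocation: data sectors, plus parity sectors per stripe row, padded to a multiple of (parity + 1) sectors. The function name `raidz_asize`, the 6-disk width and the block sizes are all made up for illustration, I don't know your actual layout, so treat it as a back-of-the-envelope helper and not as what your pool reports.

```shell
# raidz_asize BYTES ASHIFT WIDTH PARITY
# Prints the approximate bytes allocated on a RAIDZ vdev for one block.
# Hypothetical helper; WIDTH is the number of disks in the vdev.
raidz_asize() {
    bytes=$1; ashift=$2; width=$3; parity=$4
    sector=$((1 << ashift))
    # Data sectors needed for the block, rounded up
    data=$(( (bytes + sector - 1) / sector ))
    # Each stripe row holds (width - parity) data sectors and needs
    # `parity` extra sectors
    datacols=$((width - parity))
    par=$(( parity * ((data + datacols - 1) / datacols) ))
    total=$((data + par))
    # The allocation is padded up to a multiple of (parity + 1) sectors
    unit=$((parity + 1))
    total=$(( (total + unit - 1) / unit * unit ))
    echo $((total * sector))
}

# Assumed example: 6-disk RAIDZ2 (parity 2), ashift=12 (4K sectors)
raidz_asize 8192 12 6 2      # an 8K block   -> 24576
raidz_asize 131072 12 6 2    # a 128K block  -> 196608
```

In this assumed layout an 8K block eats 24K on disk while a 128K block eats 192K, so small blocks on RAIDZ2 can cost far more than the "2 disks of parity" mental model suggests.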

To cut it short (if you are on the same page with the terminology), REFRESERV (short for refreservation) is basically reserved space for a dataset, because otherwise ZFS is basically thinly provisioned. The difference between REFRESERV and RESERV is that REFRESERV does not include e.g. snapshots - you are supposedly really making sure that the space will be there for user data, i.e. you would rather have snapshot creation fail than not have that (previously seemingly available) space. On the flip side, this reservation bites away from the parent, i.e. the pool then has no way to allow that space to be used by e.g. another dataset. But I find this case extreme in that it left 0 AVAIL for the pool itself.
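If you want to see how the reservation shows up in the accounting, something along these lines should do. The dataset name is the one from this thread, and the wrapper function `inspect_zvol` is just my own naming, so adjust both to your setup. It only reads properties and changes nothing.

```shell
# Dataset name taken from this thread; change it to your own zvol.
ZVOL="Nextcloud/vm-101-disk-1"

# inspect_zvol is a hypothetical wrapper, not part of ZFS itself.
inspect_zvol() {
    # Size and reservation-related properties of the zvol
    zfs get -o property,value \
        volsize,refreservation,reservation,usedbyrefreservation,available "$1"
    # Space breakdown (AVAIL, USEDSNAP, USEDDS, USEDREFRESERV, USEDCHILD)
    # for the whole pool; ${1%%/*} strips everything after the pool name
    zfs list -o space -r "${1%%/*}"
}

# Only run it where the zfs CLI actually exists
if command -v zfs >/dev/null 2>&1; then
    inspect_zvol "$ZVOL"
fi
```

The USEDREFRESERV column is the part of the pool that is held back purely because of the reservation, which is the number I would watch before and after changing the value.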

I do not quite know why a write to your zvol is causing failures, because you clearly have lots of AVAIL space in that dataset, but my guess is that it is trying to write something somewhere that requires space which does not count towards the dataset per se (but towards something else in the pool). For all I know, it might even be some sort of bug related to RAIDZ2, which is notorious for showing confusing numbers when it comes to FREE/AVAIL/DSUSED etc.

Let's wait and see whether it really resolved your problem.

NB: Be aware this does not really take any space away from you, it just no longer guarantees the ZVOL the entire space, but "only" 3TB. I suspect something needs to be written somewhere else in that pool, which was left with 0 usable space with the previous value set. I reckon that value was set courtesy of the PVE GUI. It would be interesting to nail this down further, because the guys developing PVE should be interested in this and might want to change their defaults.

Again, this is all in case my hunch was correct. If the above was more confusing than explanatory, my apologies, but you really need to grab a whole book on ZFS and then a RAIDZ2 chapter on top.