I hope the title gives some clue as to what is going on...

I could swear I saw a thread on this issue before but could not find it.

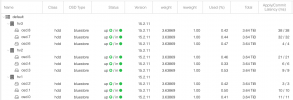

I have a 3 node cluster with Ceph. All 3 see a quorum. If I try to delete a VM from a specific virtual machine host, I get the error attached.

If I migrate the VM off that node to either of the other 2, I can then delete the VM without issue.

Side note: I've noticed that when logging into the web UI, 2 of the 3 nodes show the green checkmark instantly, but the problem node takes a few seconds. Including this in case it provides a clue about what is going on.
