Hi everyone,

I have built the following setup: Mini-PC from AliExpress with 8GB RAM, Intel N5105, 250 GB NVMe, 4 Intel 225 B3 connections

I run Proxmox on it (VLAN 10) and pfSense as my router on top of Proxmox. pfSense runs very well. Now my intention is to run a docker-server as a VM (VLAN 30) in parallel to pfSense. I passthrough 3 of the 4 network ports to pfSense. I only use two of them, one for WAN and one for LAN. The one that is not passed through is used to access the proxmox GUI. This is very stable as well. The proxmox server runs on VLAN 10 and the IP 10.23.10.30. The docker server is supposed to run on VLAN 30 with the IP 10.23.30.40. I tried to draw the setup of the network. Sorry for the mad powerpoint skills:

The VM runs well when it runs, but freezes on an irregular basis. Before I had to kill the container with the “kill -9” command, but since I disabled memory ballooning, I can at least stop it from the Proxmox GUI. Still I see the freezes occur. Sometimes it takes less than 30 minutes, sometimes close to 24h. I think based on some googling and looking at the logs I narrowed it down to a network issue based on what I see in the PVE syslog and the docker kern.log:

PVE syslog:

Docker kern.log:

dmesg|grep block|grep vmbr

lspci -nnk | grep Eth -C 2

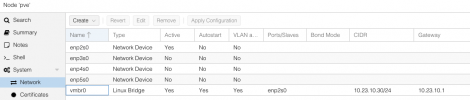

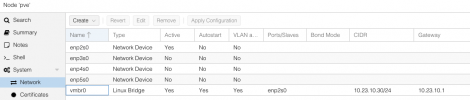

Please find the pve network setup as well as the pfsense and docker network setup below

PVE:

pfSense:

Docker VM:

My overall assumption is that I messed up the network connection, although it works fine apart from the freezes . Based on this thread (https://forum.proxmox.com/threads/s...ple-nic-network-and-routing-on-proxmox.81897/) I tried to configure an additional bridge for the docker VM, but I was not successful. Unfortunately, I cannot separate into two NICs, as I do not have any ports left on the switch, so everything needs to go through one NIC if possible.

. Based on this thread (https://forum.proxmox.com/threads/s...ple-nic-network-and-routing-on-proxmox.81897/) I tried to configure an additional bridge for the docker VM, but I was not successful. Unfortunately, I cannot separate into two NICs, as I do not have any ports left on the switch, so everything needs to go through one NIC if possible.

It would be great, if someone has an idea on how to do this.

I have built the following setup: Mini-PC from AliExpress with 8GB RAM, Intel N5105, 250 GB NVMe, 4 Intel 225 B3 connections

I run Proxmox on it (VLAN 10) and pfSense as my router on top of Proxmox. pfSense runs very well. Now my intention is to run a docker-server as a VM (VLAN 30) in parallel to pfSense. I passthrough 3 of the 4 network ports to pfSense. I only use two of them, one for WAN and one for LAN. The one that is not passed through is used to access the proxmox GUI. This is very stable as well. The proxmox server runs on VLAN 10 and the IP 10.23.10.30. The docker server is supposed to run on VLAN 30 with the IP 10.23.30.40. I tried to draw the setup of the network. Sorry for the mad powerpoint skills:
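To make the addressing above explicit: I only listed IPs and VLAN IDs, not netmasks. The following minimal Python sketch assumes a /24 per VLAN (an assumption, adjust `PREFIX` to the real netmask) and checks whether the Proxmox host and the Docker VM end up in the same subnet. With /24 they do not, so traffic between them has to be routed, presumably by pfSense.

```python
import ipaddress

# IPs and VLAN IDs as described in this post.
# The /24 prefix length is an ASSUMPTION; the post does not state the netmask.
HOSTS = {
    "pve": ("10.23.10.30", 10),
    "docker-vm": ("10.23.30.40", 30),
}
PREFIX = 24  # assumed


def subnet_of(ip: str, prefix: int = PREFIX) -> ipaddress.IPv4Network:
    """Return the network an address belongs to for the given prefix length."""
    return ipaddress.ip_interface(f"{ip}/{prefix}").network


def same_subnet(a: str, b: str, prefix: int = PREFIX) -> bool:
    """True if both addresses fall into the same subnet (no routing needed)."""
    return subnet_of(a, prefix) == subnet_of(b, prefix)


if __name__ == "__main__":
    for name, (ip, vlan) in HOSTS.items():
        print(f"{name:10} VLAN {vlan:>2}  {ip:<12} -> {subnet_of(ip)}")
    pve_ip, docker_ip = HOSTS["pve"][0], HOSTS["docker-vm"][0]
    if same_subnet(pve_ip, docker_ip):
        print("PVE and Docker VM share a subnet")
    else:
        # Inter-VLAN traffic needs a router; in this setup presumably pfSense.
        print("PVE and Docker VM are in different subnets, traffic between them is routed")
```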

The VM runs well while it is up, but freezes at irregular intervals: sometimes after less than 30 minutes, sometimes after close to 24 hours. Previously I had to kill it with `kill -9`; since I disabled memory ballooning, I can at least stop it from the Proxmox GUI, but the freezes still occur. Based on some googling and the logs, I think I have narrowed it down to a network issue, judging by what I see in the PVE syslog and the Docker VM's kern.log.
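Since the freezes happen at irregular intervals, one way to test the network theory is to check whether the bridge-port messages in the `dmesg` output further down line up with the freeze times. Below is a minimal Python sketch for that. It only handles the `[seconds] bridge: port N(iface) entered STATE state` format shown in this post, and the one-second window used to merge duplicate lines is my own assumption. It groups the messages per interface and prints the gaps between events in hours. The `dmesg` timestamps are seconds since the host booted, so they have to be related to the host's boot time before comparing them with the freezes. In names such as `fwpr140p0` and `tap140i0`, the number is the Proxmox VM ID.

```python
import re
from collections import defaultdict

# Matches dmesg lines such as:
#   [1850447.015570] vmbr0: port 2(fwpr140p0) entered blocking state
LINE_RE = re.compile(
    r"^\[\s*(?P<ts>\d+\.\d+)\]\s+(?P<bridge>\S+): port (?P<port>\d+)"
    r"\((?P<iface>[^)]+)\) entered (?P<state>\w+) state"
)


def parse_events(lines, min_gap=1.0):
    """Group bridge-port messages per interface.

    Messages less than `min_gap` seconds after the last kept event of the
    same interface are merged into it (each event above appears twice
    within a millisecond). Returns {iface: [seconds_since_boot, ...]}.
    """
    events = defaultdict(list)
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # not a bridge-port message
        ts = float(m["ts"])
        iface = m["iface"]
        if not events[iface] or ts - events[iface][-1] >= min_gap:
            events[iface].append(ts)
    return dict(events)


def gaps_in_hours(timestamps):
    """Hours between consecutive events."""
    return [(b - a) / 3600 for a, b in zip(timestamps, timestamps[1:])]


if __name__ == "__main__":
    sample = """\
[1850447.015570] vmbr0: port 2(fwpr140p0) entered blocking state
[1850447.015954] vmbr0: port 2(fwpr140p0) entered blocking state
[1855996.693535] vmbr0: port 2(fwpr140p0) entered blocking state
[1855996.693925] vmbr0: port 2(fwpr140p0) entered blocking state
[1861188.765491] vmbr0: port 2(fwpr140p0) entered blocking state
""".splitlines()
    for iface, ts in parse_events(sample).items():
        print(iface, [f"{h:.2f} h" for h in gaps_in_hours(ts)])
```

Fed with the full `dmesg` output instead of the sample, it prints one line per interface with the hours between events, which can then be held against the times the VM froze.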

PVE syslog:

```
Jul 02 07:49:45 pve kernel: vmbr0: port 2(fwpr140p0) entered blocking state
Jul 02 07:49:45 pve kernel: vmbr0: port 2(fwpr140p0) entered disabled state
Jul 02 07:49:45 pve kernel: device fwpr140p0 entered promiscuous mode
Jul 02 07:49:45 pve kernel: vmbr0: port 2(fwpr140p0) entered blocking state
Jul 02 07:49:45 pve kernel: vmbr0: port 2(fwpr140p0) entered forwarding state
Jul 02 07:49:45 pve kernel: fwbr140i0: port 1(fwln140i0) entered blocking state
Jul 02 07:49:45 pve kernel: fwbr140i0: port 1(fwln140i0) entered disabled state
Jul 02 07:49:45 pve kernel: device fwln140i0 entered promiscuous mode
Jul 02 07:49:45 pve kernel: fwbr140i0: port 1(fwln140i0) entered blocking state
Jul 02 07:49:45 pve kernel: fwbr140i0: port 1(fwln140i0) entered forwarding state
Jul 02 07:49:45 pve kernel: fwbr140i0: port 2(tap140i0) entered blocking state
Jul 02 07:49:45 pve kernel: fwbr140i0: port 2(tap140i0) entered disabled state
Jul 02 07:49:45 pve kernel: fwbr140i0: port 2(tap140i0) entered blocking state
Jul 02 07:49:45 pve kernel: fwbr140i0: port 2(tap140i0) entered forwarding state
```

Docker kern.log:

```
Jul 2 09:53:42 docker-essentials kernel: [ 6.697890] random: dbus-daemon: uninitialized urandom read (12 bytes read)
Jul 2 09:53:42 docker-essentials kernel: [ 6.719639] random: dbus-daemon: uninitialized urandom read (12 bytes read)
Jul 2 09:53:43 docker-essentials kernel: [ 7.032923] random: crng init done
Jul 2 09:53:43 docker-essentials kernel: [ 7.719477] kauditd_printk_skb: 22 callbacks suppressed
Jul 2 09:53:43 docker-essentials kernel: [ 7.719481] audit: type=1400 audit(1656770023.684:33): apparmor="STATUS" operation="profile_load" profile="unconfined" name="docker-default" pid=692 comm="apparmor_parser"
Jul 2 09:53:43 docker-essentials kernel: [ 8.003645] bridge: filtering via arp/ip/ip6tables is no longer available by default. Update your scripts to load br_netfilter if you need this.
Jul 2 09:53:43 docker-essentials kernel: [ 8.005894] Bridge firewalling registered
Jul 2 09:53:44 docker-essentials kernel: [ 8.151409] Initializing XFRM netlink socket
Jul 2 09:53:45 docker-essentials kernel: [ 9.473740] docker0: port 1(veth7ecdafa) entered blocking state
Jul 2 09:53:45 docker-essentials kernel: [ 9.473746] docker0: port 1(veth7ecdafa) entered disabled state
Jul 2 09:53:45 docker-essentials kernel: [ 9.474266] device veth7ecdafa entered promiscuous mode
Jul 2 09:53:45 docker-essentials kernel: [ 9.491819] br-cbb501991c36: port 1(veth9ed4f72) entered blocking state
Jul 2 09:53:45 docker-essentials kernel: [ 9.491826] br-cbb501991c36: port 1(veth9ed4f72) entered disabled state
Jul 2 09:53:45 docker-essentials kernel: [ 9.491974] device veth9ed4f72 entered promiscuous mode
Jul 2 09:53:45 docker-essentials kernel: [ 9.493333] docker0: port 1(veth7ecdafa) entered blocking state
Jul 2 09:53:45 docker-essentials kernel: [ 9.493338] docker0: port 1(veth7ecdafa) entered forwarding state
Jul 2 09:53:45 docker-essentials kernel: [ 9.493393] docker0: port 1(veth7ecdafa) entered disabled state
Jul 2 09:53:45 docker-essentials kernel: [ 9.498026] br-cbb501991c36: port 1(veth9ed4f72) entered blocking state
Jul 2 09:53:45 docker-essentials kernel: [ 9.498041] br-cbb501991c36: port 1(veth9ed4f72) entered forwarding state
Jul 2 09:53:45 docker-essentials kernel: [ 9.499235] br-cbb501991c36: port 1(veth9ed4f72) entered disabled state
Jul 2 09:53:46 docker-essentials kernel: [ 10.542448] eth0: renamed from veth3c1f7eb
Jul 2 09:53:46 docker-essentials kernel: [ 10.557248] IPv6: ADDRCONF(NETDEV_CHANGE): veth7ecdafa: link becomes ready
Jul 2 09:53:46 docker-essentials kernel: [ 10.557289] docker0: port 1(veth7ecdafa) entered blocking state
Jul 2 09:53:46 docker-essentials kernel: [ 10.557292] docker0: port 1(veth7ecdafa) entered forwarding state
Jul 2 09:53:46 docker-essentials kernel: [ 10.557321] IPv6: ADDRCONF(NETDEV_CHANGE): docker0: link becomes ready
Jul 2 09:53:47 docker-essentials kernel: [ 11.176329] eth0: renamed from veth23d1213
Jul 2 09:53:47 docker-essentials kernel: [ 11.198463] IPv6: ADDRCONF(NETDEV_CHANGE): veth9ed4f72: link becomes ready
Jul 2 09:53:47 docker-essentials kernel: [ 11.198500] br-cbb501991c36: port 1(veth9ed4f72) entered blocking state
Jul 2 09:53:47 docker-essentials kernel: [ 11.198504] br-cbb501991c36: port 1(veth9ed4f72) entered forwarding state
Jul 2 09:53:47 docker-essentials kernel: [ 11.198532] IPv6: ADDRCONF(NETDEV_CHANGE): br-cbb501991c36: link becomes ready
Jul 2 09:53:47 docker-essentials kernel: [ 11.616941] loop5: detected capacity change from 0 to 8
```

`dmesg|grep block|grep vmbr`:

```
[1830069.024987] vmbr0: port 3(fwpr140p0) entered blocking state
[1831218.539345] vmbr0: port 2(fwpr120p0) entered blocking state
[1831218.539772] vmbr0: port 2(fwpr120p0) entered blocking state
[1831616.071324] vmbr0: port 3(fwpr140p0) entered blocking state
[1831616.071695] vmbr0: port 3(fwpr140p0) entered blocking state
[1831874.302814] vmbr0: port 3(fwpr140p0) entered blocking state
[1831874.303237] vmbr0: port 3(fwpr140p0) entered blocking state
[1850447.015570] vmbr0: port 2(fwpr140p0) entered blocking state
[1850447.015954] vmbr0: port 2(fwpr140p0) entered blocking state
[1855996.693535] vmbr0: port 2(fwpr140p0) entered blocking state
[1855996.693925] vmbr0: port 2(fwpr140p0) entered blocking state
[1861188.765491] vmbr0: port 2(fwpr140p0) entered blocking state
[1861188.765873] vmbr0: port 2(fwpr140p0) entered blocking state
[1992063.796918] vmbr0: port 2(fwpr140p0) entered blocking state
[1992063.797301] vmbr0: port 2(fwpr140p0) entered blocking state
[2000196.489270] vmbr0: port 2(fwpr140p0) entered blocking state
[2000196.489705] vmbr0: port 2(fwpr140p0) entered blocking state
[2067786.887480] vmbr0: port 2(fwpr140p0) entered blocking state
[2067786.887926] vmbr0: port 2(fwpr140p0) entered blocking state
[2069522.311114] vmbr0: port 2(fwpr140p0) entered blocking state
[2069522.311624] vmbr0: port 2(fwpr140p0) entered blocking state
[2076945.408813] vmbr0: port 2(fwpr140p0) entered blocking state
[2076945.409203] vmbr0: port 2(fwpr140p0) entered blocking state
```

`lspci -nnk | grep Eth -C 2`:

```
	Kernel driver in use: nvme
	Kernel modules: nvme
02:00.0 Ethernet controller [0200]: Intel Corporation Ethernet Controller I225-V [8086:15f3] (rev 03)
	Subsystem: Intel Corporation Ethernet Controller I225-V [8086:0000]
	Kernel driver in use: igc
	Kernel modules: igc
03:00.0 Ethernet controller [0200]: Intel Corporation Ethernet Controller I225-V [8086:15f3] (rev 03)
	Subsystem: Intel Corporation Ethernet Controller I225-V [8086:0000]
	Kernel driver in use: vfio-pci
	Kernel modules: igc
04:00.0 Ethernet controller [0200]: Intel Corporation Ethernet Controller I225-V [8086:15f3] (rev 03)
	Subsystem: Intel Corporation Ethernet Controller I225-V [8086:0000]
	Kernel driver in use: vfio-pci
	Kernel modules: igc
05:00.0 Ethernet controller [0200]: Intel Corporation Ethernet Controller I225-V [8086:15f3] (rev 03)
	Subsystem: Intel Corporation Ethernet Controller I225-V [8086:0000]
	Kernel driver in use: vfio-pci
	Kernel modules: igc
```

Please find the PVE network setup as well as the pfSense and Docker network setup below:

PVE:

pfSense:

Docker VM:

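My overall assumption is that I messed up the network setup, although everything works fine apart from the freezes. Based on this thread (https://forum.proxmox.com/threads/s...ple-nic-network-and-routing-on-proxmox.81897/), I tried to configure an additional bridge for the Docker VM, but was not successful. Unfortunately, I cannot split this across two NICs, as I do not have any ports left on the switch, so everything needs to go through one NIC if possible.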

It would be great if someone has an idea how to do this.
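About the Docker kern.log excerpt above: it only contains messages from the first seconds after boot, so it does not show what happened right before a freeze. The following minimal Python sketch splits kern.log into boot sessions, relying on the bracketed kernel uptime starting again near zero after every reboot, and prints the last lines logged before each restart. The script name `lastwords.py` and the default of 20 lines are hypothetical choices. If the VM hangs hard, the final messages may never reach the disk, so an unremarkable tail does not rule anything out. With a persistent journal, `journalctl -k -b -1` shows the kernel messages of the previous boot as well.

```python
import re
import sys

# Extracts the seconds-since-boot value from kern.log lines such as
#   Jul 2 09:53:43 docker-essentials kernel: [ 7.032923] random: crng init done
UPTIME_RE = re.compile(r"kernel: \[\s*(\d+\.\d+)\]")


def split_boots(lines):
    """Split kern.log lines into boot sessions.

    A new session starts whenever the kernel uptime goes backwards,
    which happens after a reboot or a hard reset of the VM.
    Lines without an uptime stamp stay with the current session.
    """
    sessions, current, last_uptime = [], [], None
    for line in lines:
        m = UPTIME_RE.search(line)
        if m:
            uptime = float(m.group(1))
            if last_uptime is not None and uptime < last_uptime and current:
                sessions.append(current)
                current = []
            last_uptime = uptime
        current.append(line.rstrip("\n"))
    if current:
        sessions.append(current)
    return sessions


def tail_before_reboots(lines, n=20):
    """Return the last `n` lines of every session that ended in a restart."""
    return [s[-n:] for s in split_boots(lines)[:-1]]


if __name__ == "__main__":
    # Usage (hypothetical script name): python3 lastwords.py /var/log/kern.log
    if len(sys.argv) > 1:
        with open(sys.argv[1], errors="replace") as fh:
            for i, tail in enumerate(tail_before_reboots(fh), start=1):
                print(f"--- boot {i}: last {len(tail)} lines ---")
                print("\n".join(tail))
```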