Hi everybody,

We have a Dell PowerEdge R7525 with 2x AMD EPYC 74F3 24-core processors.

The machine contains two NVIDIA A16 graphics cards.

On this PVE host we want to run 8 virtual machines. Each VM should have one PCI device of the cards assigned to it (we used this configuration with VMware).

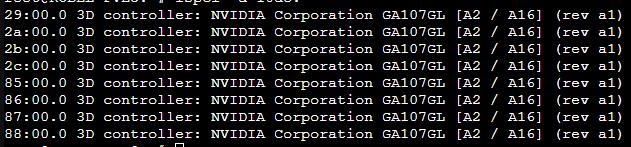

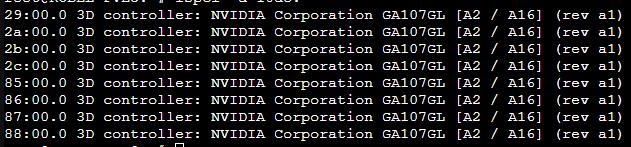

Every GPU has 4 PCI addresses:

What we have checked so far:

- IOMMU is enabled

- In /etc/modules we added the following lines:

  - vfio

  - vfio_iommu_type1

  - vfio_virqfd

  - vfio_pci

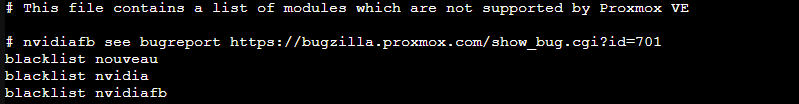

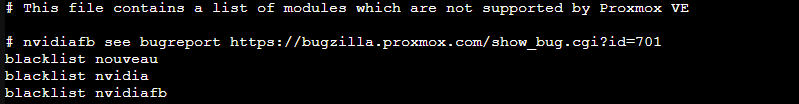

- In /etc/modprobe.d/pve-blacklist.conf we added entries for the following modules:

  - nouveau

  - nvidia

  - nvidiafb

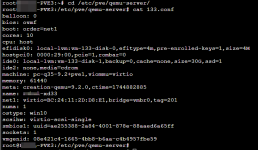

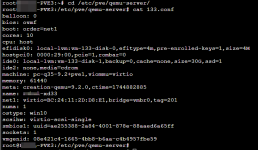
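The checks above can also be confirmed directly from sysfs on the host. The following is a minimal Python sketch, assuming the standard Linux sysfs layout (`/sys/kernel/iommu_groups` and `/sys/bus/pci/devices/<address>/`). The function names and the `sys_root` parameter are illustrative and not part of any Proxmox tool.

```python
import os


def iommu_group_count(sys_root="/sys"):
    """Return the number of IOMMU groups; 0 usually means the IOMMU is not active."""
    groups_dir = os.path.join(sys_root, "kernel", "iommu_groups")
    if not os.path.isdir(groups_dir):
        return 0
    return len(os.listdir(groups_dir))


def _link_target_name(pci_addr, attribute, sys_root):
    """Return the basename a sysfs symlink points to, or None if it is absent."""
    link = os.path.join(sys_root, "bus", "pci", "devices", pci_addr, attribute)
    if not os.path.islink(link):
        return None
    return os.path.basename(os.readlink(link))


def bound_driver(pci_addr, sys_root="/sys"):
    """Name of the kernel driver bound to the device (e.g. vfio-pci), or None."""
    return _link_target_name(pci_addr, "driver", sys_root)


def iommu_group_of(pci_addr, sys_root="/sys"):
    """IOMMU group number (as a string) the device belongs to, or None."""
    return _link_target_name(pci_addr, "iommu_group", sys_root)


def report(pci_addr, sys_root="/sys"):
    """Print a short summary for one PCI address such as 0000:29:00.0."""
    print("IOMMU groups on host:", iommu_group_count(sys_root))
    print("IOMMU group of", pci_addr, "->", iommu_group_of(pci_addr, sys_root))
    print("Driver bound to", pci_addr, "->", bound_driver(pci_addr, sys_root))
```

Calling `report()` with the full PCI address of each GPU function shows whether a host driver such as `nouveau` or `nvidia` is still bound despite the blacklist, and whether the device has an IOMMU group at all.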

The machine is configured like this:

Now let's get to the problem:

Whenever we add one of the graphics cards' PCI devices to a VM, starting the VM fails with the following error:

Task viewer: VM 133 - Start

kvm: -device vfio-pci,host=0000:29:00.0,id=hostpci0,bus=ich9-pcie-port-1,addr=0x0,rombar=0: vfio 0000:29:00.0: hardware reports invalid configuration, MSIX PBA outside of specified BAR

TASK ERROR: start failed: QEMU exited with code 1
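The wording of the error suggests that the MSI-X Pending Bit Array (PBA) offset advertised in the device's PCI configuration space does not fit inside the BAR it refers to, as seen by the host. The following is a minimal Python sketch to inspect this, assuming the standard PCI capability layout (capability ID `0x11` for MSI-X) and the sysfs `config` and `resource` files of the device. All function names are illustrative, and reading the full configuration space normally requires root.

```python
import struct

PCI_STATUS = 0x06              # status register offset
PCI_STATUS_CAP_LIST = 0x10     # bit set when a capability list exists
PCI_CAPABILITY_POINTER = 0x34  # offset of the first capability pointer
PCI_CAP_ID_MSIX = 0x11         # capability ID for MSI-X


def find_msix(config):
    """Decode the MSI-X capability from raw config space bytes, or return None."""
    if len(config) < 0x40:
        return None
    status = struct.unpack_from("<H", config, PCI_STATUS)[0]
    if not status & PCI_STATUS_CAP_LIST:
        return None
    pos = config[PCI_CAPABILITY_POINTER] & 0xFC
    seen = set()  # guards against a looping capability list
    while pos and pos not in seen and pos + 12 <= len(config):
        seen.add(pos)
        if config[pos] == PCI_CAP_ID_MSIX:
            # Message control (16 bit), table offset/BIR and PBA offset/BIR (32 bit each)
            ctrl, table, pba = struct.unpack_from("<HII", config, pos + 2)
            return {
                "entries": (ctrl & 0x7FF) + 1,
                "table_bar": table & 0x7,
                "table_offset": table & 0xFFFFFFF8,
                "pba_bar": pba & 0x7,
                "pba_offset": pba & 0xFFFFFFF8,
            }
        pos = config[pos + 1] & 0xFC
    return None


def bar_sizes(resource_text):
    """Sizes of BAR0..BAR5 from the sysfs 'resource' file (0 if unassigned)."""
    sizes = []
    for line in resource_text.splitlines()[:6]:
        start, end, _flags = (int(field, 16) for field in line.split())
        sizes.append(end - start + 1 if end else 0)
    return sizes


def pba_inside_bar(msix, sizes):
    """True if the PBA offset lies inside the BAR selected by the PBA BIR."""
    bar = msix["pba_bar"]
    return bar < len(sizes) and msix["pba_offset"] < sizes[bar]


def check_device(pci_addr, sys_root="/sys"):
    """Read config space and BAR sizes for one device and print the comparison."""
    base = f"{sys_root}/bus/pci/devices/{pci_addr}"
    with open(f"{base}/config", "rb") as fh:
        msix = find_msix(fh.read())
    with open(f"{base}/resource") as fh:
        sizes = bar_sizes(fh.read())
    print("MSI-X:", msix)
    print("BAR sizes:", [hex(size) for size in sizes])
    if msix is not None:
        print("PBA inside BAR:", pba_inside_bar(msix, sizes))
```

Running `check_device("0000:29:00.0")` as root on the host prints the decoded MSI-X fields next to the BAR sizes. A BAR size of 0 for the BAR named in `pba_bar` would mean the host has not assigned that BAR, which would be consistent with the message above.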

Does anyone have any ideas or suggestions as to what could be causing this?

Many thanks in advance!
