Hi all,

I have to move a 4-node cluster (nodes 1, 2, 3, 4) from subnet A to subnet B. This is what I have done so far:

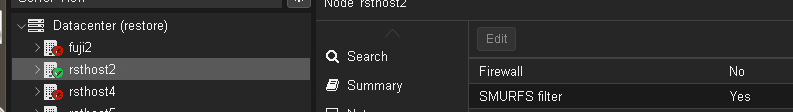

- Added firewall rules so that A and B can reach each other fully (tested, OK; the rules will be removed once the change has succeeded).

On node 1:

- Edited the network settings in the web interface of node 1, moving its IP from A to B.
- Edited /etc/pve/corosync.conf, changing the IP of node 1 and incrementing config_version by 1.
- Applied the configuration.
- Reconnected to the web interface at the new IP address and requested a reboot of the node.

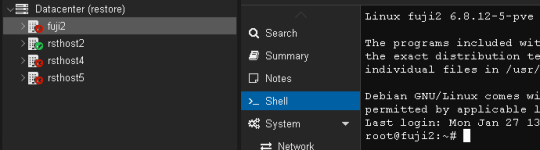

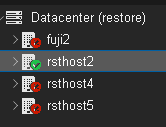

After the reboot, I can connect to node 1's web interface and I see the node up, but all other nodes are shown as offline.

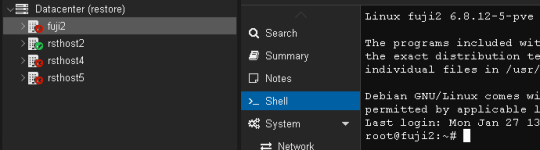

However, if I navigate to an "offline" node, I can still open, for example, its shell.

What am I still missing or overlooking? Is some service restricted to the node's own network?

TIA

edit:

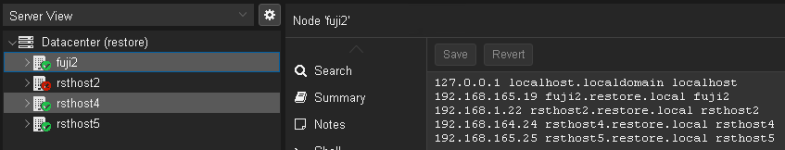

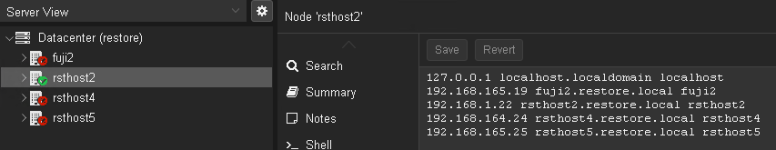

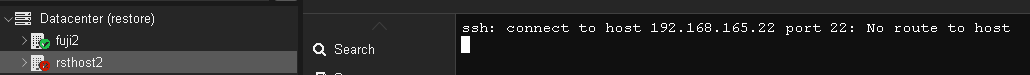

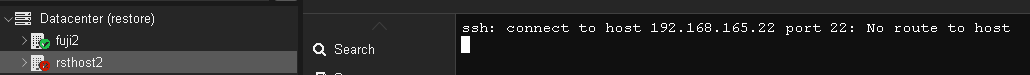

I noticed that even after rebooting another node, when it tries to connect to node 1 it still uses the old IP.

Did I miss updating some other config?
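For anyone wanting to check which address a given corosync.conf actually lists for each node, here is a minimal Python sketch. It assumes the usual nodelist layout with one ring0_addr per node and a config_version entry in the totem section. The node names, addresses, subnet and version number in SAMPLE are made up for illustration, and the helper names (node_addresses, config_version, outside_subnet) are hypothetical, not part of any Proxmox or corosync tooling.

```python
import ipaddress
import re

# Hypothetical sample; real files will have more fields and other values.
SAMPLE = """
nodelist {
  node {
    name: node1
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 10.0.2.11
  }
  node {
    name: node2
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 10.0.1.12
  }
}
totem {
  cluster_name: demo
  config_version: 5
}
"""


def node_addresses(conf_text):
    """Return {node name: ring0_addr} for every node block found."""
    nodes = {}
    # Match only innermost "node { ... }" blocks (no nested braces inside).
    for block in re.findall(r"node\s*\{([^{}]*)\}", conf_text):
        fields = {}
        for line in block.splitlines():
            if ":" in line:
                key, value = line.split(":", 1)
                fields[key.strip()] = value.strip()
        if "name" in fields and "ring0_addr" in fields:
            nodes[fields["name"]] = fields["ring0_addr"]
    return nodes


def config_version(conf_text):
    """Return config_version as an int, or None if it is not present."""
    match = re.search(r"^\s*config_version:\s*(\d+)", conf_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def outside_subnet(nodes, subnet):
    """Return the nodes whose address is not inside the given subnet.

    Values that are not IP literals (e.g. hostnames) are also returned,
    because this sketch cannot verify them without resolving the name.
    """
    network = ipaddress.ip_network(subnet)
    flagged = {}
    for name, addr in nodes.items():
        try:
            if ipaddress.ip_address(addr) not in network:
                flagged[name] = addr
        except ValueError:
            flagged[name] = addr
    return flagged


if __name__ == "__main__":
    nodes = node_addresses(SAMPLE)
    print("config_version:", config_version(SAMPLE))
    print("not yet in subnet B:", outside_subnet(nodes, "10.0.2.0/24"))
```

To use it on a real node, one could read the file contents instead of SAMPLE, for example the cluster-wide /etc/pve/corosync.conf and the node-local /etc/corosync/corosync.conf, and compare the results between nodes. Whether a mismatch there explains the old IP still being used is an assumption to verify, not a confirmed diagnosis.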
