We'd like to get away from hardware RAID and move to distributed storage. I've been reading a number of posts about 3-node Ceph clusters and how that's not ideal, but I see that many people have implemented it without any issues, and we'll add a 4th node sometime in the future. I'd like to make sure we're approaching this the right way.

We're not looking for a fully highly available, fault-tolerant system for now, but it would be nice to be able to distribute our services and take a server down for physical maintenance or upgrades, or lose one to a failure, without some of our services being unavailable during that time and without having to migrate each time. I am aware of the risk when only two servers are available in the pool; this will be mitigated when the 4th node is added, and we have full backup systems in place should a disaster take place. The idea of being able to build out our systems as we grow, and how easily Ceph can help us do that, is very appealing.

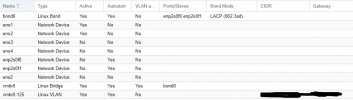

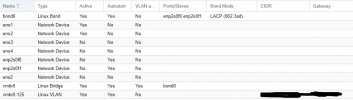

Currently each server has 20 cores and 48GB+ of RAM, enterprise SSDs for CTs/VMs and OSDs, spinners for bulk storage, 1x 10GbE port, and 2 or 4 1GbE ports (we will likely upgrade all of them to have 4 1GbE ports). The 1GbE ports are bonded and have VLAN-aware bridges. The Proxmox hosts are part of a management VLAN and serve a number of Linux containers and a couple of Windows VMs on two other VLANs. We'll likely start with 2-3 OSDs on each server.

The image below shows the proposed network setup. There are also a few local workstations in the mix.

What's the ideal network setup for this? I'm a bit confused about how to create the Ceph networks and which networks to connect them to, especially with all the VLANs we have set up. Should the Ceph public and private networks both be on the 10GbE network? Should I create a separate Corosync VLAN as well?

Thanks for your help!
